Flink monitoring

With the Flink plugin, you can monitor and submit Apache Flink jobs.

Typical workflow:

Monitor Flink jobs using the dedicated tool window that reflects the Apache Flink Dashboard

Connect to a Flink server

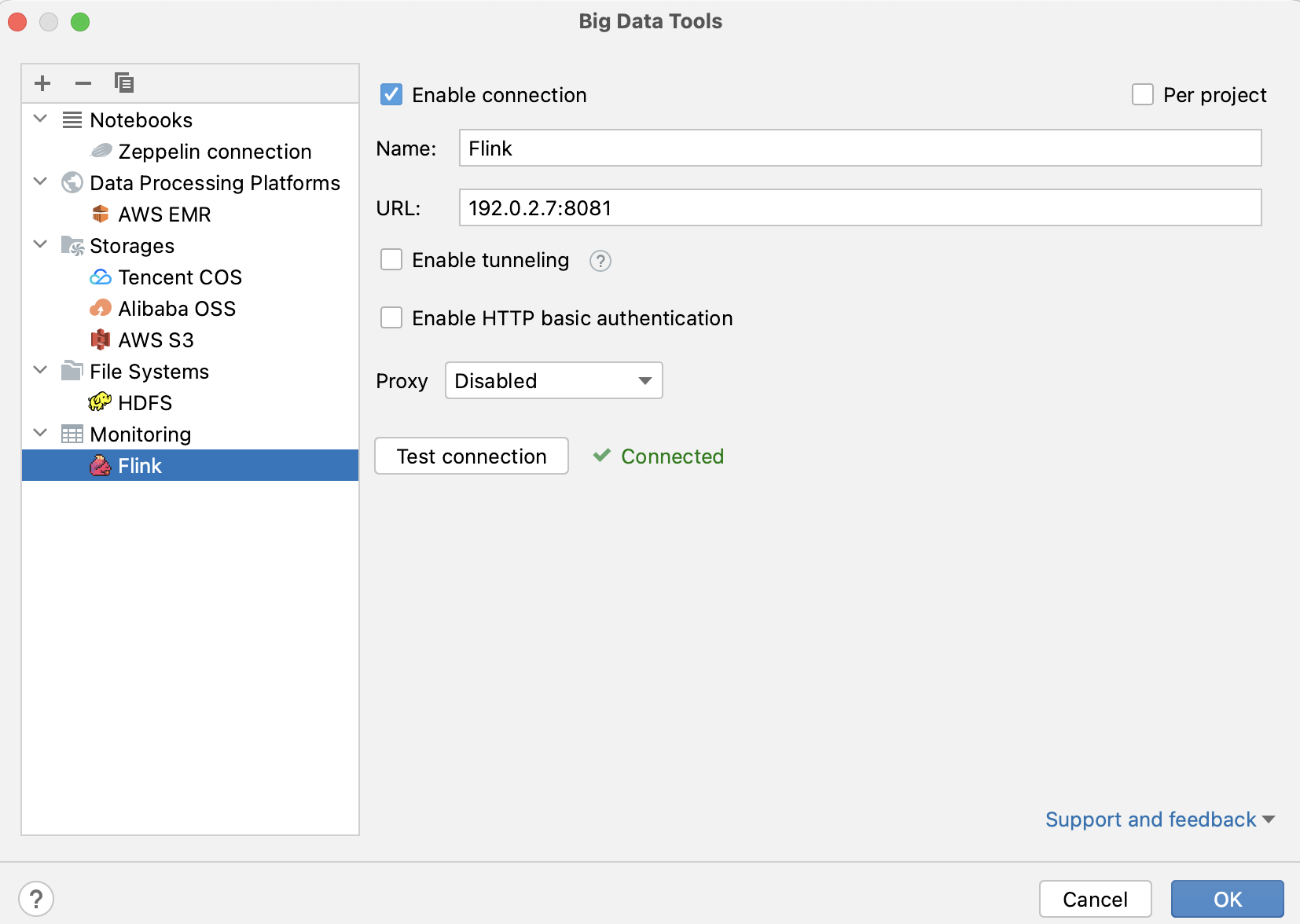

In the Big Data Tools window, click the button for adding a connection and select Flink.

In the Big Data Tools dialog that opens, specify the connection parameters:

Name: the name of the connection, used to distinguish it from other connections.

URL: specify the URL of your Apache Flink Dashboard.

Optionally, you can set up:

Per project: select to enable these connection settings only for the current project. Clear the checkbox if you want this connection to be visible in other projects.

Enable connection: clear the checkbox if you want to disable this connection. By default, newly created connections are enabled.

Enable tunneling: creates an SSH tunnel to the remote host. It can be useful if the target server is in a private network but an SSH connection to the host in the network is available.

Select the checkbox and specify a configuration of an SSH connection (click ... to create a new SSH configuration).

Enable HTTP basic authentication: connect using HTTP basic authentication with the specified username and password.

Proxy: select if you want to use IDE proxy settings or if you want to specify custom proxy settings.

Once you fill in the settings, click Test connection to ensure that all configuration parameters are correct. Then click OK.
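Outside the IDE, a comparable connectivity check can be sketched against the Flink REST API, which is served from the same address as the Dashboard. The snippet below is a minimal sketch, not a description of what Test connection does internally: the function names are hypothetical, `http://localhost:8081` is only an assumed example address, and the optional credentials correspond to the HTTP basic authentication setting.

```python
import base64
import json
import urllib.request


def build_overview_request(dashboard_url, username=None, password=None):
    """Build a GET request for the cluster overview endpoint (/overview)."""
    url = dashboard_url.rstrip("/") + "/overview"
    request = urllib.request.Request(url, method="GET")
    if username is not None and password is not None:
        # HTTP basic authentication: base64 of "username:password".
        raw = f"{username}:{password}".encode("utf-8")
        token = base64.b64encode(raw).decode("ascii")
        request.add_header("Authorization", f"Basic {token}")
    return request


def check_connection(request, timeout=5.0):
    """Send the request and return the parsed JSON overview.

    Raises urllib.error.URLError (or HTTPError) if the server
    cannot be reached or rejects the request.
    """
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    # Assumed example address; replace with your Dashboard URL.
    overview = check_connection(build_overview_request("http://localhost:8081"))
    print(overview)
```

If the call returns a JSON document instead of raising an error, the URL and credentials are usable for a connection.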

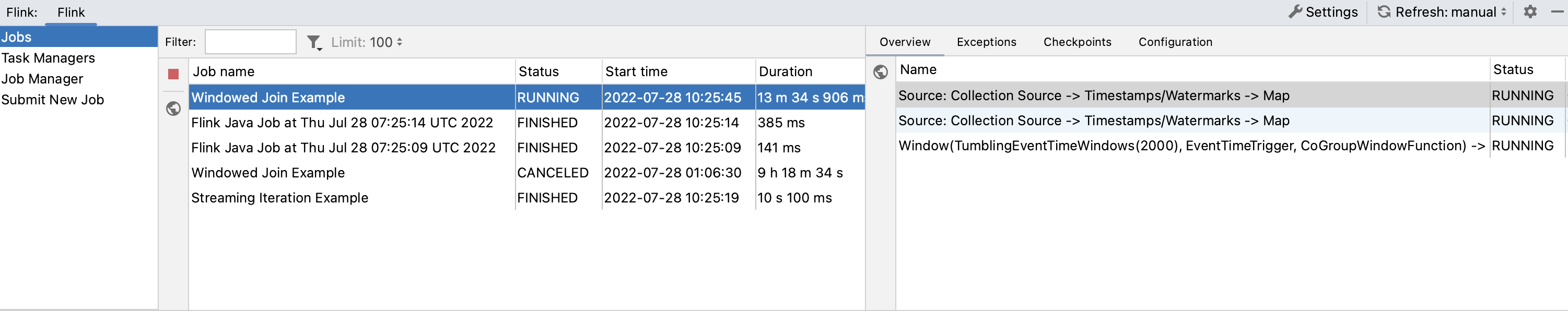

Once you have established a connection to the Flink server, the Flink tool window appears, which reflects the Apache Flink Dashboard.

Preview jobs, their configuration, exceptions, and checkpoints. Use the Filter field to filter jobs by name, or click the filter button to filter them by status. If you have a running job, you can click the stop button to terminate it.
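The same operations can be approximated with the Flink REST API. The following is a minimal sketch under stated assumptions: it reads job summaries from the `/jobs/overview` endpoint, filters them by name and state on the client side, and builds a `PATCH /jobs/<job id>?mode=cancel` request to terminate a job. The function names and the example address are hypothetical, and the plugin itself may work differently.

```python
import json
import urllib.request


def fetch_jobs(dashboard_url, timeout=5.0):
    """Return the list of job summaries from /jobs/overview."""
    url = dashboard_url.rstrip("/") + "/jobs/overview"
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))["jobs"]


def filter_jobs(jobs, name_contains="", state=None):
    """Keep jobs whose name contains the text (case-insensitive)
    and, if a state is given, whose state matches it exactly."""
    needle = name_contains.lower()
    return [
        job for job in jobs
        if needle in job.get("name", "").lower()
        and (state is None or job.get("state") == state)
    ]


def build_cancel_request(dashboard_url, job_id):
    """Build the PATCH request that asks Flink to cancel a job."""
    url = f"{dashboard_url.rstrip('/')}/jobs/{job_id}?mode=cancel"
    return urllib.request.Request(url, method="PATCH")


if __name__ == "__main__":
    base = "http://localhost:8081"  # assumed example address
    for job in filter_jobs(fetch_jobs(base), state="RUNNING"):
        print(job["jid"], job["name"], job["state"])
```

To terminate a job, pass the result of `build_cancel_request` to `urllib.request.urlopen`.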

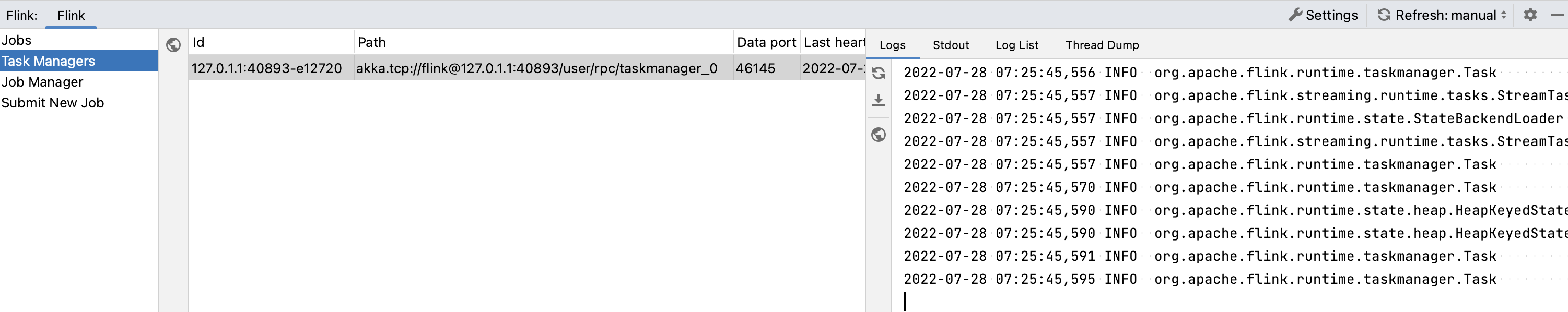

View nodes that run your tasks, view and download their logs, stdout, and thread dumps.

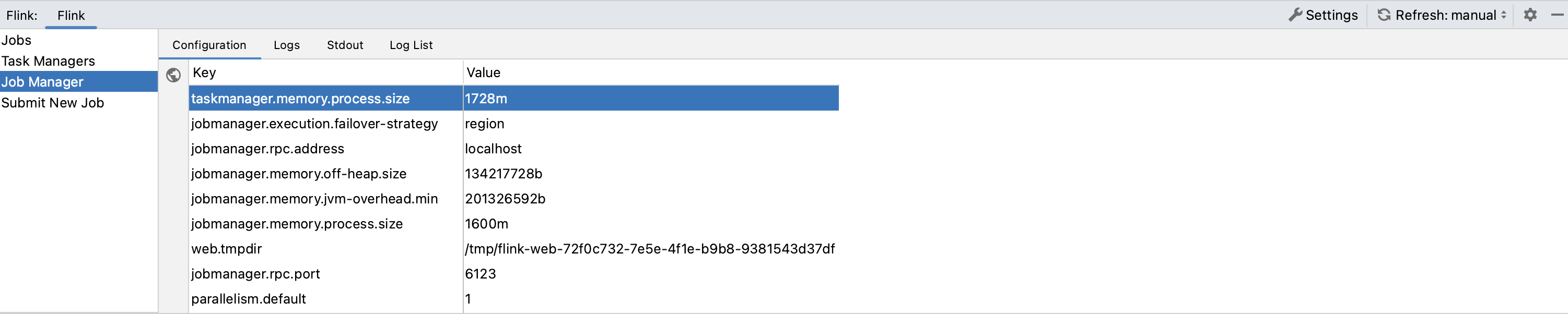

View the Job Manager, view and download logs and stdout.

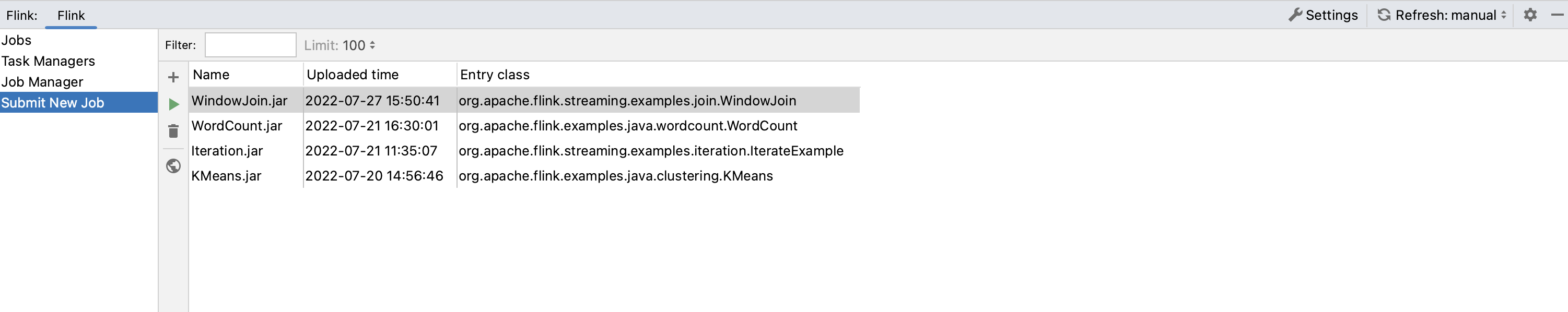

View uploaded JAR files, filter them by name or entry class, and submit new Flink jobs.

Submit New Job

In the Flink tool window, open the Submit New Job tab.

If the JAR file of your application has not been uploaded to the Flink cluster yet, click the upload button and select the file.

Select the uploaded file and click the submit button.

In the Submit JAR file window that opens, configure the following parameters:

Allow non-restored state: allows skipping savepoint state that cannot be mapped to the new program (equivalent to the allowNonRestoredState option).

Entry class: enter the program entry class.

Program arguments: enter the program arguments.

Parallelism: enter the number of parallel instances of a task. Leave it blank if you need only one task instance.

Savepoint path: enter the path of the job’s execution state image (savepoint).
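For reference, these parameters have counterparts in the Flink REST API request that runs an uploaded JAR (`POST /jars/<jar id>/run`). The sketch below shows one way the dialog fields could map onto that request body. It is an illustration under assumptions, not the plugin's implementation: the function name, the example address, and the choice to split the argument string into `programArgsList` are all hypothetical.

```python
import json
import shlex
import urllib.parse
import urllib.request


def build_run_request(dashboard_url, jar_id, entry_class=None,
                      program_args=None, parallelism=None,
                      savepoint_path=None, allow_non_restored_state=False):
    """Build a POST request for /jars/<jar id>/run.

    Fields left empty are omitted so that the server falls back
    to its defaults.
    """
    body = {}
    if entry_class:
        body["entryClass"] = entry_class
    if program_args:
        # Split the argument string the way a shell would.
        body["programArgsList"] = shlex.split(program_args)
    if parallelism is not None:
        body["parallelism"] = int(parallelism)
    if savepoint_path:
        body["savepointPath"] = savepoint_path
        # Only meaningful when restoring from a savepoint.
        body["allowNonRestoredState"] = bool(allow_non_restored_state)

    jar = urllib.parse.quote(jar_id, safe="")
    url = f"{dashboard_url.rstrip('/')}/jars/{jar}/run"
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # All values here are made-up examples.
    request = build_run_request(
        "http://localhost:8081", "example_job.jar",
        entry_class="com.example.Main", parallelism=2,
    )
    print(request.full_url)
    print(request.data.decode("utf-8"))
```

The JAR ID is the identifier that the cluster assigns on upload; it can be read from the `/jars` endpoint.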

Once you have submitted the job, you can preview its status, start time, and other parameters in the Jobs tab.