Manage AI Enterprise

AI Enterprise lets you use different providers of AI services across your organization: JetBrains AI or a custom solution, such as OpenAI Platform, Azure OpenAI, Google Vertex AI, Amazon Bedrock, or Hugging Face.

You can enable all options and then choose a preferred provider for specific user profiles.

Enabling AI Enterprise for your organization involves the following steps:

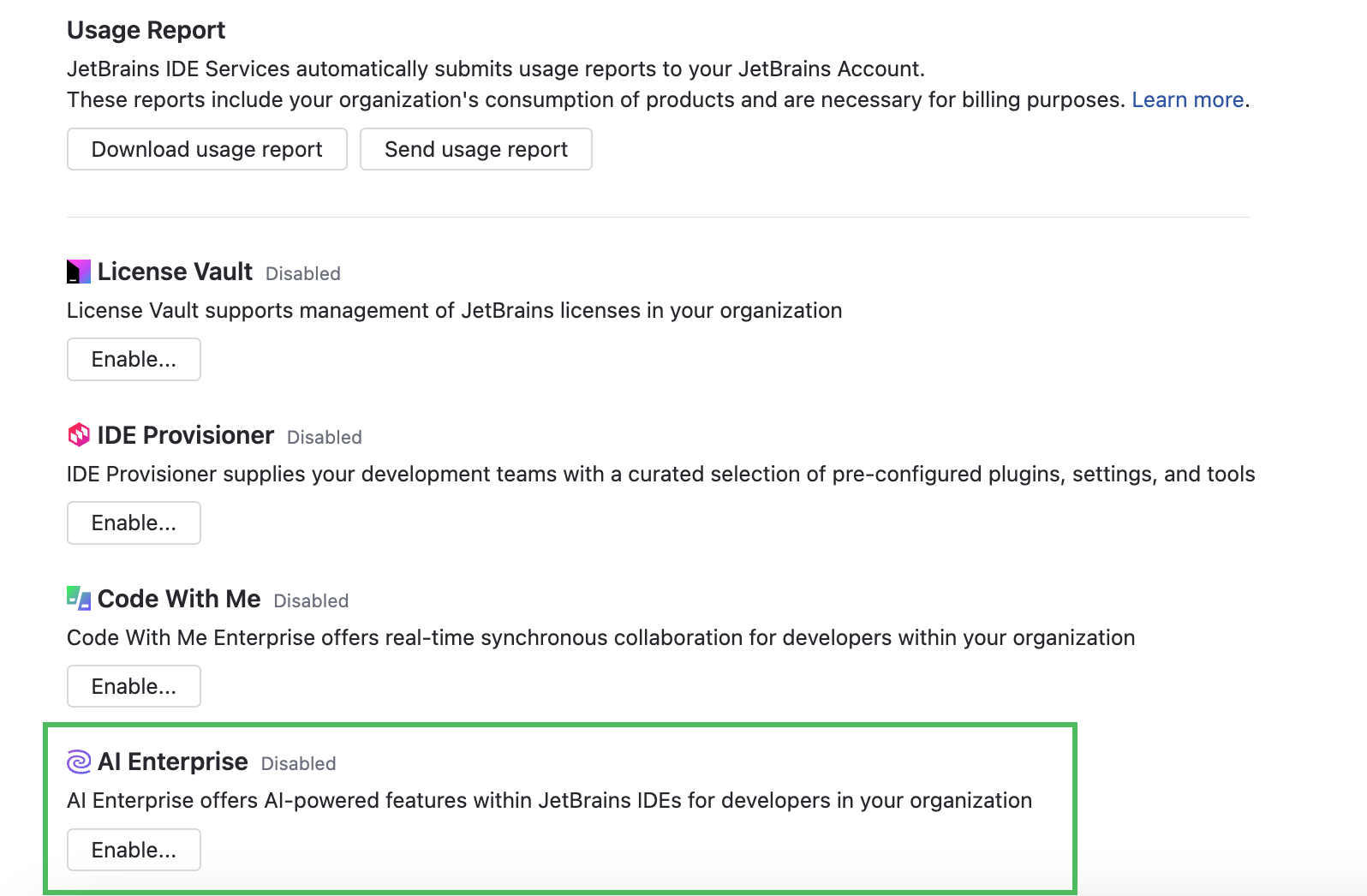

In the Web UI, open the Configuration page and navigate to the License & Activation tab.

Scroll down to the AI Enterprise section and click Enable:

In the Enable AI Enterprise dialog, choose one of the AI providers:

If you'd like to use different AI providers for specific profiles, you can easily add and enable an additional provider at any time.

After enabling AI Enterprise in your organization, you need to select and enable an AI provider for relevant profiles. Until then, developers won't have access to AI features and the AI Assistant plugin.

Make sure developers are connected to IDE Services Server through the Toolbox App. Otherwise, they won't be able to use the provisioned AI features.

Use the JetBrains AI service

By default, the AI features in JetBrains products are powered by the JetBrains AI service. This service transparently connects you to different large language models (LLMs) and enables specific AI-powered features within JetBrains products. It's driven by OpenAI and Google as the primary third-party providers, as well as several proprietary JetBrains models. JetBrains AI is deployed as a cloud solution on the JetBrains side and does not require any additional configuration on your part.

Enable AI provider: JetBrains AI

In the AI Enterprise section, click Enable.

In the Enable AI Enterprise dialog, select the JetBrains AI provider.

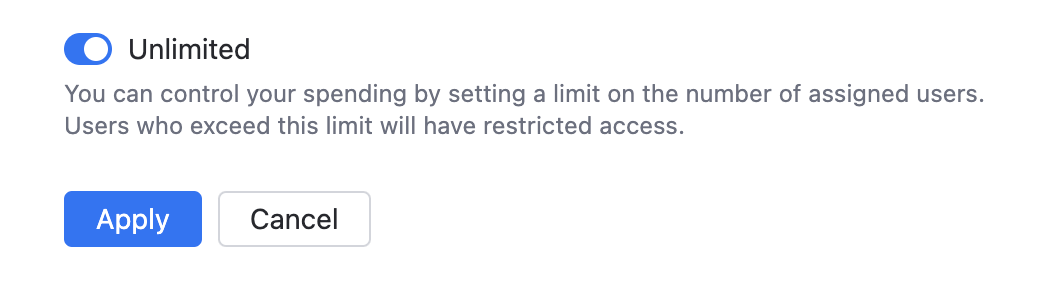

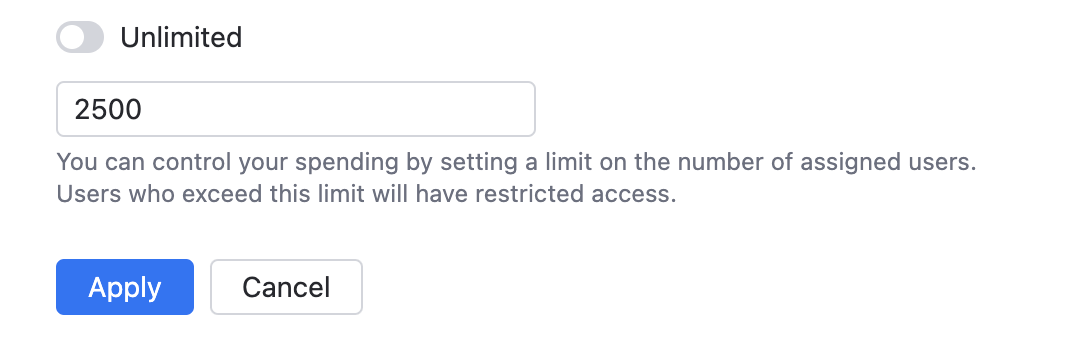

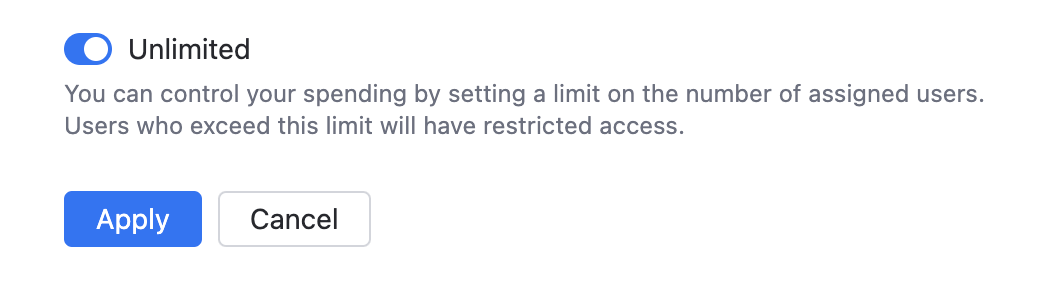

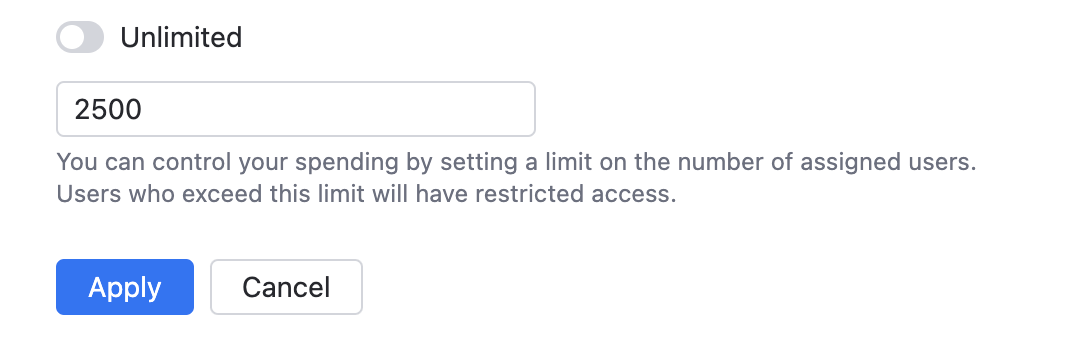

Set the usage limit for AI Enterprise.

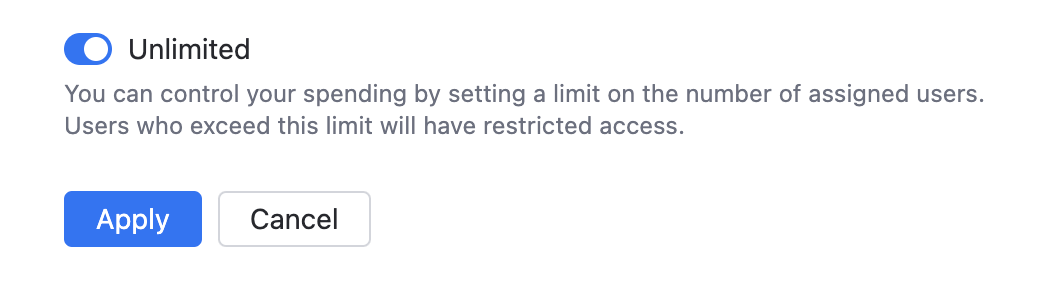

Enable the Unlimited option.

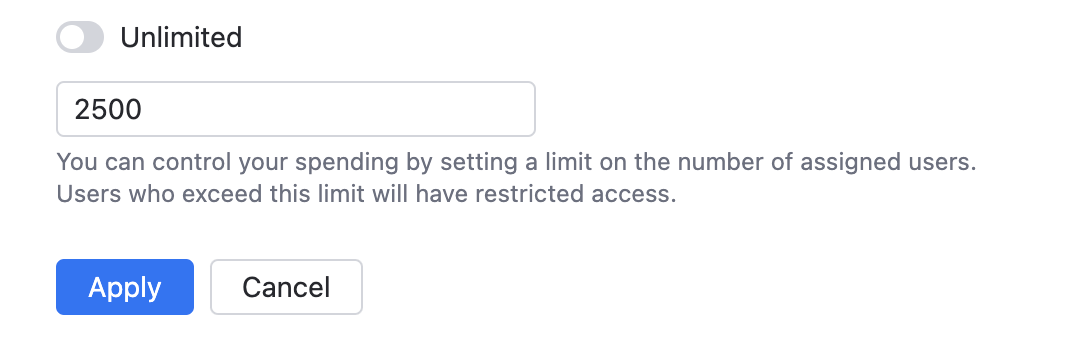

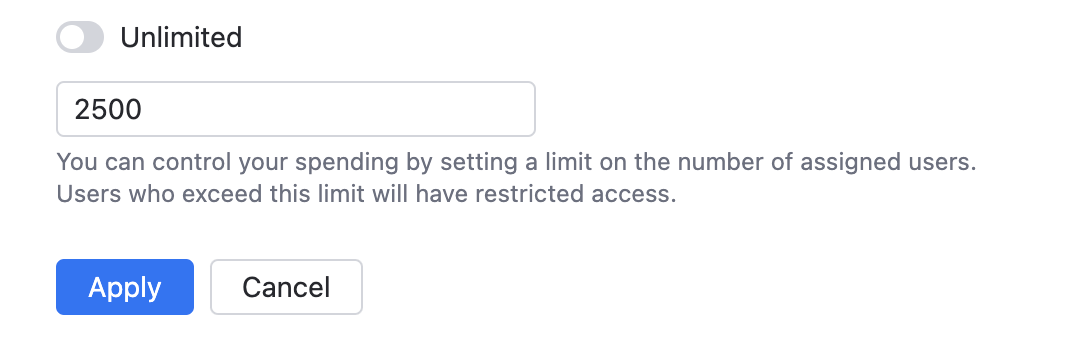

Disable the Unlimited option and specify the limit on the number of AI Enterprise users.

Click Apply.

Use custom models

AI Enterprise works with Google Vertex AI, Amazon Bedrock, and selected presets powered by OpenAI. You can also connect to on-premises LLMs using Hugging Face.

OpenAI Platform

Before starting, make sure to set up your OpenAI Platform account and get an API key for authentication. For more information, refer to the OpenAI documentation.
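Before entering the key in IDE Services, you may want to confirm that it authenticates against the endpoint. The following is a minimal sketch, not part of IDE Services: it assumes the standard OpenAI REST API, where a GET request to the `models` path with a Bearer token returns the models visible to the key. The function names and the `OPENAI_API_KEY` variable name are illustrative.

```python
import json
import urllib.request


def build_models_request(endpoint: str, api_key: str) -> urllib.request.Request:
    """Build a GET request for the model list at <endpoint>/models."""
    url = endpoint.rstrip("/") + "/models"
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {api_key}"})


def list_model_ids(endpoint: str, api_key: str) -> list[str]:
    """Return the model IDs visible to this key.

    Raises urllib.error.HTTPError (for example, 401) if the key is rejected.
    """
    request = build_models_request(endpoint, api_key)
    with urllib.request.urlopen(request, timeout=30) as response:
        payload = json.load(response)
    return [item["id"] for item in payload["data"]]


# Example call (performs a network request, so it is left commented out):
# import os
# print(list_model_ids("https://api.openai.com/v1", os.environ["OPENAI_API_KEY"]))
```

If the call succeeds, the returned list also shows whether optional models such as GPT-4o are available on your account.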

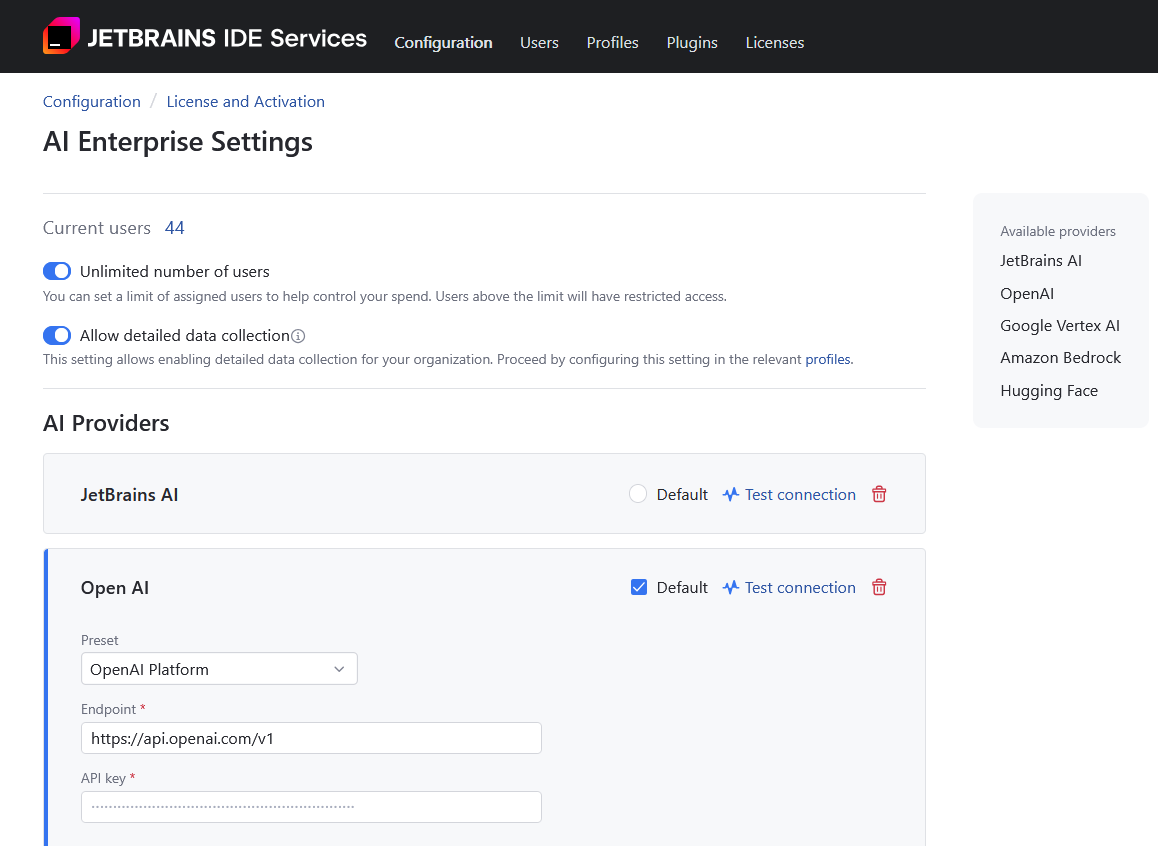

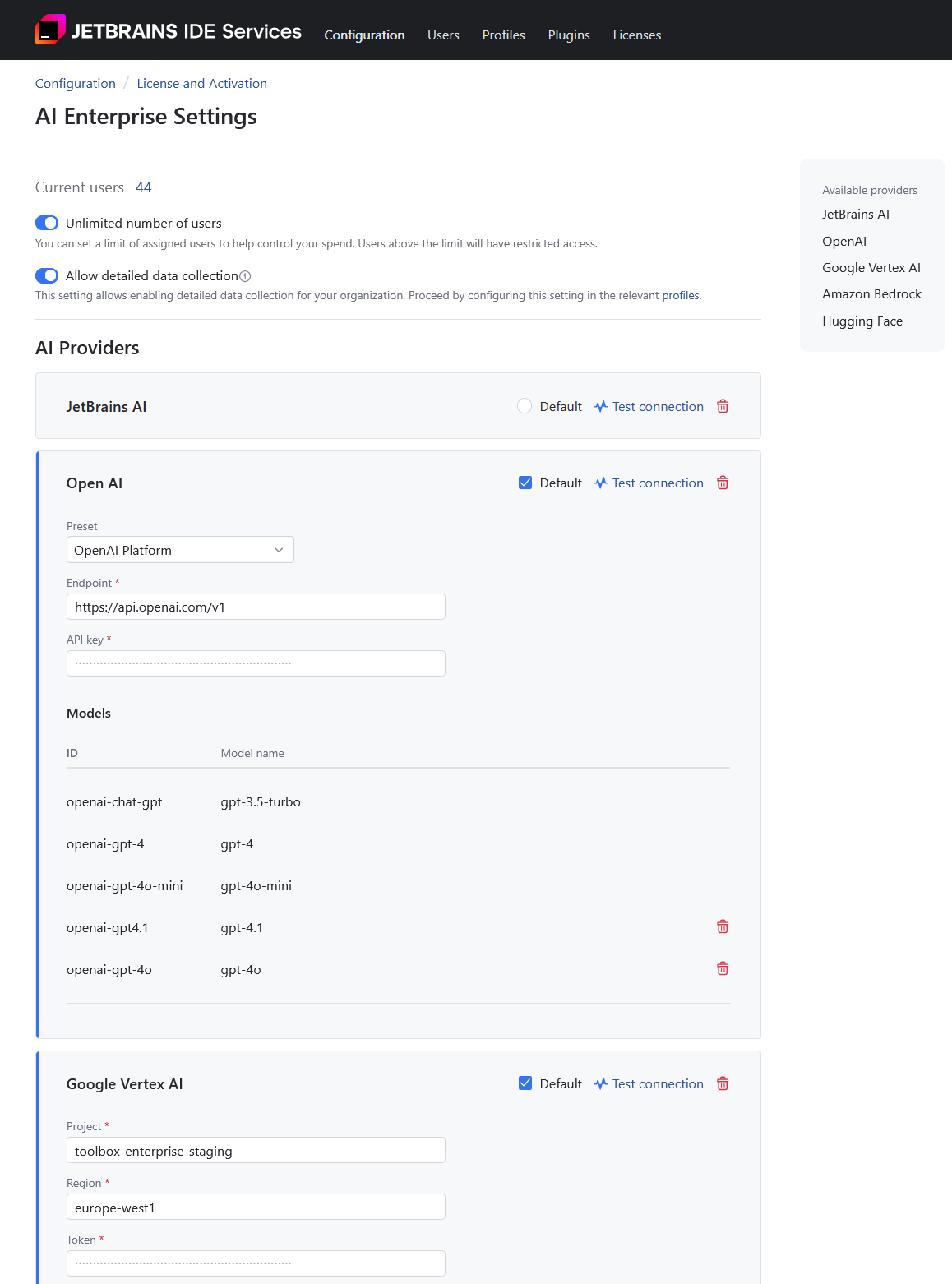

Enable AI provider: OpenAI Platform

In the AI Enterprise section, click Enable.

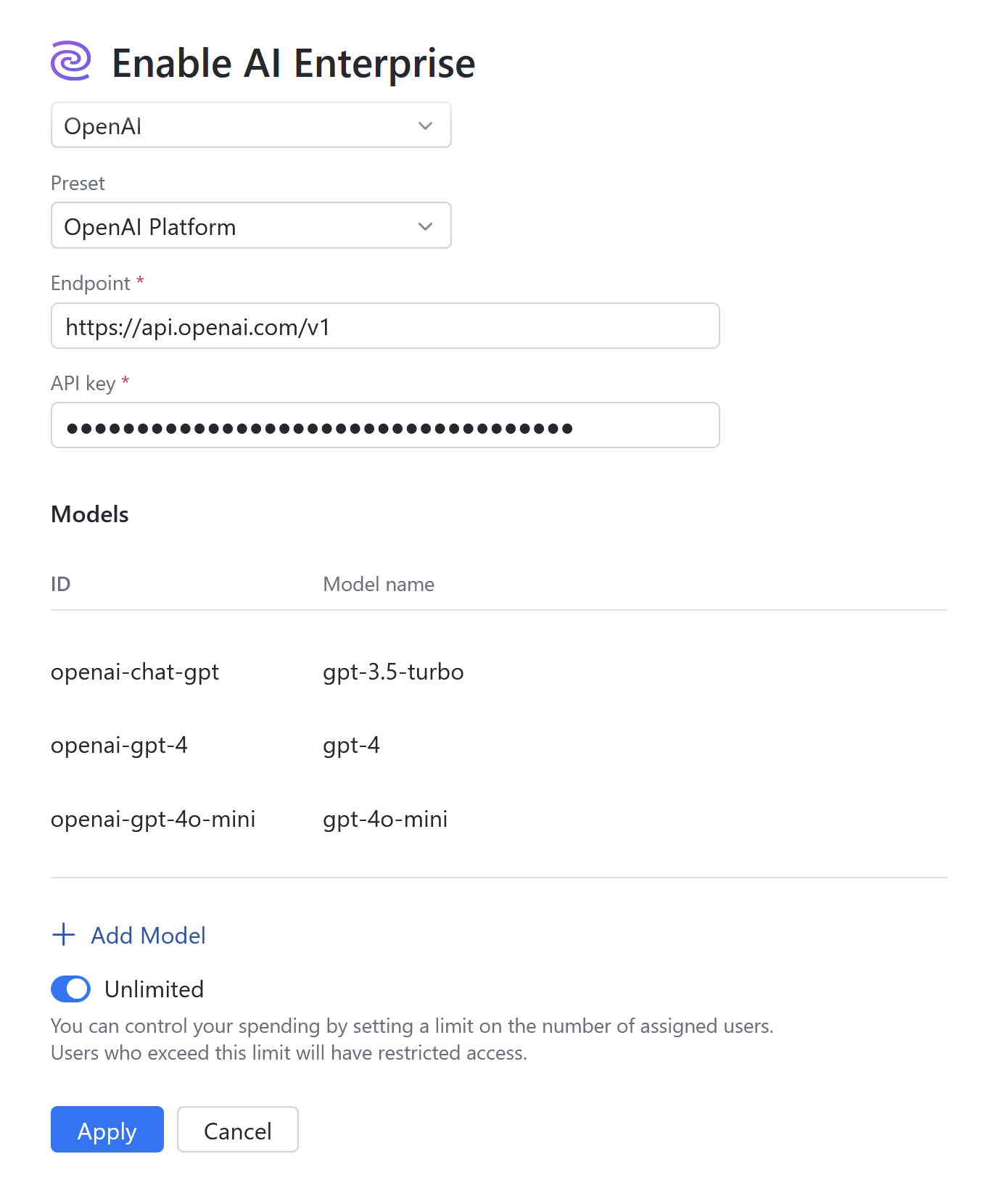

In the Enable AI Enterprise dialog, specify the following details:

Select the OpenAI provider.

Select OpenAI Platform from the Preset list.

Provide an endpoint for communicating with the OpenAI service. For example, https://api.openai.com/v1.

Provide your API key to authenticate to the OpenAI API. For more details, refer to the OpenAI documentation.

(Optional) AI Enterprise uses the GPT-3.5-Turbo, GPT-4, and GPT-4o mini models for AI-powered features within JetBrains products. However, if you have the GPT-4o model available on your account, we recommend adding it to the list by clicking Add optional model.

Set the usage limit for AI Enterprise in one of the following ways:

To allow any number of users, enable the Unlimited option.

To cap usage, disable the Unlimited option and specify the limit on the number of AI Enterprise users.

Click Apply.

Azure OpenAI

Before enabling Azure OpenAI as your provider, you need to complete the following steps:

Deploy the required models: GPT-3.5-Turbo, GPT-4.

Obtain the endpoint and API key. Navigate to

Obtain the deployment names of your models. You can find them at

Once you have completed the above preparation steps, you can enable Azure OpenAI in your IDE Services.
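To see how the endpoint, API key, and deployment name fit together, here is a hedged sketch of a single request to an Azure OpenAI deployment. It assumes the public Azure OpenAI REST layout (`/openai/deployments/<deployment>/chat/completions` with an `api-key` header). The `API_VERSION` value is an assumption, so substitute a version your resource supports. The function name and the `my-gpt4` deployment name are hypothetical.

```python
import json
import urllib.parse
import urllib.request

# Assumption: replace with an API version that your Azure resource supports.
API_VERSION = "2024-02-01"


def build_chat_request(endpoint: str, deployment: str, api_key: str,
                       api_version: str = API_VERSION) -> urllib.request.Request:
    """Build a minimal chat completion request for one model deployment."""
    url = (
        f"{endpoint.rstrip('/')}/openai/deployments/"
        f"{urllib.parse.quote(deployment, safe='')}/chat/completions"
        f"?api-version={urllib.parse.quote(api_version, safe='')}"
    )
    # A one-token reply is enough to prove that the deployment answers.
    body = json.dumps({
        "messages": [{"role": "user", "content": "ping"}],
        "max_tokens": 1,
    }).encode("utf-8")
    headers = {"api-key": api_key, "Content-Type": "application/json"}
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


# Example call (performs a network request, so it is left commented out):
# req = build_chat_request("https://YOUR_RESOURCE_NAME.openai.azure.com", "my-gpt4", "<API key>")
# with urllib.request.urlopen(req, timeout=30) as resp:
#     print(resp.status)
```

Running the check once per deployment name confirms that each name you plan to enter in IDE Services resolves to a working deployment.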

Enable AI provider: Azure OpenAI

In the AI Enterprise section, click Enable.

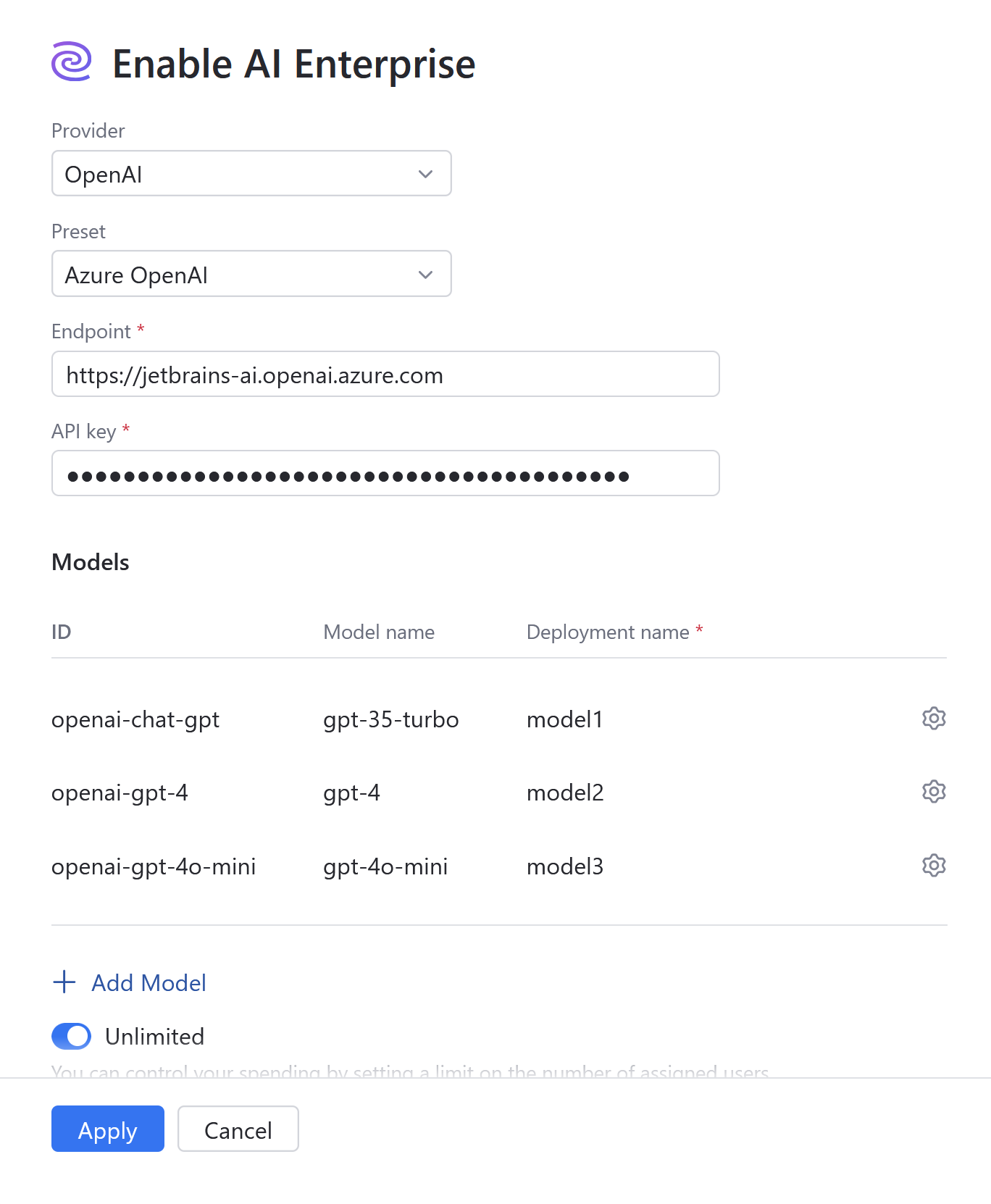

In the Enable AI Enterprise dialog, specify the following details:

Select the OpenAI provider.

Select Azure OpenAI from the Preset list.

Provide an endpoint for communicating with the Azure OpenAI service. For example, https://YOUR_RESOURCE_NAME.openai.azure.com.

Provide your API key to authenticate to the Azure OpenAI API.

Specify the deployment names of your models. Click the gear icon next to each model to enter its name.

(Optional) AI Enterprise uses the GPT-3.5-Turbo, GPT-4, and GPT-4o mini models for AI-powered features within JetBrains products. However, if you have the GPT-4o model available on your account, we recommend adding it to the list by clicking Add optional model.

Set the usage limit for AI Enterprise.

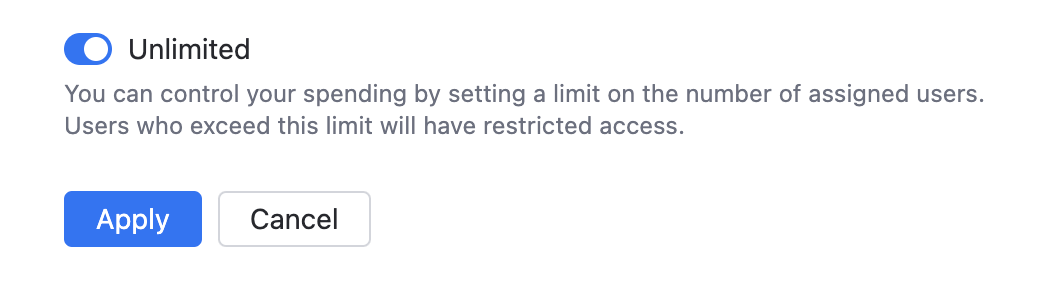

Enable the Unlimited option.

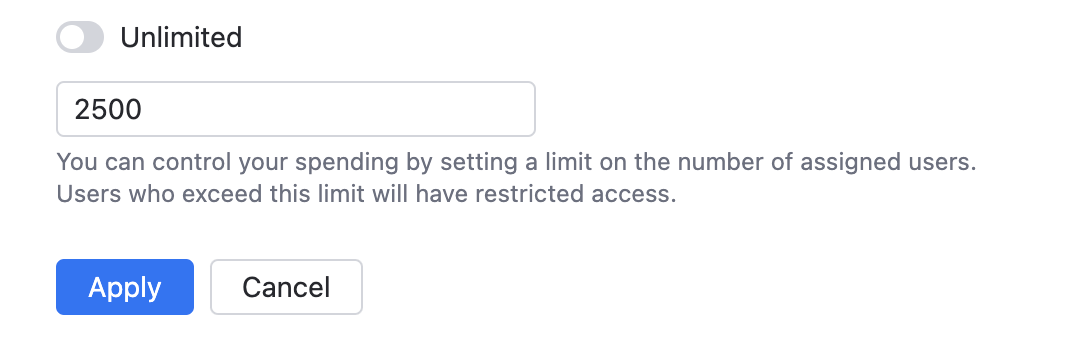

Disable the Unlimited option and specify the limit on the number of AI Enterprise users.

Click Apply.

Google Vertex AI

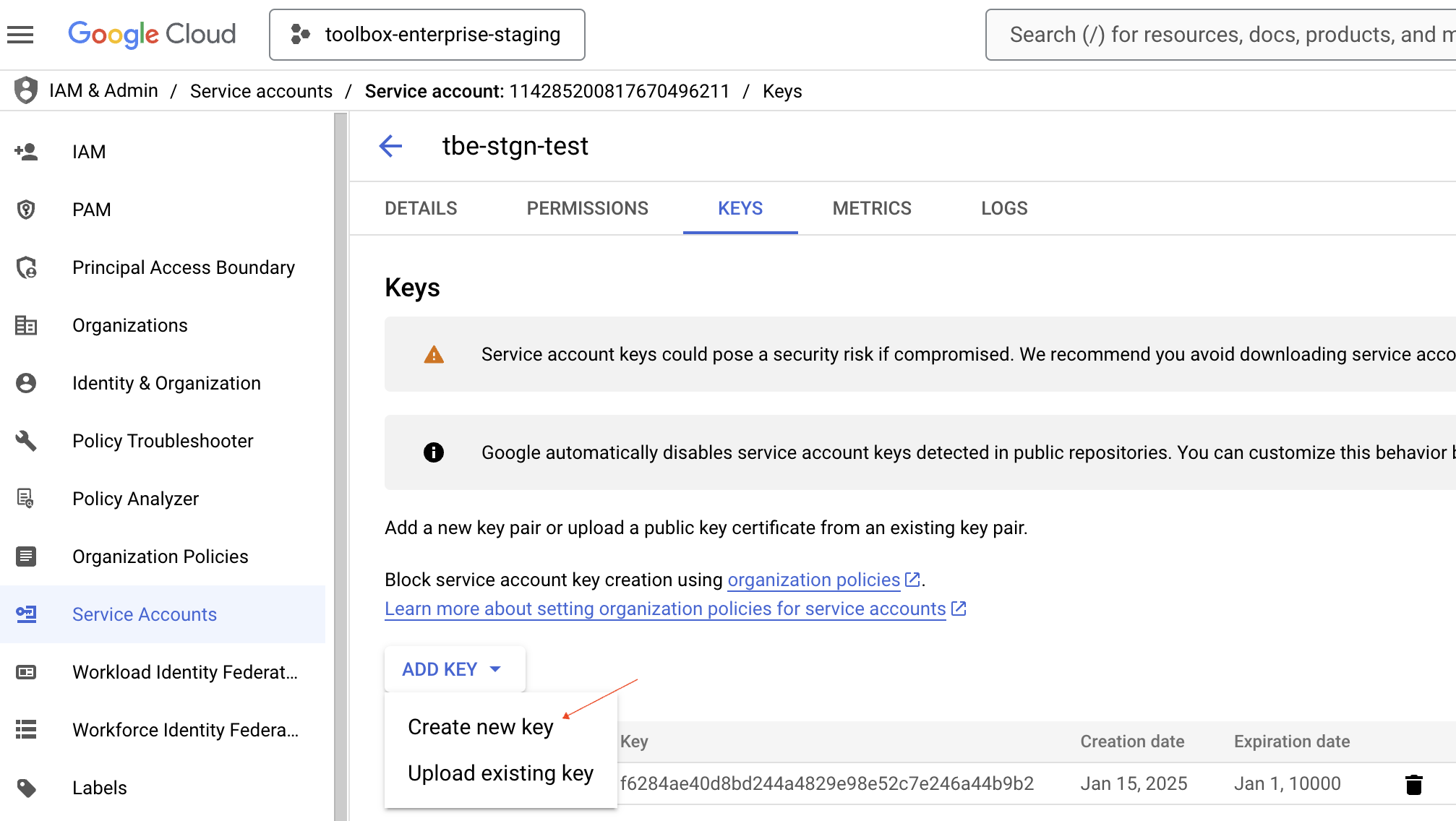

Before enabling Google Vertex AI as your provider, you need to complete the following steps:

Once you have completed the above preparation steps, you can enable Google Vertex AI in your IDE Services.

Enable AI provider: Google Vertex AI

In the AI Enterprise section, click Enable.

In the Enable AI Enterprise dialog, specify the following details:

Select the Google Vertex AI provider.

In the Project field, specify the name of the Google Cloud project.

In the Region field, specify the Google Vertex AI region.

In the Token field, specify the service account key in JSON format that you created earlier.

Set the usage limit for AI Enterprise in one of the following ways:

To allow any number of users, enable the Unlimited option.

To cap usage, disable the Unlimited option and specify the limit on the number of AI Enterprise users.

Click Apply.
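A malformed service account key is an easy mistake to make when pasting into the Token field. The sketch below is a local sanity check only, under the assumption that the key follows the usual Google Cloud service account JSON layout (`type`, `project_id`, `private_key_id`, `private_key`, `client_email`). It does not contact Google Cloud, and the function name is illustrative.

```python
import json

# Fields normally present in a Google Cloud service account key file.
REQUIRED_FIELDS = ("type", "project_id", "private_key_id", "private_key", "client_email")


def check_service_account_key(raw: str) -> list[str]:
    """Return a list of problems found in the key text; an empty list means none were found."""
    try:
        key = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(key, dict):
        return ["top-level JSON value must be an object"]

    problems = [f"missing field: {name}" for name in REQUIRED_FIELDS if not key.get(name)]
    key_type = key.get("type")
    if key_type and key_type != "service_account":
        problems.append(f"unexpected type: {key_type!r}")
    return problems


# Example: check a key file before pasting its contents into the Token field.
# with open("service-account.json", encoding="utf-8") as handle:
#     print(check_service_account_key(handle.read()) or "key looks well-formed")
```

The `project_id` value in the key is also a convenient cross-check against what you enter in the Project field.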

Amazon Bedrock

AI Enterprise integrates with Amazon Bedrock, a fully managed service that provides access to a variety of high-performing foundation models. In the current version, AI Enterprise supports the Claude 3.5 Sonnet V2, Claude 3.5 Haiku, and Claude 3.7 Sonnet LLMs for use in AI Assistant.

Before configuring Amazon Bedrock as an AI provider in IDE Services, you need to set up your access rights and get an access key.

Configure Amazon Bedrock on the AWS side

Follow the Getting Started instructions to:

Create an AWS account (if you don't already have one).

Create an AWS Identity and Access Management role with the necessary permissions for Amazon Bedrock.

Request access to the foundation models (FM) that you want to use.

Access AWS IAM Identity Center, find your user, and review the Permissions policies section.

In addition to the default permission policy AmazonBedrockReadOnly, add a new inline policy for the Bedrock service.

Configure the new inline policy to have the Read access level for the InvokeModel and InvokeModelWithResponseStream actions.

Generate an access key for your user.

When creating an access key, specify Third-party service as a use case.

The access key ID and secret are necessary for configuring Amazon Bedrock in IDE Services. Make sure to save these values.

Request access to the Claude 3.5 Sonnet V2, Claude 3.5 Haiku, and Claude 3.7 Sonnet models.
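If you prefer the JSON policy editor to the visual one, the inline policy described above might look like the document generated by this sketch. The `bedrock:InvokeModel` and `bedrock:InvokeModelWithResponseStream` action names come from the AWS IAM action list. The `Sid` value and the wildcard `Resource` are assumptions, and you may want to narrow the resource to specific model ARNs.

```python
import json


def bedrock_invoke_policy(resource: str = "*") -> dict:
    """Build an IAM policy document that allows invoking Bedrock models."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowBedrockInvoke",  # illustrative statement ID
                "Effect": "Allow",
                "Action": [
                    "bedrock:InvokeModel",
                    "bedrock:InvokeModelWithResponseStream",
                ],
                # Assumption: "*" allows all models; restrict as needed.
                "Resource": resource,
            }
        ],
    }


# Print the policy as JSON, ready to paste into the IAM policy editor.
print(json.dumps(bedrock_invoke_policy(), indent=2))
```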

Enable AI provider: Amazon Bedrock

In the AI Enterprise section, click Enable.

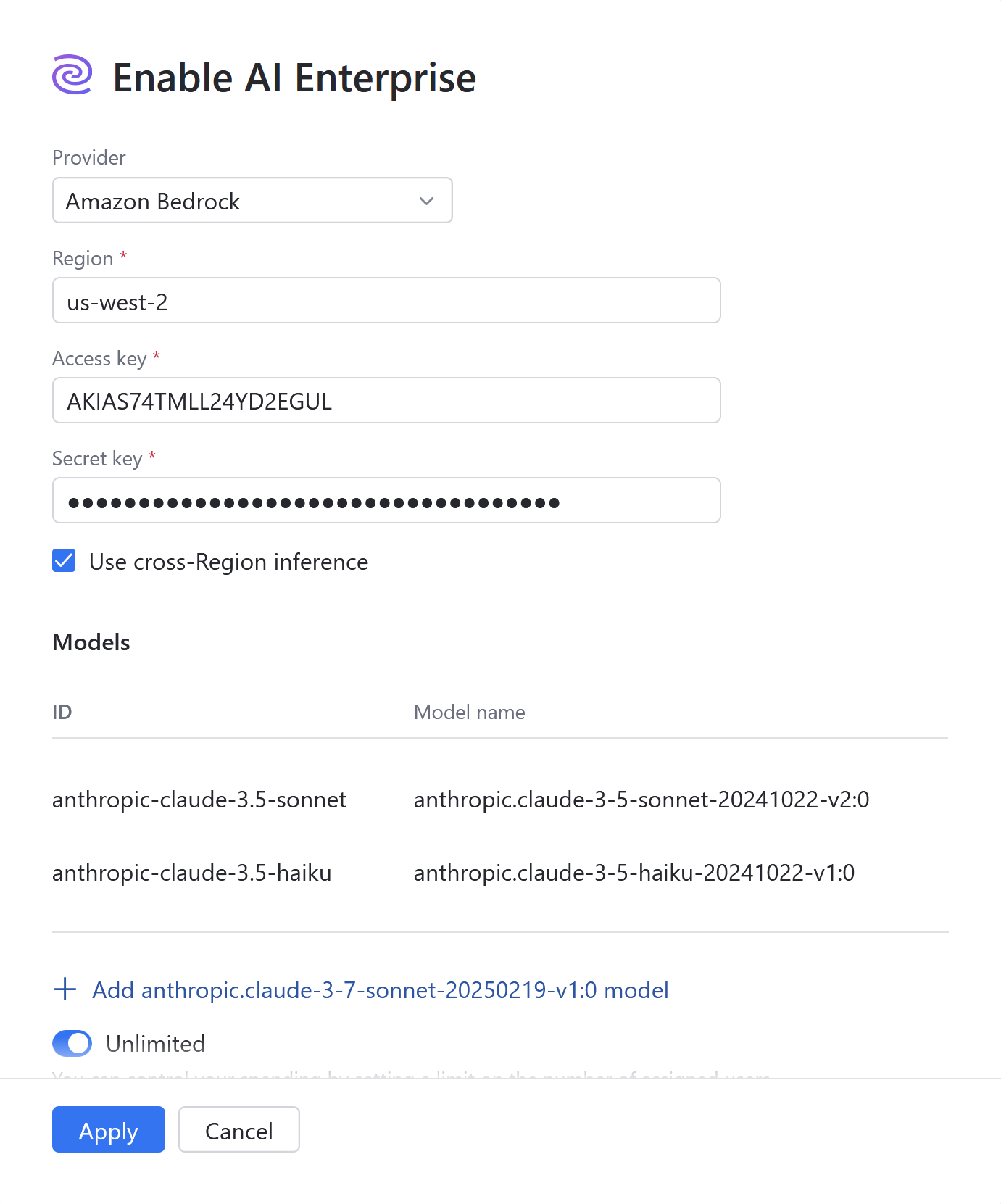

In the Enable AI Enterprise dialog, specify the following details:

Select the Amazon Bedrock provider.

In the Region field, specify the AWS region that supports Amazon Bedrock.

In the Access key field, specify the access key ID.

In the Secret key field, specify the access key secret.

Select Use cross-Region inference. This option automatically chooses the most suitable AWS Region within your geographic area to handle user requests, which optimizes resource utilization and keeps model availability high. It is required for the Claude 3.7 Sonnet model.

(Optional) Add the anthropic-claude-3.7-sonnet model if you are going to enable Junie.

Set the usage limit for AI Enterprise in one of the following ways:

To allow any number of users, enable the Unlimited option.

To cap usage, disable the Unlimited option and specify the limit on the number of AI Enterprise users.

Click Apply.

Hugging Face

AI Enterprise allows you to use on-premises models, such as Llama 3.1 Instruct 70B powered by Hugging Face, for air-gapped operations.

For deploying and serving Llama 3.1 Instruct 70B, use the instructions provided in the official Hugging Face documentation. Refer to this section to learn about model-specific requirements.

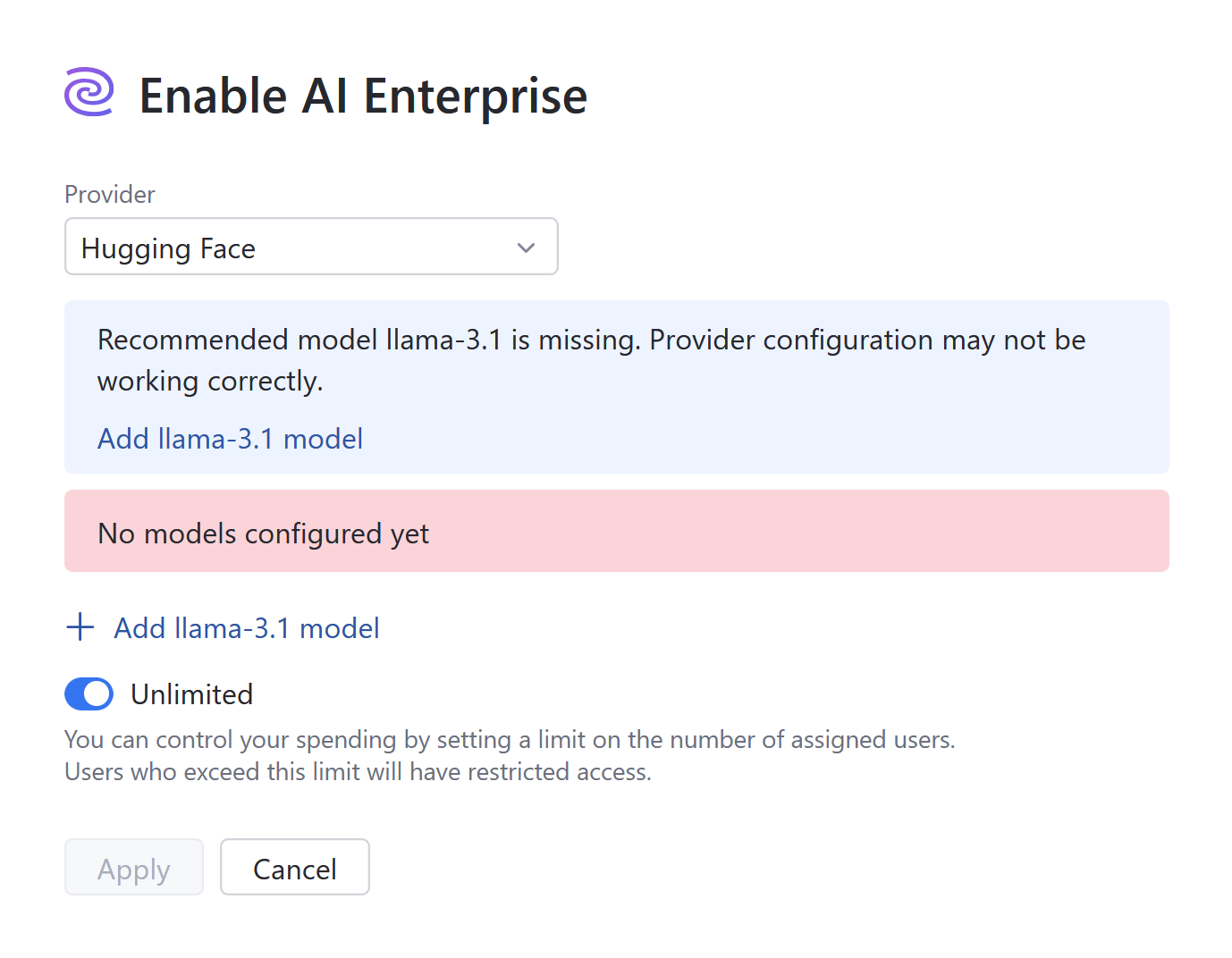

Enable AI provider: Hugging Face

In the AI Enterprise section, click Enable.

In the Enable AI Enterprise dialog, specify the following details:

Select the Hugging Face provider.

Click Add llama-3.1 model.

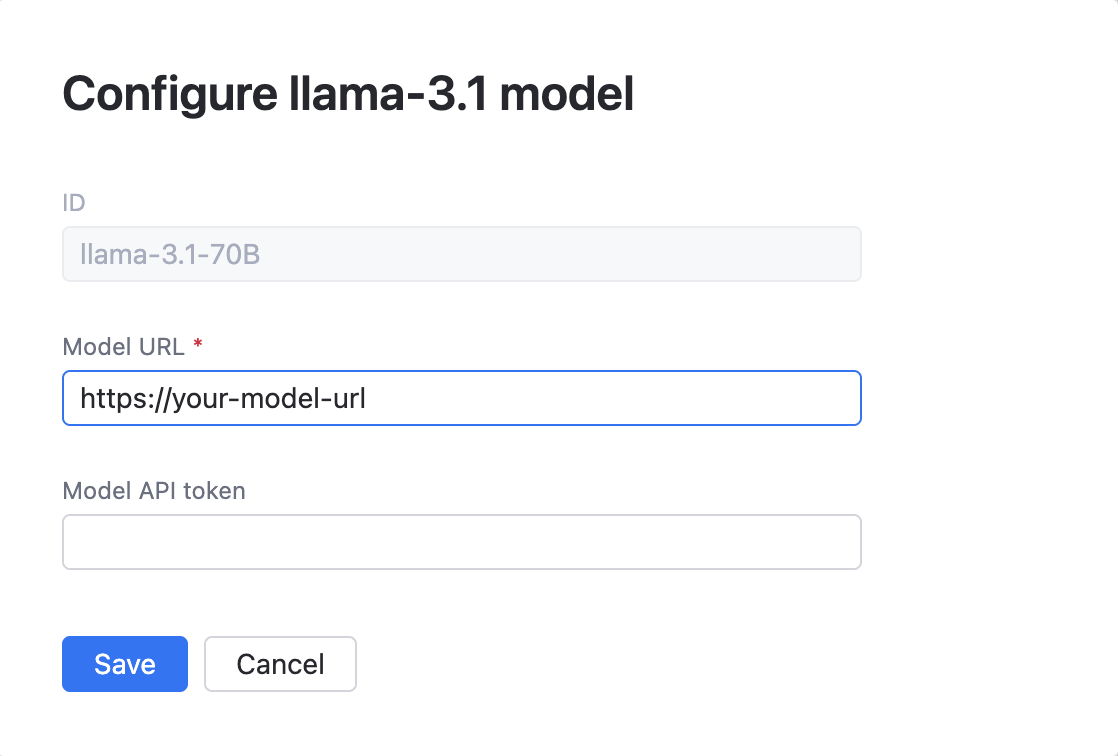

Specify the Model URL and Model API token in the Configure llama-3.1 Model dialog. Click Save.

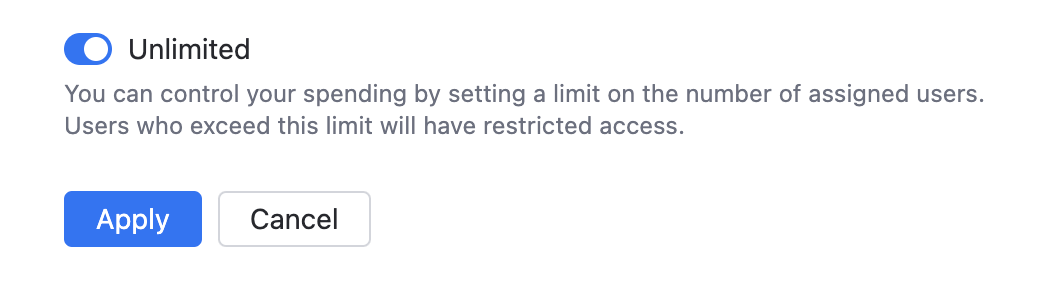

Set the usage limit for AI Enterprise.

Enable the Unlimited option.

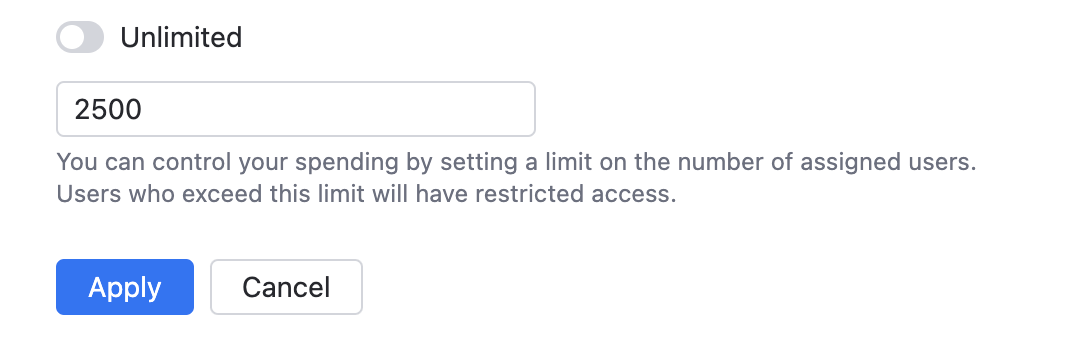

Disable the Unlimited option and specify the limit on the number of AI Enterprise users.

Click Apply.
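To confirm that the model server answers before or after registering it, you can send it a short prompt. This sketch rests on an assumption the page does not state: that the model is served with Hugging Face Text Generation Inference, whose `/generate` route accepts a JSON body with `inputs` and `parameters`. If your deployment exposes a different route, adjust the path. The function name and the example host are hypothetical.

```python
import json
import urllib.request


def build_generate_request(model_url: str, api_token: str,
                           prompt: str = "Hello") -> urllib.request.Request:
    """Build a short text-generation request for a TGI-style server."""
    url = model_url.rstrip("/") + "/generate"
    body = json.dumps({
        "inputs": prompt,
        "parameters": {"max_new_tokens": 8},  # keep the probe cheap
    }).encode("utf-8")
    headers = {"Content-Type": "application/json"}
    if api_token:
        # Send the token only when one is configured.
        headers["Authorization"] = f"Bearer {api_token}"
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


# Example call (performs a network request, so it is left commented out):
# req = build_generate_request("http://llm.example.internal:8080", "<API token>")
# with urllib.request.urlopen(req, timeout=60) as resp:
#     print(json.load(resp))
```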

Junie coding agent

JetBrains Junie is an AI-powered coding agent designed to work directly within supported JetBrains IntelliJ IDEA-based IDEs and is available as a plugin.

Unlike traditional code assistants, Junie can autonomously perform tasks such as generating code, running tests, fixing errors, and adapting to project-specific guidelines—all while keeping the developer in control. It understands project context, supports collaborative workflows, and aims to enhance both productivity and code quality.

Junie can be powered by large language models (LLMs) provided by the JetBrains AI service, or, as an alternative, it can use a specific set of models available through OpenAI and Amazon Bedrock.

Enable Junie

If you have the JetBrains AI service enabled, no further configuration of AI Enterprise settings is required. To make Junie available to developers, proceed to enable it in selected profiles.

If the JetBrains AI service is not enabled, select either Azure OpenAI or OpenAI Platform, together with Amazon Bedrock, as your providers and configure them as follows:

On your dashboard (home page), locate the AI Enterprise widget and click Settings.

Enable and configure Azure OpenAI or OpenAI Platform.

Add the following models to the configuration:

GPT-4o

GPT-4o-Mini

GPT-4.1

Enable and configure Amazon Bedrock.

Select Use cross-Region inference.

Add the anthropic.claude-3-7-sonnet model to the configuration.

To make Junie available to developers, proceed to enable it in selected profiles.
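The provider requirements for Junie listed above can be summarized as a small checklist. The sketch below is purely illustrative: the `config` dictionary and its keys are hypothetical and do not correspond to any IDE Services API. It only encodes the rules from this section so that you can reason about what is still missing.

```python
# Models this section asks you to add when JetBrains AI is not enabled.
REQUIRED_OPENAI_MODELS = {"GPT-4o", "GPT-4o-Mini", "GPT-4.1"}
REQUIRED_BEDROCK_MODELS = {"anthropic.claude-3-7-sonnet"}


def junie_gaps(config: dict) -> list[str]:
    """Return the configuration steps still missing before Junie can be enabled."""
    # With the JetBrains AI service enabled, no further setup is required.
    if config.get("jetbrains_ai_enabled"):
        return []

    gaps = []
    openai_models = set(config.get("openai_models", []))
    for model in sorted(REQUIRED_OPENAI_MODELS - openai_models):
        gaps.append(f"OpenAI provider: add model {model}")

    bedrock = config.get("bedrock", {})
    if not bedrock.get("cross_region_inference"):
        gaps.append("Amazon Bedrock: select Use cross-Region inference")
    for model in sorted(REQUIRED_BEDROCK_MODELS - set(bedrock.get("models", []))):
        gaps.append(f"Amazon Bedrock: add model {model}")
    return gaps


# Hypothetical example: OpenAI is fully configured, Bedrock is not set up yet.
example = {"openai_models": ["GPT-4o", "GPT-4o-Mini", "GPT-4.1"]}
for gap in junie_gaps(example):
    print(gap)
```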

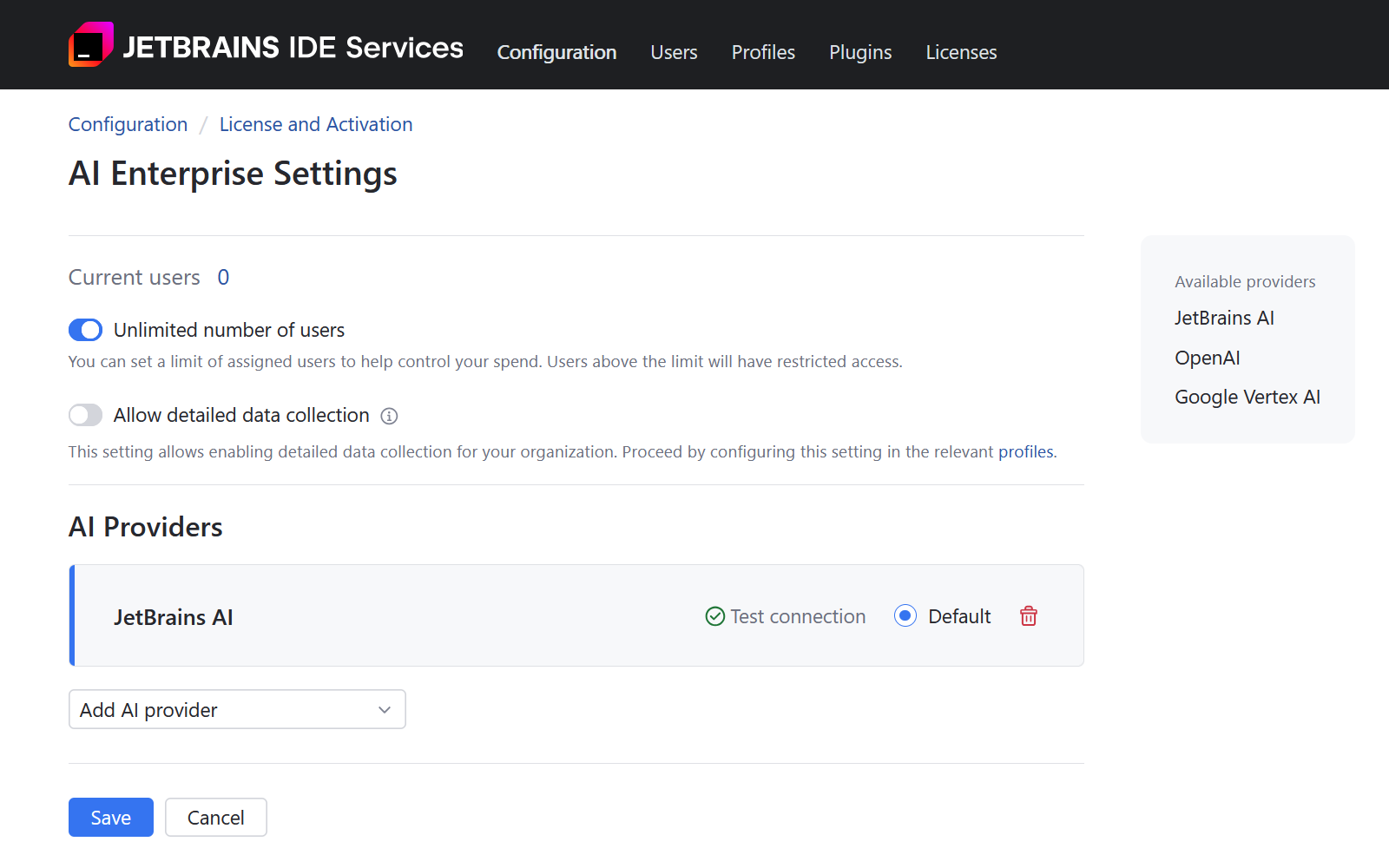

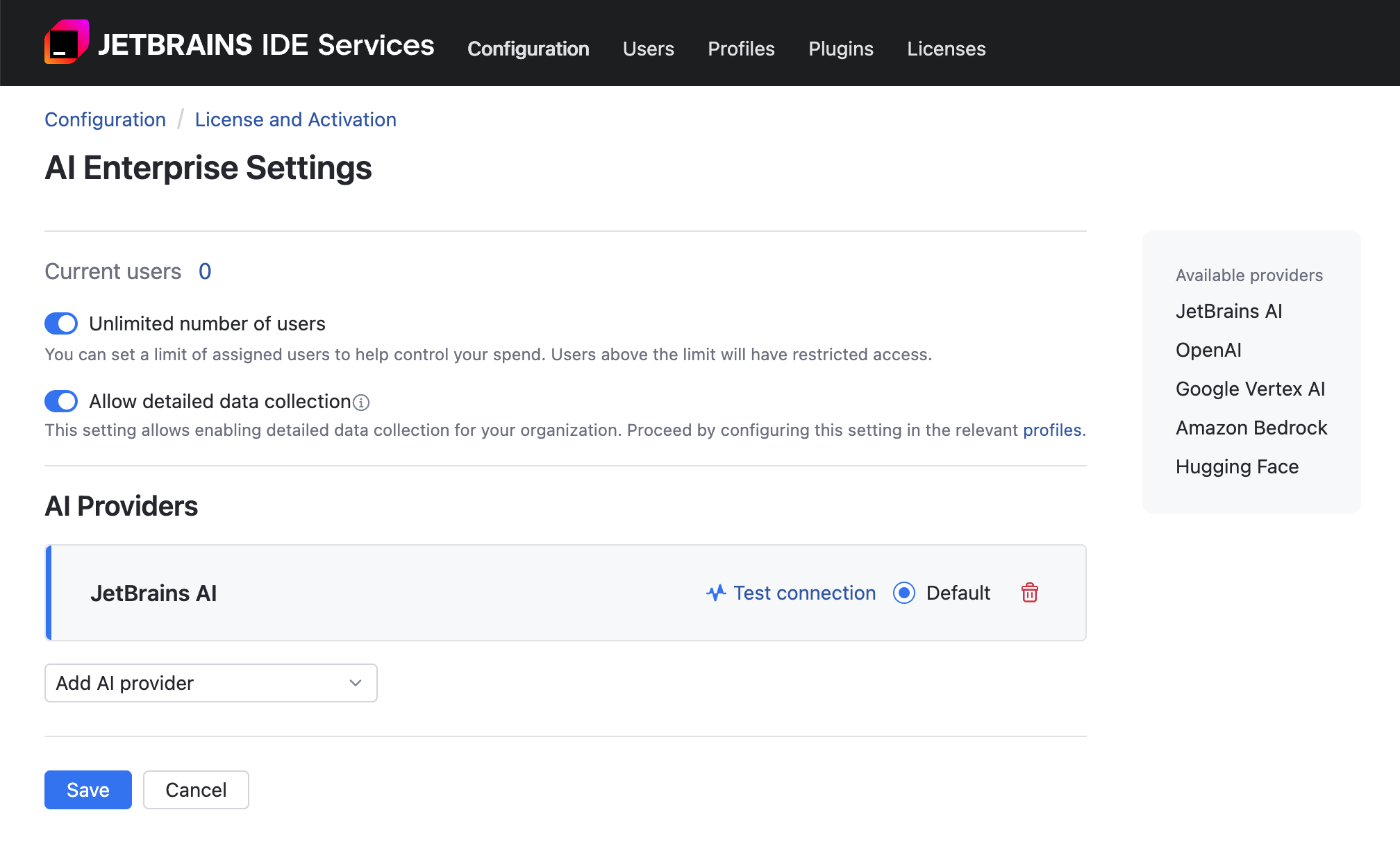

AI Enterprise Settings

Enable additional AI providers

When enabling AI Enterprise for your organization, you get to choose only one AI provider. To enable an additional provider:

Navigate to .

Scroll down to the AI Enterprise section and click Settings.

Click Add provider and choose one from the menu.

If you're adding a Google Vertex AI, OpenAI, or Amazon Bedrock provider, refer to the specific configuration instructions for further steps.

Test connection to AI provider

If AI models stop responding, test the connection. The problem could be related to authentication, such as an expired token, or configuration changes on the AI provider's end. A failed connection test returns an error with a description to help you identify and fix the issue.

You can also check the connection when adding an AI provider to make sure your configuration is correct and that the API key or token you entered is valid.

To test the connection:

Navigate to .

Scroll down to the AI Enterprise section and click Settings.

On the AI Enterprise Settings page, click Test connection next to the AI provider you want to check.

Set default AI providers

If you have more than one AI provider enabled for your organization, the providers you set as default will be preselected when you enable AI Enterprise in profiles. Setting defaults also lets you centrally switch providers for all profiles that currently have the Default provider option selected.

You can set multiple default providers, except when JetBrains AI is selected — in that case, it must be the only default provider.

To choose default providers:

Navigate to .

Scroll down to the AI Enterprise section and click Settings.

Select one or more of the listed AI providers as Default, then confirm and save your selection.
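The rule for combining default providers can be expressed as a short validation function. This is a sketch of the rule as stated in this section, not of how IDE Services implements it. The function name and the provider strings are illustrative.

```python
JETBRAINS_AI = "JetBrains AI"


def validate_defaults(defaults: list[str], enabled: list[str]) -> list[str]:
    """Return the reasons a selection of default providers is invalid (empty if valid)."""
    errors = []
    chosen = list(dict.fromkeys(defaults))  # drop duplicates, keep order

    # Only providers enabled for the organization can be set as default.
    not_enabled = [name for name in chosen if name not in enabled]
    if not_enabled:
        errors.append("not enabled: " + ", ".join(not_enabled))

    # JetBrains AI cannot be combined with any other default provider.
    if JETBRAINS_AI in chosen and len(chosen) > 1:
        errors.append("JetBrains AI must be the only default provider")
    return errors


enabled = ["JetBrains AI", "OpenAI", "Amazon Bedrock"]
print(validate_defaults(["OpenAI", "Amazon Bedrock"], enabled))  # valid combination
print(validate_defaults(["JetBrains AI", "OpenAI"], enabled))    # violates the rule
```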

Update the AI Enterprise usage limit

Navigate to .

Scroll down to the AI Enterprise section and click Settings.

On the AI Enterprise Settings page, configure the usage limit for AI Enterprise:

Enable the Unlimited number of users option to let all users with AI Enterprise enabled on the profile level gain access to the AI features.

Disable the Unlimited number of users option and specify the limit on the number of AI Enterprise users. Users above this limit will have restricted access to the product features.

Click Save.

Allow detailed data collection

Navigate to .

Scroll down to the AI Enterprise section and click Settings.

On the AI Enterprise Settings page, use the Allow detailed data collection option to enable or disable detailed data collection in your organization. When you enable this option, users will be asked to grant permission for data sharing. Collecting AI interaction data helps improve LLM performance.

Click Save.

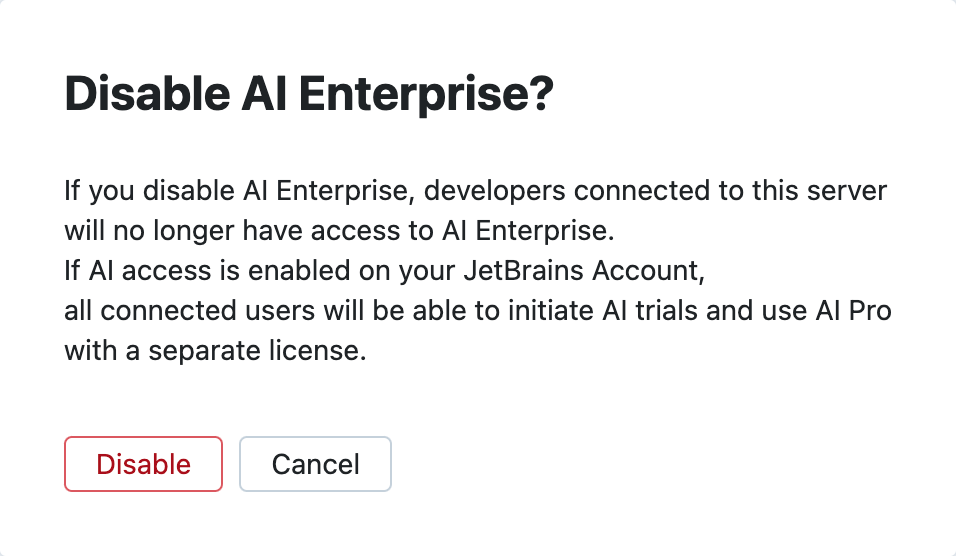

Disable AI Enterprise for your organization

In the Web UI, open the Configuration page and navigate to the License & Activation tab.

In the AI Enterprise section, click Disable.

In the Disable AI Enterprise? dialog, click Disable.