Tips for improving the performance of your generator

Accelerate Your MPS Generators and Increase Your Build Speeds by 400%

By Daniel Stieger (Modellwerkstatt) and Václav Pech (JetBrains)

Daniel Stieger is a partner at modellwerkstatt.org, an Austrian company that provides the Java low-code platform MoWare Werkbank. The platform focuses on the efficient development of business applications, that is, custom applications that support the specific business processes of a given company. The low-code platform comprises three tightly integrated domain-specific languages (DSLs) and is developed entirely within JetBrains MPS.

Introduction

In this article, we’ll tell you about the largest project we ever applied our DSLs to – a merchandise management system (MMS). Rather than merely giving a superficial overview, however, we’ll be delving deep into the details of JetBrains MPS. Specifically, we’ll explore our experience with the MPS code generator and how we were able to more than triple the code generation speed. We start 13 years ago, at the beginning of our MMS story, and give some practical tips for working efficiently with the JetBrains MPS code generator.

The task at hand

Thirteen years ago, we were hired by MPREIS, one of the largest food retailers in Austria, to develop a merchandise management system (MMS) for their close to 300 branches. The primary task of such a system is to track all goods movements, including incoming and outgoing, as well as write-offs (spoilage, theft, etc.). The MMS can then be used to keep a merchandise inventory that takes into account both monetary value and quantity. Furthermore, business processes such as manual procurement (i.e., the ordering of goods) must be fully supported. As this project was an in-house development, MPREIS wanted to pay special attention to its own specific requirements. Going forward, they wanted to be able to react quickly and dynamically to changes in business processes. Additionally, they wanted the system to enable experimentation with innovative store management concepts. The in-house development should strengthen branch-based retailing – for at least the next 30 years. It should ensure a high degree of flexibility, allowing process changes to be made quickly and efficiently.

Management called for a new and innovative approach to developing the strategically important MMS. An orientation towards modern (Java) open-source technologies was desired. Additionally, we suggested working with DSLs to elevate the development of the MMS to a more abstract level. A higher level of abstraction should not only increase productivity but also shift developers' attention more toward the business domain itself, the relevant data, the inherent constraints, and the end users. Ideally, though, the developers should not be distracted by technical necessities and the underlying technical base platform in the first place.

When we started the project in 2010, we identified JetBrains MPS as the leading tool for implementing the DSLs and the necessary code generators. Compared to other tools, we were particularly convinced by the projectional editor and the possibilities it offers, especially working with several DSLs at the same time. This enables you to design multiple smaller DSLs for more specific tasks and still allows for the DSLs to be tightly integrated. Out of the box, MPS ships with a full implementation of the Java language as a reusable DSL! Back then, this was probably of the greatest importance to us.

A short video introducing the projectional editor is also available.

With jetbrains.mps.baseLanguage, the complete Java language is implemented as an extensible DSL. That means you can simply extend that language or reuse specific concepts in your own DSLs. Typical candidates are general expressions or the type system, which you would otherwise have to build yourself, a quite complex task. For us, Java expressions and basic control structures such as “if” and “for” statements were particularly interesting. Looking back, the decision to make our DSLs Java-flavored really helped to lower the entry barrier for other developers. After all, Java is a widespread and well-known language.

Notably, by then JetBrains MPS had already extended its own Java DSL with several other DSLs. In 2010, we were particularly impressed by the functional collections language for Java (jetbrains.mps.baseLanguage.collections). This DSL allows you to write operations on collections in a purely functional style, similar to Java Streams, which did not exist at that time. In fact, we even found the DSL syntax more concise than Streams. Comprehensive closure support (jetbrains.mps.baseLanguage.closures) was also readily available back then.

MMS projects, DSLs, and the status quo

We developed the entire MMS and its components exclusively in the JetBrains MPS IDE. We conceived and implemented three DSLs in parallel. The three DSLs used in the MMS project are:

| DSL | Purpose |
|---|---|
| org.modellwerkstatt.manmap | Manmap is an object-relational mapper with a code generator for SQL databases, allowing users to retrieve and store entities easily. We chose to focus on manmap because of its transparent and “easy to understand” behavior. |
| org.modellwerkstatt.objectflow | Objectflow is a DSL for modeling domain models, for example, with aggregates, entities, and value objects (following tactical domain-driven design). In addition to services, it provides the concept of a command, simplifying the description of end-user interaction. |
| org.modellwerkstatt.dataUx | DataUx is used for technology-independent modeling of end-user UIs, including the well-known master-detail pattern and the menu structures from which commands originate. DataUx ensures that the whole UI technology can be easily replaced years later. |

All three DSLs are based on the JetBrains MPS Java DSL (BaseLanguage), from which we also adopted the type system and the basic semantics. With this article, we make a strong case for reusing DSLs. Extending the Java BaseLanguage significantly accelerated the development of our own DSLs. We make intensive use of JetBrains' collections language to formulate quantity-based business logic with a nice, functional syntax. To date, we haven’t noticed any shortcomings. On the contrary, we perceive JetBrains MPS as a stable and mature language workbench, even in 2023.

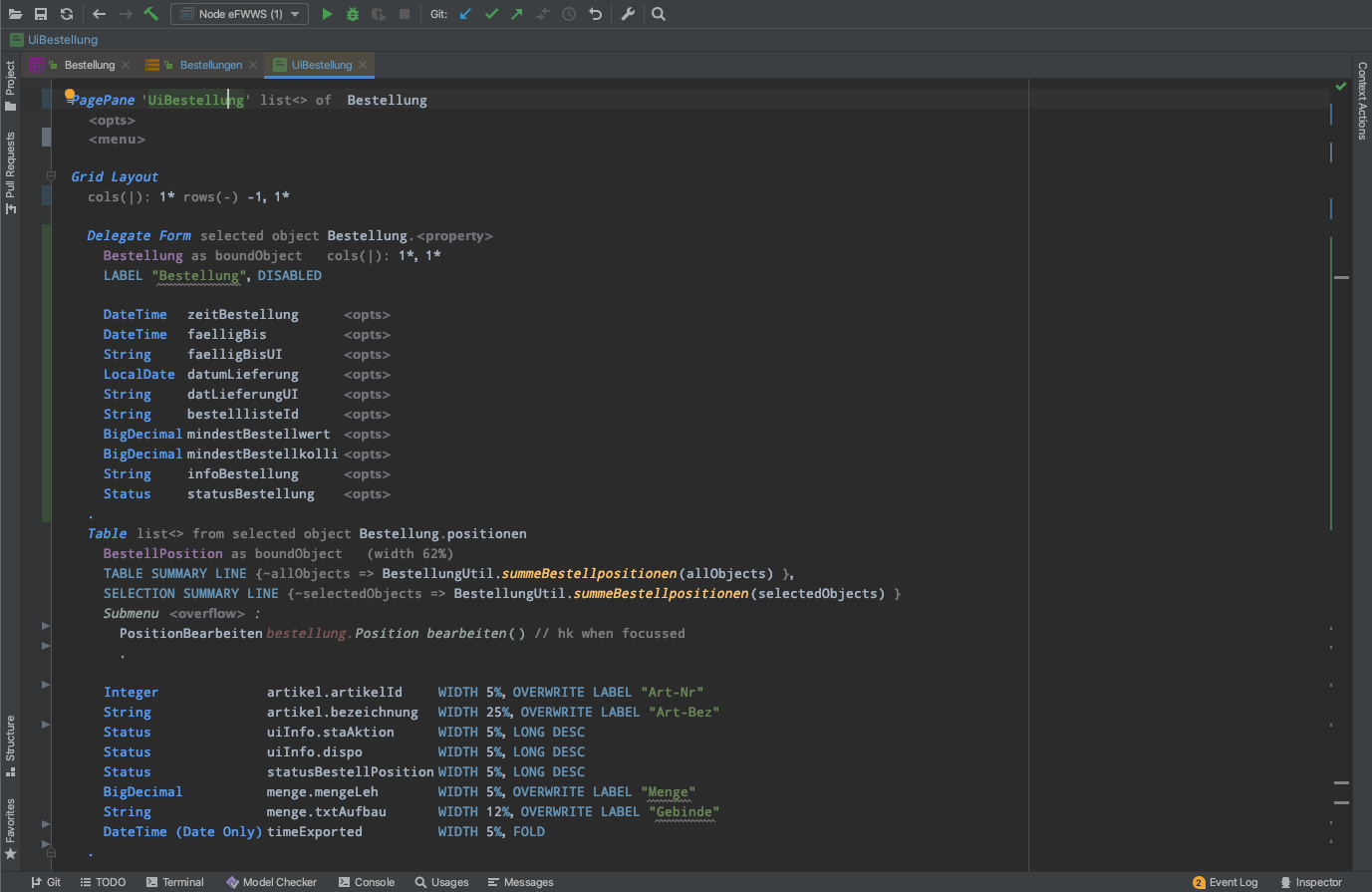

Figure 1 gives you an impression of the DataUx DSL, which is used to model the necessary UIs in a technology-independent manner. In a grid layout, seven fields of an order (Bestellung in German) are displayed in two columns, laid out in a form (disabled). The form is in the first row of the grid with a minimum height (-1). A second row shows a table with a maximum height (1*). A more detailed, but older, article on UI modeling with our DataUx DSL can be found on DZone.

As of the summer of 2023, the MMS consists of 374 UI pages similar to the one shown above. The necessary UI controllers are also specified via DSL and contribute another 488 so-called root concept instances. The domain models of the MMS include 128 entities and 87 value objects, which are used by 245 services and 169 repositories. From these higher-level concepts, our code generators produced 2,886 Java files with a total of 1,130,000 lines of productive code (i.e., excluding tests and related code). The system runs very reliably on about 550 Android devices with barcode scanners and almost 500 desktop computers.

So far, everything sounds good! However, from a developer’s point of view, working on the MMS and launching it directly within MPS has gotten slower and slower over the years. Regenerating the executable code for the MMS used to take approximately 3–4 minutes on a standard computer. Initially, the number of models was small, and the generation and startup time of the application were very fast. After 13 years of development, the project has accumulated 139 MPS models across multiple solutions. The developers have now started reading newspapers while regenerating the application. Therefore, one of our main aims for summer 2023 is to reduce the generation time by a significant amount.

Optimizing the code generators

Our DSL stack encompasses multiple code generators. Each of our DSLs comes with its own code generator, and the JetBrains BaseLanguage, collections, and closures languages provide one each. Adding the baseLanguageInternal generator brings the total to seven different code generators. Since we did not pay any special attention to generation speed when designing the DSLs, we expected to find some anti-patterns and various MPS generator peculiarities. In the back of our minds, we suspected that we might be breaking some of the generator’s recommended practices or structuring the generator rules and templates in a sub-optimal way.

Even before analyzing our generators and the general setup, we were somewhat astonished by the number of lines we generated. Overall, the MMS consists of 2,192 root concepts, which translate to 2,885 Java files totaling more than one million lines of code. These are genuinely big numbers, so it’s no wonder the generation takes so much time.

Measure 1: Develop a clever runtime and generate code that leverages that runtime

In retrospect, we followed an obvious pattern when initially designing the code generators: basically, all code went directly into the generator templates, whether this was necessary or not. The following simple example illustrates our former approach.

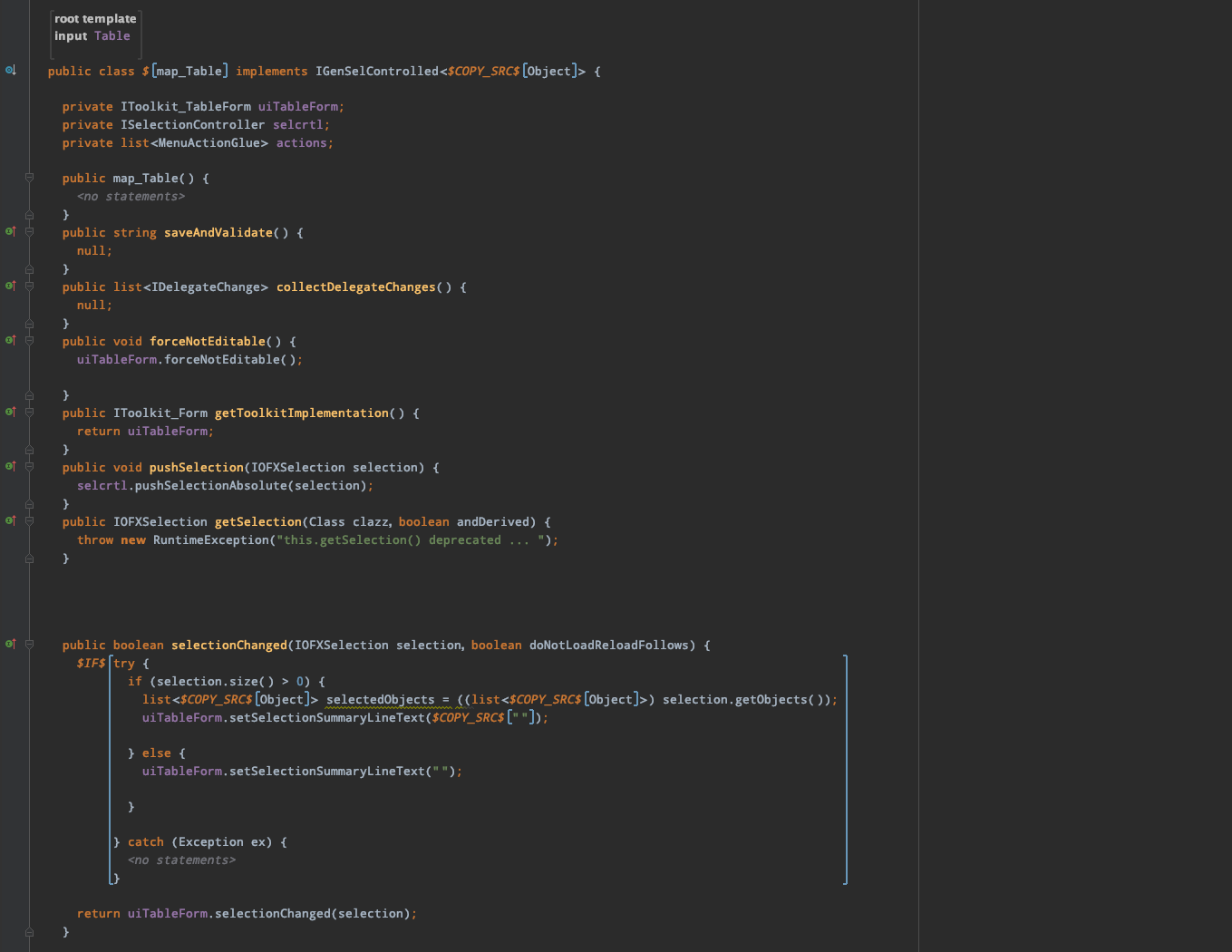

The Java class in the generator template of Figure 2 implements the IGenSelControlled interface directly. Boilerplate methods like saveAndValidate() or collectDelegateChanges() sit directly in the template, although their implementation does not depend on the input model in any way; it is always the same. As Figure 2 also shows, there are several methods of this kind, not just one.

Figure 3 shows a refactored version of that generator template. The Java class now extends a Table class, and all the static code mentioned above is implemented in that superclass. Behavior that depends on the input model is expressed as Boolean properties, as the constructor declaration in Figure 3 shows: three Boolean switches with property macros applied configure the behavior of the Table class. The former method selectionChanged() illustrates how a more complex generator fragment was refactored. While the static parts of the code moved to the runtime Table class, the dynamic parts moved into calcSelectionSummeryLineText(). This method takes a parameter, returns a value, and is very compact: an expression calculating some domain logic is passed forward from the input model into the generated class. The Table class now calls that method, reproducing the behavior from Figure 2.
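The same pattern can be sketched in plain Java. This is a minimal sketch and not our actual runtime. The three switches (editable, multiSelect, showSummaryLine), the Order class, and all method bodies are hypothetical. Only the names Table, saveAndValidate(), selectionChanged(), and calcSelectionSummeryLineText() are taken from the figures described above.

```java
import java.math.BigDecimal;
import java.util.ArrayList;
import java.util.List;

// Hypothetical runtime base class: boilerplate that used to be copied into every
// generated class now lives here exactly once and is compiled once.
abstract class Table<T> {
    // Model-dependent behavior reduced to Boolean switches; in the generated
    // subclass, their values are filled in by property macros.
    private final boolean editable;
    private final boolean multiSelect;
    private final boolean showSummaryLine;

    private final List<T> selection = new ArrayList<>();
    private String summaryLine = "";

    protected Table(boolean editable, boolean multiSelect, boolean showSummaryLine) {
        this.editable = editable;
        this.multiSelect = multiSelect;
        this.showSummaryLine = showSummaryLine;
    }

    // Formerly generated verbatim; placeholder body: only editable tables save.
    public boolean saveAndValidate() {
        return editable;
    }

    // Static part of the former selectionChanged() template fragment.
    public void selectionChanged(List<T> newSelection) {
        selection.clear();
        if (multiSelect) {
            selection.addAll(newSelection);
        } else if (!newSelection.isEmpty()) {
            selection.add(newSelection.get(0));
        }
        summaryLine = showSummaryLine ? calcSelectionSummeryLineText(selection) : "";
    }

    public String getSummaryLine() {
        return summaryLine;
    }

    // Dynamic part: the only piece the generator still has to emit per class.
    protected abstract String calcSelectionSummeryLineText(List<T> selected);
}

// Hypothetical domain class.
final class Order {
    final String id;
    final BigDecimal amount;

    Order(String id, BigDecimal amount) {
        this.id = id;
        this.amount = amount;
    }
}

// What a generated class might look like after the refactoring.
final class OrderTable extends Table<Order> {
    OrderTable() {
        super(false, true, true); // switch values injected via property macros
    }

    @Override
    protected String calcSelectionSummeryLineText(List<Order> selected) {
        // Domain expression passed forward from the input model.
        BigDecimal total = BigDecimal.ZERO;
        for (Order o : selected) {
            total = total.add(o.amount);
        }
        return selected.size() + " orders, total " + total;
    }
}
```

In this setup, the generator emits only the small OrderTable subclass. Everything in Table is part of the runtime library, so it is neither regenerated nor recompiled with every model change.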

Overall, similar refactorings in our own code generators reduced the number of generated lines from over one million to 484,000. By moving static code from the generator templates into a runtime, we roughly halved the amount of code to be generated and compiled. This cut generation and compile time by approximately two minutes on our developer machines, a very significant improvement. We therefore strongly recommend building a powerful runtime for the generated code to rely on.

Measure 2: Do not reference concepts by name

MPS has supported model generation performance reports for some time now. This report type gives generator designers performance insights on the various generation steps employed per model. The report is sorted in such a way that it lists the generator steps consuming the most time first. One can easily identify time spent on generator steps and the activities within a single step. It also shows the time spent on weaving rules, reduction rules, and delta changes. Of particular value to us was the time spent restoring references.

Restoring references is the process of looking up the actual target concept when reference macros provide only target names. MPS reference macros can return either target concepts (node<> or node-ptr<>) or simply names (strings). When working with plain names, MPS takes over the work of locating the referenced targets in the output model. However, this additional work can take some time, and it is exactly this time that is reported as “restoring references”.
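The difference can be illustrated with a toy model. This is not the MPS API, and it greatly simplifies how MPS resolves names; all class and method names are hypothetical. It only shows that a name returned by a reference macro must be searched for later, whereas a node returned directly needs no lookup at all.

```java
import java.util.ArrayList;
import java.util.List;

// Toy output node (hypothetical, not an MPS type).
final class OutNode {
    final String name;

    OutNode(String name) {
        this.name = name;
    }
}

// Toy output model that counts the work spent on "restoring references".
final class OutputModel {
    final List<OutNode> roots = new ArrayList<>();
    int comparisons = 0;

    OutNode add(String name) {
        OutNode n = new OutNode(name);
        roots.add(n);
        return n;
    }

    // The reference macro returned only a string, so the target has to be
    // searched for in the output model afterwards.
    OutNode restoreReference(String targetName) {
        for (OutNode n : roots) {
            comparisons++;
            if (n.name.equals(targetName)) {
                return n;
            }
        }
        return null; // unresolved reference
    }
}
```

If the reference macro had returned the OutNode itself, no search would be needed. Each individual lookup is cheap, but the cost grows with the number of references, models, and generation steps.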

In the context of our MMS project, the time spent on restoring references did not seem significant at first glance. However, one or two seconds of restoring references per model accumulate when you’re working with 140 models and multiple generation steps. We therefore addressed how our generators reference target concepts in another refactoring. There are multiple ways of doing this.

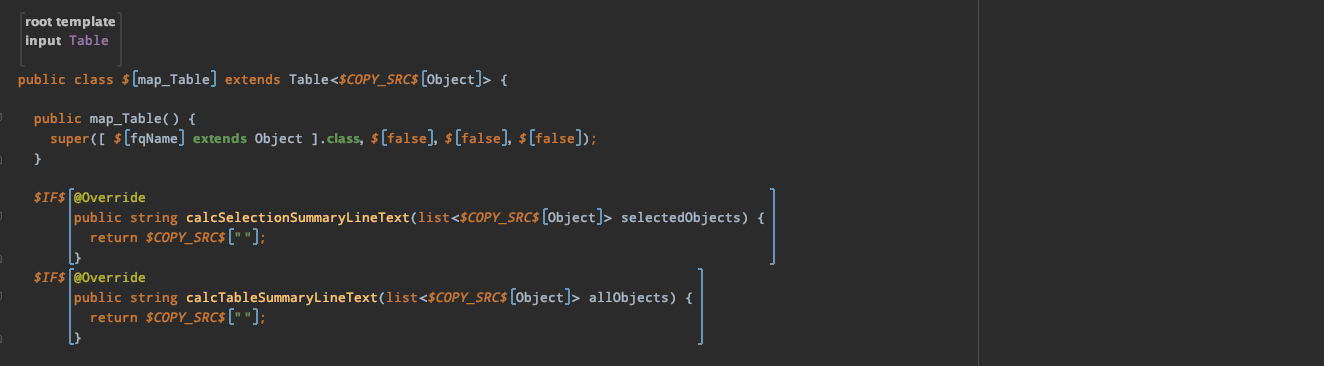

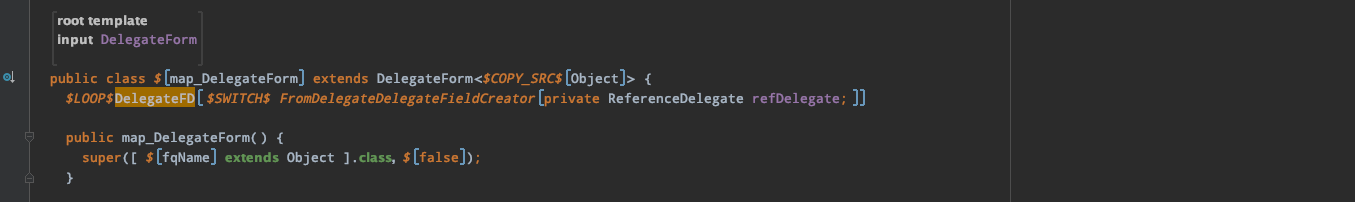

The primary measure against referencing by name is actively using generator mapping labels. As shown in Figure 4, the mapping label “DelegateFD” is attached to the loop macro, labeling each class field generated in the class. Labeling creates a relationship between an input concept instance and a generated concept instance. Later, the generator designer can look up generated concepts by input concept via those labels. This is typically what reference macros are for: based on a specific input concept instance, we want to reference a related generated target in the output model. In the example illustrated in Figure 4, the generator designer might look up one of those generated class fields.
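Conceptually, a mapping label works like a lookup table keyed by label and input node. The toy sketch below models that idea. MappingLabels, register(), and lookup() are hypothetical names and not part of the MPS generator API. In MPS, the corresponding lookup is written inside a reference macro.

```java
import java.util.HashMap;
import java.util.IdentityHashMap;
import java.util.Map;

// Toy model (not the MPS API): remembers which output node was generated for
// which input node under a given label.
final class MappingLabels<I, O> {
    // label -> (input node -> generated output node); input nodes are compared
    // by identity, as two distinct nodes may look alike.
    private final Map<String, Map<I, O>> byLabel = new HashMap<>();

    // Called when a labeled template fragment (e.g., inside a loop macro) is applied.
    void register(String label, I input, O output) {
        byLabel.computeIfAbsent(label, k -> new IdentityHashMap<>()).put(input, output);
    }

    // Called from a reference macro: returns the target node directly, so no
    // name has to be restored later.
    O lookup(String label, I input) {
        Map<I, O> m = byLabel.get(label);
        return m == null ? null : m.get(input);
    }
}
```

With this design, a reference macro can return the generated field itself. The generator no longer has to search the output model for it by name.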

Trying out mapping labels is a straightforward process. All you have to do is label some generated code and use those labels in reference macros – it’s as simple as that. As long as labeled generated code resides in the same output model as the code with reference macros applied, mapping labels do not add any additional burden. Things get a bit more complicated when referencing instances across models. By default, labels are not accessible across different input and output models. In the case of our generators, for example, such a situation quickly arises. In model A, a “service component” S is declared, whereby that service component S is also used and accessed from model B. The generated code in model B has to reference the generated code in model A. There are two solutions to this scenario.

Restoring references becomes obsolete when directly generating text instead of doing model-to-model transformations. This is what we have resorted to when referencing across models in our generators. In the superclass constructor call in Figure 4, a concept from the JetBrains BaseLanguageInternal language is used to determine the class instance of a Java class at runtime. The concept is different from the standard Java .class expression. It accepts a fully qualified name as text and a classifier to determine the preliminary type of the concept in the output model. The corresponding generator of that concept – a JetBrains TextGen – translates straight to Java text by appending a .class to the given name. As can be seen in Figure 4, a property macro is used instead of a reference macro. Thus, restoring references becomes obsolete when working with this BaseLanguageInternal concept.
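The core of this text-based approach fits into a few lines. In the sketch below, InternalClassRefByName is a hypothetical stand-in for the BaseLanguageInternal concept; its real name and the classifier it carries are omitted. The package name in the usage is invented. What matters is that the target is only a string set by a property macro, so nothing remains to be restored.

```java
// Hypothetical stand-in for the BaseLanguageInternal concept: the target class
// is held only as a fully qualified name, set by a property macro.
final class InternalClassRefByName {
    final String fqName;

    InternalClassRefByName(String fqName) {
        this.fqName = fqName;
    }

    // Mimics the TextGen step: emit the name followed by ".class" as plain text.
    String toText() {
        return fqName + ".class";
    }
}

// Hypothetical TextGen output for a superclass constructor call.
final class SuperCallTextGen {
    static String superCall(InternalClassRefByName ref) {
        return "super(" + ref.toText() + ");";
    }
}
```

The trade-off is that a misspelled name is not caught during generation. It surfaces only when the generated Java code is compiled.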

There are more baseLanguageInternal concepts of this kind that translate directly to text with TextGen. We relied on some of them in our generators wherever cross-model references are needed. This saved us approximately another 45 seconds in the generation process for the entire MMS. While the BaseLanguageInternal concepts are, as the name suggests, somewhat “internal”, we have not experienced any significant changes to them that would have demanded generator refactorings. In our situation, generating text directly is a viable solution to the cross-model reference scenario. Be aware, however, that no further transformations can be applied once text has been generated.

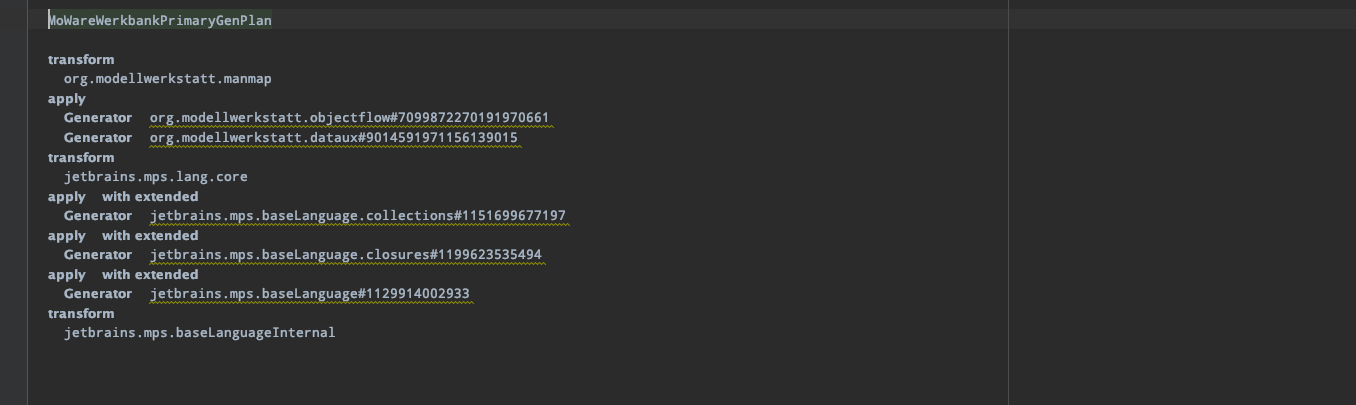

There is also a newer, recommended approach to handling cross-model references: a generation plan in combination with checkpoints. Generation plans allow developers to explicitly specify the desired order of generation for their models and thus gain better control over the generation process. Generation plans list all the languages that should be included in the generation process, order them appropriately, and optionally specify checkpoints at which the generator preserves the current transient models. These models can then be used for automatic cross-model reference resolution further down the generation process. Although we had specified “generators’ priorities” for our generators in the traditional way, we additionally gave the generation plan a try. Setting up a generation plan is indeed quite easy: one specifies all the priorities by listing the generators from top to bottom. The complete plan for our language stack and its generators is shown in Figure 5.

As can also be seen, we did not specify any checkpoints in the plan, since we rely on the BaseLanguageInternal approach for cross-model references. However, we created a simple language for demonstration purposes that resembles the situation where a “service component” S is declared in model A and is also used and accessed from model B. Quite simply, the generator transforms the “service component” into a Java class. Thus, the generated code from model B has to reference that very class in the generated code of model A. With a checkpoint added in the generation plan (i.e., simply adding the “checkpoint” keyword after our demo language’s “apply generator” command), everything is already done. MPS automatically takes the checkpoint models into account when looking up generated concepts by label and input. The mechanism is exactly the same as with intra-model reference resolution, so the generator designer has nothing additional to consider. In fact, setting up a generation plan with checkpoints in our demo language turned out to be no hassle at all, and cross-model referencing with labels worked out of the box.
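A toy model of the demo scenario helps make the checkpoint mechanism more concrete. It does not reflect MPS internals; CheckpointStore, PlanDemo, and the string keys are all hypothetical. It only captures the idea that label mappings recorded for model A are preserved at a checkpoint and can then be consulted while generating model B.

```java
import java.util.HashMap;
import java.util.Map;

// Toy model (not MPS internals): preserves the label mappings of each model
// at a checkpoint so that later models can resolve references into it.
final class CheckpointStore {
    // model name -> ("label:inputKey" -> generated class name)
    private final Map<String, Map<String, String>> saved = new HashMap<>();

    void checkpoint(String model, Map<String, String> labelMappings) {
        saved.put(model, new HashMap<>(labelMappings));
    }

    String resolve(String model, String label, String inputKey) {
        Map<String, String> m = saved.get(model);
        return m == null ? null : m.get(label + ":" + inputKey);
    }
}

// Generating model B, which uses service component S declared in model A.
final class PlanDemo {
    static String generateModelB(CheckpointStore store) {
        String target = store.resolve("A", "ServiceClass", "S");
        if (target == null) {
            throw new IllegalStateException("no checkpoint for model A");
        }
        return "private final " + target + " service = new " + target + "();";
    }
}
```

Without the checkpoint, the lookup for model A fails. Once model A's mappings are preserved, the same label-based lookup used within a single model also works across models.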

Measure 3: Be careful with '.type' calculations in the generator

The jetbrains.mps.lang.typesystem language provides a .type operation to determine the type of an arbitrary node in the generator. This is extremely useful when handling expressions since various decisions are typically made on the basis of the expression type. For example, methods applicable to an expression depend on the type of that expression and not on whether the outermost concept of that expression is a variable reference or another method call.

It is not unusual for a generator to perform different transformations based on the type of a given node. However, type calculations with .type are rather CPU-intensive operations. We have taken some measurements on our developer machines: as a rough indicator, single .type calculations took 2–30 milliseconds. This does not appear particularly concerning at first, but considering that the whole MMS consists of 400,000 nodes, the time spent on .type calculations in a generator can add up when they are not applied carefully.
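A back-of-the-envelope calculation shows why. Assume, purely for illustration, that every one of the 400,000 nodes were typed exactly once. At the measured 2–30 ms per .type call, the total would be:

$$
\begin{aligned}
t_{\min} &= 400\,000 \times 2\ \text{ms} = 800\ \text{s} \approx 13\ \text{min} \\
t_{\max} &= 400\,000 \times 30\ \text{ms} = 12\,000\ \text{s} \approx 3.3\ \text{h}
\end{aligned}
$$

Real generators type only a fraction of the nodes. Repeated calls on the same expressions, however, push the total in the opposite direction.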

The time spent on .type calculations in a generator is also reported in the aforementioned model generation performance report when “time spent on type calculations” is selected in the settings. Unfortunately, in this case, the generator designer is overwhelmed with performance information, and checking all those performance hints is quite a cumbersome process.

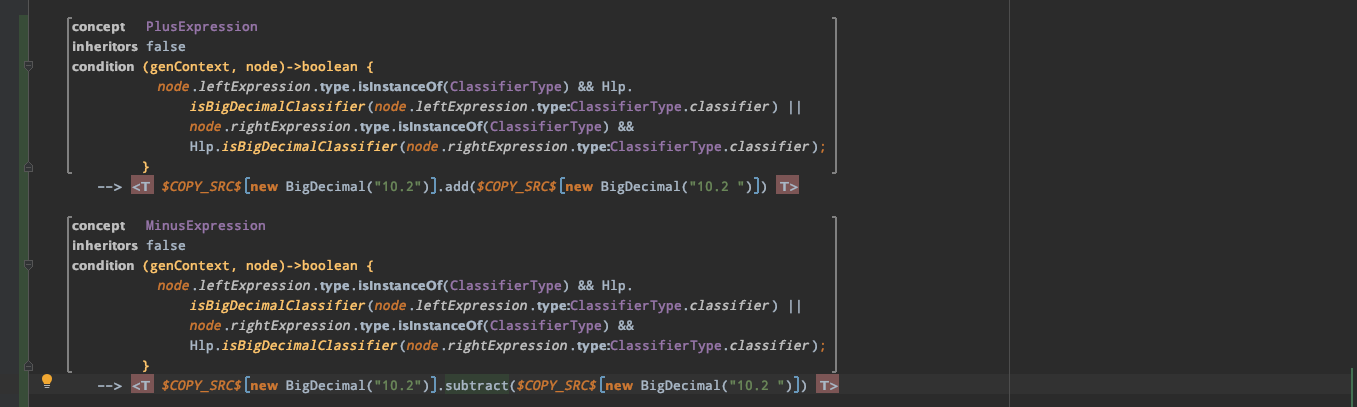

One of our refactorings aimed specifically at reducing generation time by avoiding repeated type calculations for the very same expression. The case is as follows: one of our languages introduced arithmetic operations for non-primitive values (e.g., the Java BigDecimal class). The user of the language can simply write “+” instead of a call to add(). The code generator rewrites the code slightly by inspecting the types of expressions: if the type is BigDecimal and “+” is applied, the expression has to be transformed.

Since we also allowed comparison operations (e.g., ==, <, <=) in our language, these had to be translated to calls to the compareTo() method. The same rewriting logic had to be applied to all comparisons and all arithmetic operations in the language. In a naive first approach, we created a separate MPS generator reduction rule for each method call to translate to (see Figure 6). Since each such reduction rule contained a condition with .type calculations, we ended up with multiple calculations on the same parts of expressions from the input model.
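The target code for such operators might look like the sketch below. The operator-to-method mapping is an assumption for illustration, not our generator's actual output. One documented BigDecimal behavior supports translating == into compareTo(): equals() also compares the scale, so 1.0 and 1.00 are not equal, whereas compareTo() returns 0 for them.

```java
// Sketch of the Java text a generator might emit when both operands are
// BigDecimal. The mapping below is illustrative only.
final class BigDecimalOps {
    static String translate(String op, String left, String right) {
        switch (op) {
            case "+":  return left + ".add(" + right + ")";
            case "-":  return left + ".subtract(" + right + ")";
            case "*":  return left + ".multiply(" + right + ")";
            // Comparisons go through compareTo(), which ignores the scale.
            case "==": return left + ".compareTo(" + right + ") == 0";
            case "!=": return left + ".compareTo(" + right + ") != 0";
            case "<":  return left + ".compareTo(" + right + ") < 0";
            case "<=": return left + ".compareTo(" + right + ") <= 0";
            case ">":  return left + ".compareTo(" + right + ") > 0";
            case ">=": return left + ".compareTo(" + right + ") >= 0";
            default:
                throw new IllegalArgumentException("unsupported operator: " + op);
        }
    }
}
```

Division is deliberately left out. BigDecimal.divide() without a rounding mode or MathContext throws an ArithmeticException for non-terminating results such as 1/3, so a real generator would need an explicit policy for it.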

We refactored this step by step to minimize those calculations. When an operation with a left and a right operand is applied to BigDecimal, we save the calculated type in a transient object in the generation context and reuse that information. That is to say, only one reduction rule, with “inheritors” enabled for the general BinaryOperation, checks the type of the left expression in its condition. If that type is BigDecimal or int, the type is stored, and the type of the right expression is calculated and stored as well. That information is passed on to an MPS template switch, which transforms the operation accordingly. The template switch contains no .type calculations, and the same expressions are no longer typed multiple times.
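A toy version of the refactored rule may help. It is a sketch under simplifying assumptions, not MPS code. Node, TypeCache, and BinaryOperationRule are hypothetical names, the map stands in for the transient object in the generation context, and a lambda stands in for the expensive .type call. The rule types the left operand first and types the right operand only if the left one qualifies. It then hands both to a switch that performs no type calculations.

```java
import java.util.IdentityHashMap;
import java.util.Map;
import java.util.function.Function;

// Toy input node (hypothetical).
final class Node {
    final String text;

    Node(String text) {
        this.text = text;
    }
}

// Caches node types for one generation run (stand-in for a transient object
// kept in the generation context).
final class TypeCache {
    private final Map<Node, String> cache = new IdentityHashMap<>();
    private final Function<Node, String> expensiveTypeOf; // stand-in for node.type
    int computed = 0;                                     // real type calculations

    TypeCache(Function<Node, String> expensiveTypeOf) {
        this.expensiveTypeOf = expensiveTypeOf;
    }

    String typeOf(Node n) {
        String t = cache.get(n);
        if (t == null) {
            t = expensiveTypeOf.apply(n);
            computed++;
            cache.put(n, t);
        }
        return t;
    }
}

final class BinaryOperationRule {
    // One rule for all binary operations; returns null when it does not apply.
    static String reduce(TypeCache types, Node left, String op, Node right) {
        String lt = types.typeOf(left);
        if (!lt.equals("BigDecimal") && !lt.equals("int")) {
            return null;
        }
        String rt = types.typeOf(right);
        if (!lt.equals("BigDecimal") && !rt.equals("BigDecimal")) {
            return null; // plain int arithmetic needs no rewrite
        }
        return templateSwitch(op, wrap(left.text, lt), wrap(right.text, rt));
    }

    private static String wrap(String text, String type) {
        return type.equals("int") ? "BigDecimal.valueOf(" + text + ")" : text;
    }

    // "Template switch": picks the output shape without any type calculation.
    private static String templateSwitch(String op, String l, String r) {
        switch (op) {
            case "+":  return l + ".add(" + r + ")";
            case "-":  return l + ".subtract(" + r + ")";
            case "<":  return l + ".compareTo(" + r + ") < 0";
            case "==": return l + ".compareTo(" + r + ") == 0";
            default:   throw new IllegalArgumentException("unsupported operator: " + op);
        }
    }
}
```

The cache is keyed by node identity. Asking for the same operand's type a second time, for example from an enclosing expression, therefore costs only a map lookup.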

.type calculations in generators are CPU-intensive operations that can slow down generation significantly. In our case, this particular refactoring brought only a small performance gain. The most significant change to our generators was the reduction of the total amount of generated code (Measure 1). Naturally, this in turn significantly reduced the number of expressions inspected during generation, which helped to alleviate the problem of repeated type calculations.

Generation speed and developer satisfaction

Theoretically, the advantages of DSLs are manifold. They promise to increase productivity when developing solutions. The number of program lines that have to be read, written, and understood simply decreases. Elegant and concise syntax increases readability. DSLs allow extensive validation and verification of content, as they are usually semantically more expressive if they directly map the essential concepts of a domain. Last but not least, DSLs are extremely helpful for communication within the development team and with other experts in the respective domain.

Despite these general advantages of DSLs, one should not only focus on the final solution for the end-users. The satisfaction of the development team when applying DSLs is also crucial. A motivated developer team is a prerequisite to benefit from the advantages associated with using DSLs in a project.

We developed the MMS exclusively within MPS, applying in parallel three DSLs that we conceived over the course of the project. After 13 years of development, the MMS project itself, not counting the DSLs, has grown to 139 models with more than 400,000 nodes. These nodes are transformed into Java code by a stack of seven different code generators; three were created by ourselves, and four were taken from existing JetBrains languages. For developers working on the MMS project, generation speed became more and more of an issue over time, demanding ever more patience while regenerating the whole solution, a situation that can impair developer satisfaction.

While the generation mechanism in JetBrains MPS cannot be characterized as slow in general, code generators for huge projects must be designed carefully. In this article, we have introduced ideas and measures to accelerate the generation process and reported on the lessons we learned during one of our largest projects. After a major refactoring of the generators, we were able to bring down the generation and compile time for a complete project rebuild from 3–4 minutes to just one minute on our standard development machine. With these measures, we can provide a highly expressive language for developers while maintaining a pleasant experience in day-to-day work with the tooling.