Configure Big Data Tools environment

Before you start working with Big Data Tools, you need to install the required plugins and configure connections to servers.

Install the required plugins

Whatever you do in PyCharm, you do it in a project. So, open an existing project or create a new project.

In the Settings/Preferences dialog (Ctrl+Alt+S), select Plugins.

Install the Big Data Tools plugin.

Restart the IDE. After the restart, the Big Data Tools tab appears in the rightmost group of the tool windows. Click it to open the Big Data Tools window.

Once Big Data Tools support is enabled in the IDE, you can configure connections to Zeppelin, Spark, Google Storage, and S3 servers. You can also connect to HDFS, WebHDFS, AWS S3, and a local drive using configuration files and URIs.

Configure a server connection

In the Big Data Tools window, click the icon for adding a connection and select the server type. The Big Data Tools Connection dialog opens.

In the Big Data Tools Connection dialog, specify the following parameters depending on the server type:

Notebooks: Zeppelin

Monitoring: Spark, Hadoop

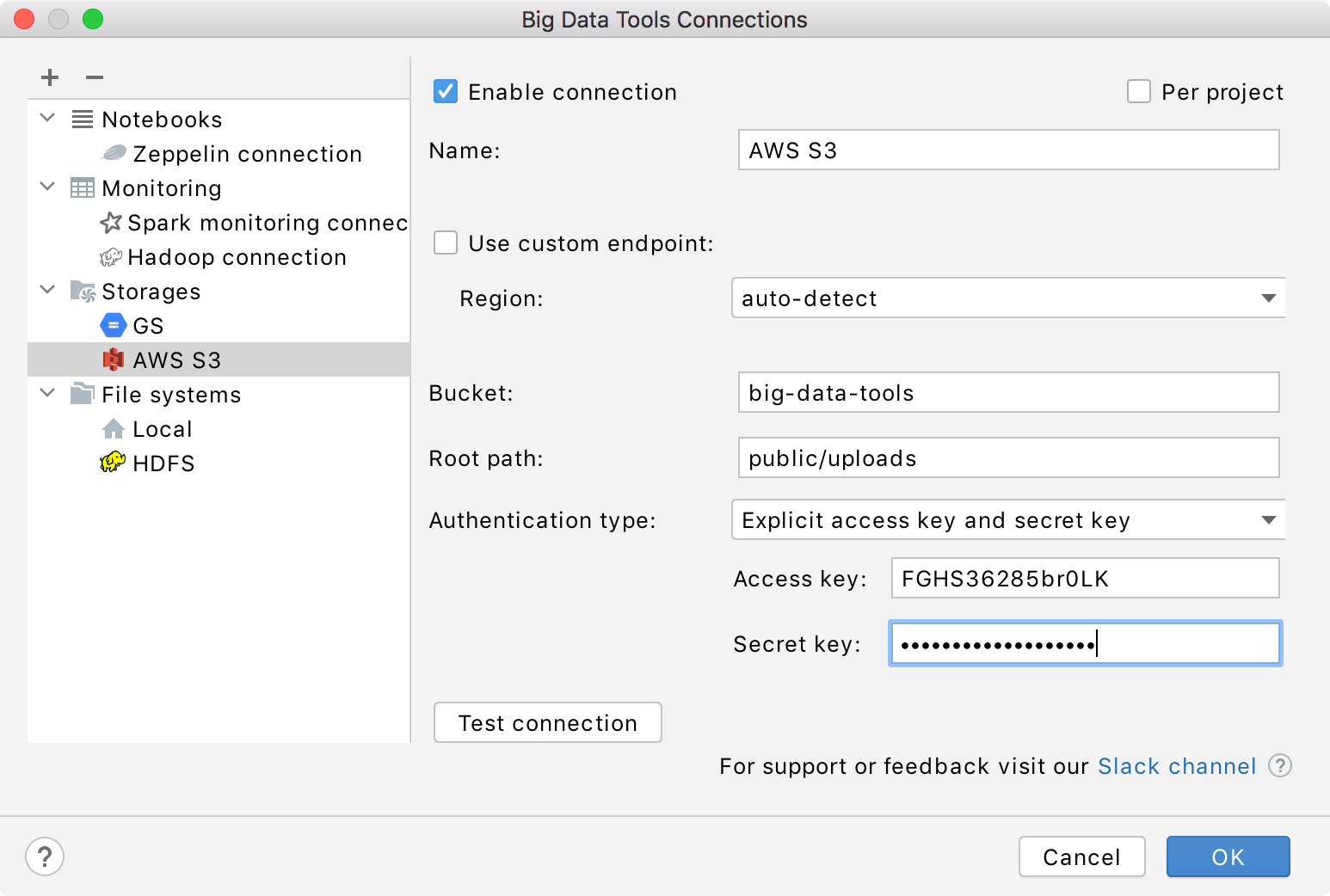

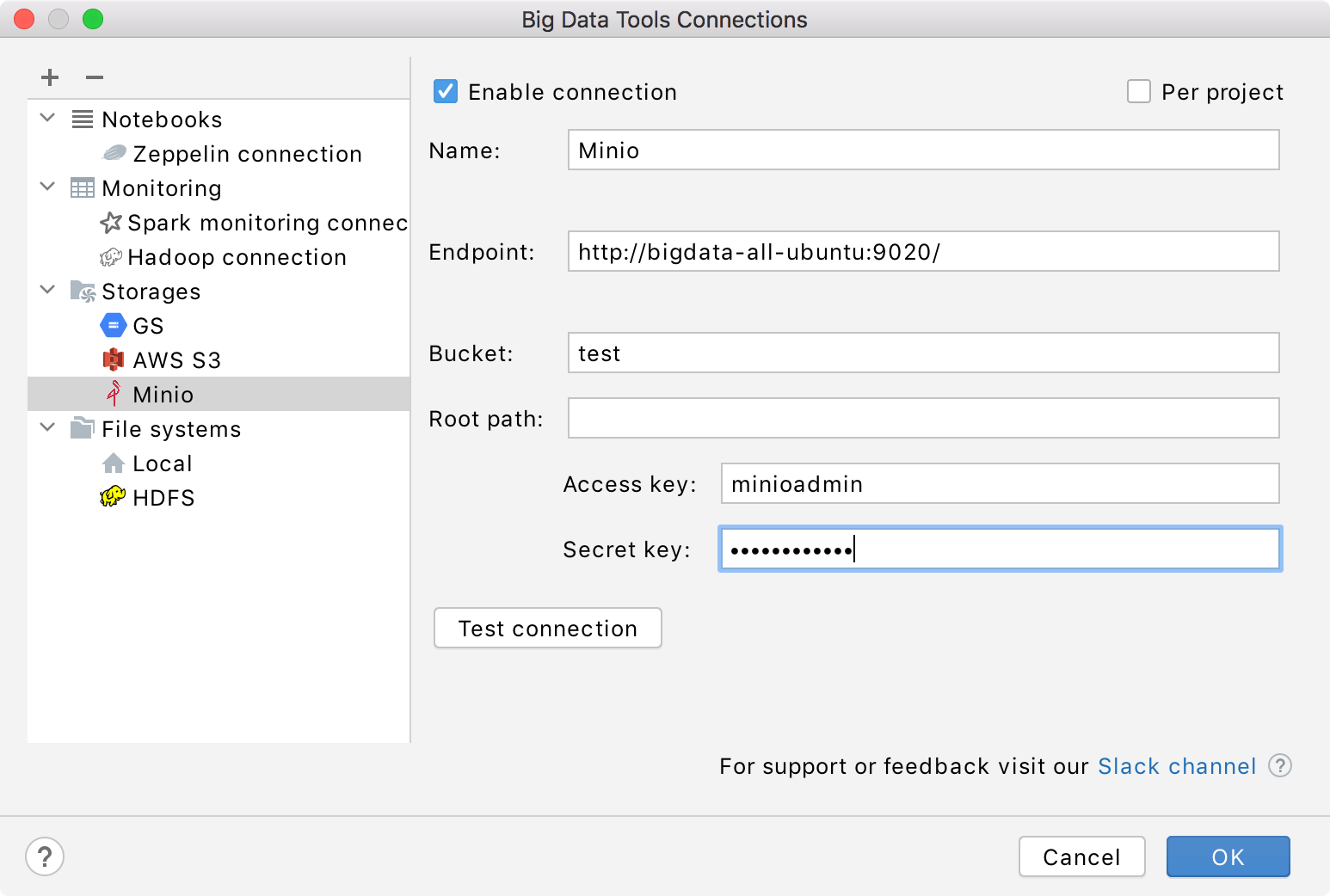

Storages: AWS S3, Minio, Linode, Digital Ocean Spaces, GS, Azure

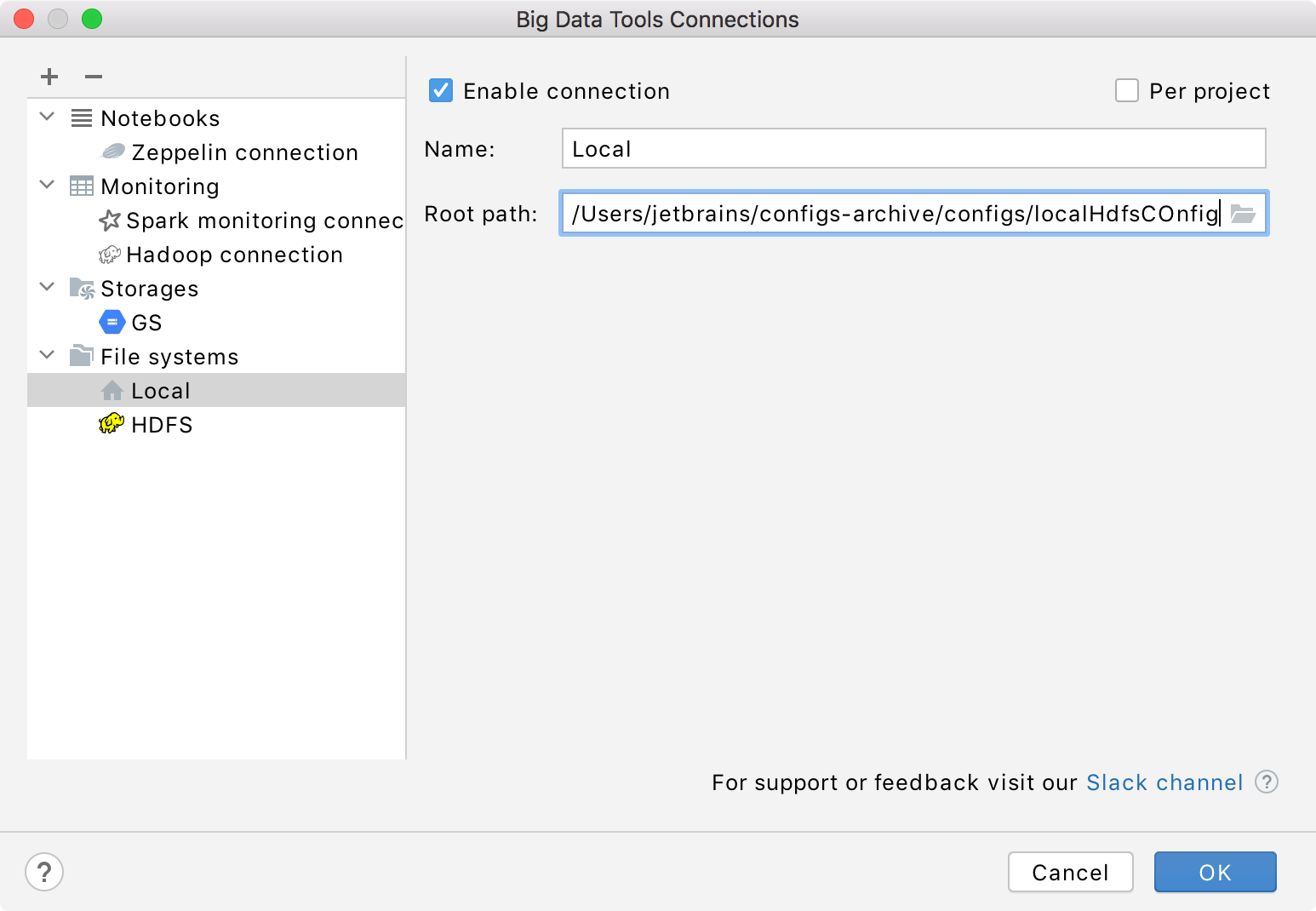

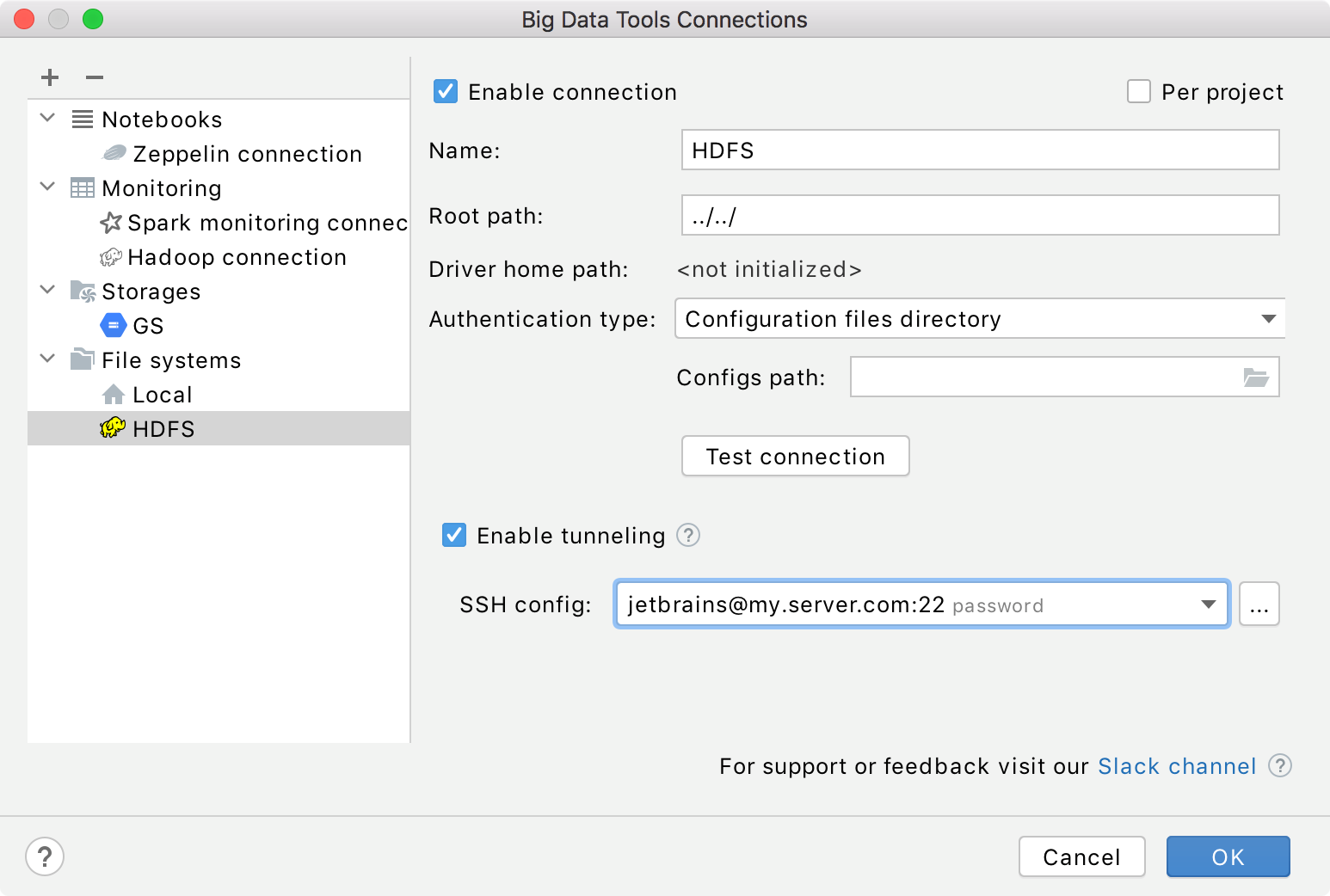

File Systems: FS | Local and FS | HDFS

FS | Local

Mandatory parameters:

Root path: a path to the root directory.

Optionally, you can set up:

Name: the name of the connection to distinguish it from other connections.

Per project: select to enable these connection settings only for the current project. Deselect it if you want this connection to be visible in other projects.

Enable connection: deselect if, for some reason, you want to restrict the use of this connection. By default, newly created connections are enabled.

FS | HDFS

Mandatory parameters:

Root path: a path to the root directory on the target server.

Config path: a path to the directory with the HDFS configuration files. See the samples of configuration files.

File system URI: an explicit URI of an HDFS server. If you select this option, specify the file system URI, for example, localhost:9000, and a username to connect with.

Optionally, you can set up:

Name: the name of the connection to distinguish it from other connections.

Per project: select to enable these connection settings only for the current project. Deselect it if you want this connection to be visible in other projects.

Enable connection: deselect if, for some reason, you want to restrict the use of this connection. By default, newly created connections are enabled.

Enable tunneling: creates an SSH tunnel to the remote host. This can be useful if the target server is in a private network but an SSH connection to a host in that network is available.

Select the checkbox and specify an SSH connection configuration (click ... to create a new SSH configuration).

Note that the Big Data Tools plugin uses the HADOOP_USER_NAME environment variable to log in to the server. If this variable is not defined, the user.name property is used. See more examples of the Hadoop File System configuration files.
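The user lookup order described in the note can be sketched in Python as follows. This is only an illustration, not the plugin's code: `resolve_hadoop_user` is a hypothetical helper, and `getpass.getuser()` stands in as an approximation of the Java `user.name` property, which the JVM derives from the operating system account.

```python
import getpass
import os
from typing import Callable, Mapping, Optional


def resolve_hadoop_user(
    env: Optional[Mapping[str, str]] = None,
    fallback: Callable[[], str] = getpass.getuser,
) -> str:
    """Return the user name a Hadoop client would present, following the note above."""
    if env is None:
        env = os.environ
    # 1. An explicit HADOOP_USER_NAME wins (an empty value is treated as unset here, an assumption).
    user = env.get("HADOOP_USER_NAME")
    if user:
        return user
    # 2. Otherwise fall back to the current OS user, approximating Java's user.name.
    return fallback()
```

For example, `resolve_hadoop_user()` with no arguments reads the real process environment.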

AWS S3

Mandatory parameters:

Bucket: a globally unique Amazon S3 bucket name.

Optionally, you can set up:

Name: the name of the connection to distinguish it from other connections.

Region: an AWS region of the specified bucket. You can select one from the list or let PyCharm detect it automatically.

Root path: a path to the root directory in the specified bucket.

Authentication type: the authentication method. By default, your AWS account credentials are used; alternatively, you can enter an access key and a secret key.

Per project: select to enable these connection settings only for the current project. Deselect it if you want this connection to be visible in other projects.

Enable connection: deselect if, for some reason, you want to restrict the use of this connection. By default, newly created connections are enabled.

Use custom endpoint: select if you want to specify a custom endpoint and a signing region.
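Because S3 bucket names must follow Amazon's naming rules, a quick local check can catch typos before you test the connection. The following is a minimal sketch that covers only a subset of the rules for general purpose buckets (length, allowed characters, no adjacent periods, no IP-address-like names, and a few reserved prefixes and suffixes); `is_valid_s3_bucket_name` is a hypothetical helper, and the AWS documentation remains the authoritative source.

```python
import ipaddress
import re

# Lowercase letters, digits, dots, and hyphens; first and last character must be a letter or digit.
_BUCKET_NAME = re.compile(r"[a-z0-9][a-z0-9.-]*[a-z0-9]")


def is_valid_s3_bucket_name(name: str) -> bool:
    """Check a subset of the Amazon S3 naming rules for general purpose buckets."""
    if not 3 <= len(name) <= 63:
        return False
    if _BUCKET_NAME.fullmatch(name) is None:
        return False
    if ".." in name:  # no two adjacent periods
        return False
    try:
        ipaddress.IPv4Address(name)  # names formatted as an IP address are not allowed
        return False
    except ValueError:
        pass
    # A few reserved prefixes and suffixes.
    if name.startswith(("xn--", "sthree-")) or name.endswith(("-s3alias", "--ol-s3")):
        return False
    return True
```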

Minio

Mandatory parameters:

Endpoint: specify an endpoint to connect to.

Bucket: the name of a bucket on the Minio server.

Optionally, you can set up:

Name: the name of the connection to distinguish it from other connections.

Root path: a path to the root directory in the specified bucket.

Access credentials: Access Key and Secret Key.

Per project: select to enable these connection settings only for the current project. Deselect it if you want this connection to be visible in other projects.

Enable connection: deselect if, for some reason, you want to restrict the use of this connection. By default, newly created connections are enabled.
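Minio servers are commonly addressed path-style, with the bucket in the URL path rather than in the host name, which is one reason the Endpoint field is required. The sketch below shows how an endpoint, a bucket, and an object key could combine into such a URL; `minio_object_url` is a hypothetical helper, and defaulting to `http://` when the endpoint has no scheme is an assumption.

```python
from urllib.parse import quote, urlsplit, urlunsplit


def minio_object_url(endpoint: str, bucket: str, key: str = "") -> str:
    """Build a path-style URL (endpoint/bucket/key) for an S3-compatible server such as Minio."""
    # Assume plain HTTP if the endpoint was entered without a scheme, e.g. "localhost:9000".
    parts = urlsplit(endpoint if "://" in endpoint else f"http://{endpoint}")
    # quote() keeps "/" so that key prefixes (folders) stay readable.
    path = f"/{bucket}/" + quote(key.lstrip("/"))
    return urlunsplit((parts.scheme, parts.netloc, path, "", ""))
```

For example, `minio_object_url("localhost:9000", "analytics", "raw/2024/events.json")` returns `"http://localhost:9000/analytics/raw/2024/events.json"`.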

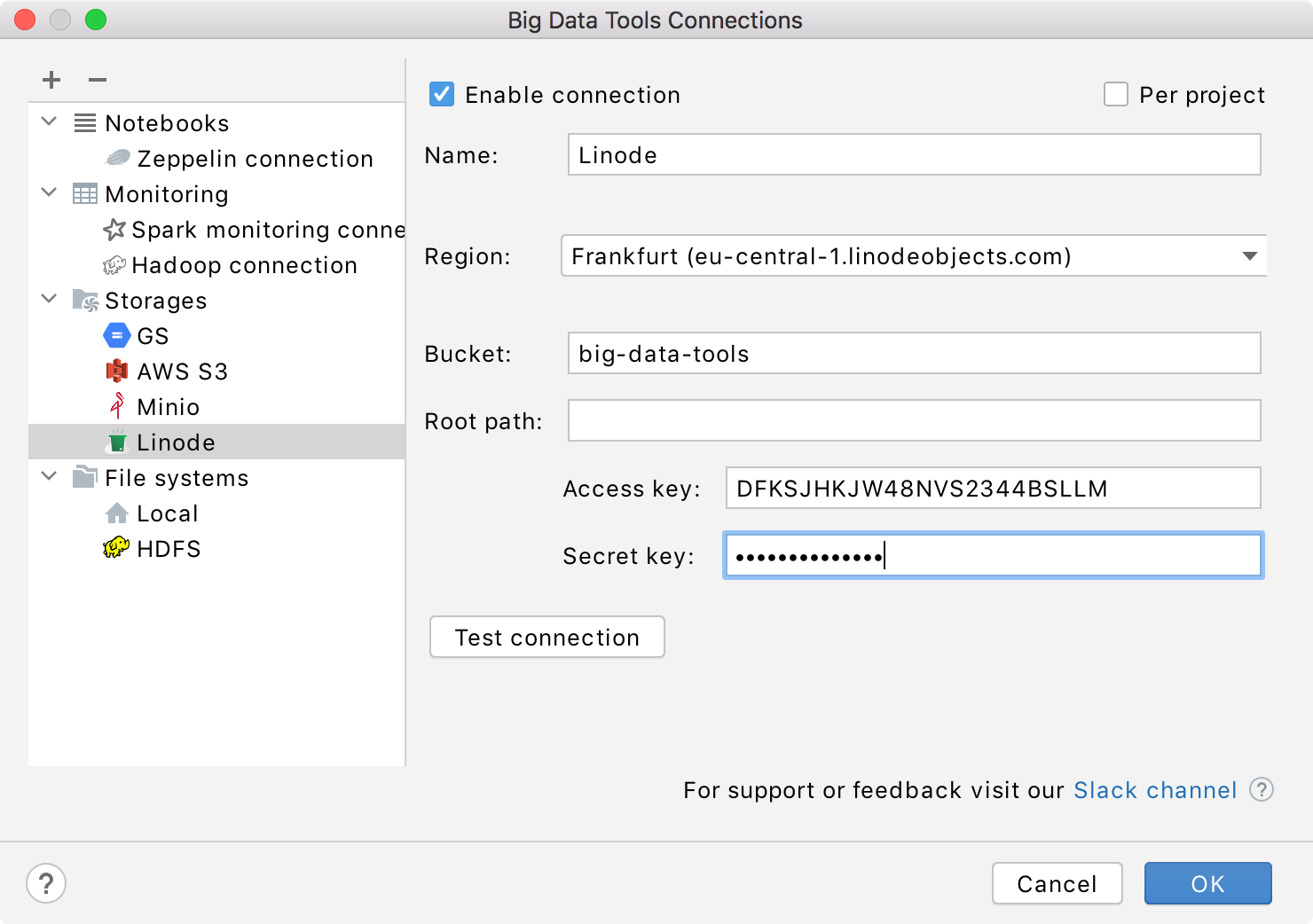

Linode

Mandatory parameters:

Bucket: a globally unique Linode bucket name.

Optionally, you can set up:

Name: the name of the connection to distinguish it from other connections.

Region: a region of the specified bucket. You can select one from the list or let PyCharm detect it automatically.

Root path: a path to the root directory in the specified bucket.

Access credentials: Access Key and Secret Key.

Per project: select to enable these connection settings only for the current project. Deselect it if you want this connection to be visible in other projects.

Enable connection: deselect if, for some reason, you want to restrict the use of this connection. By default, newly created connections are enabled.

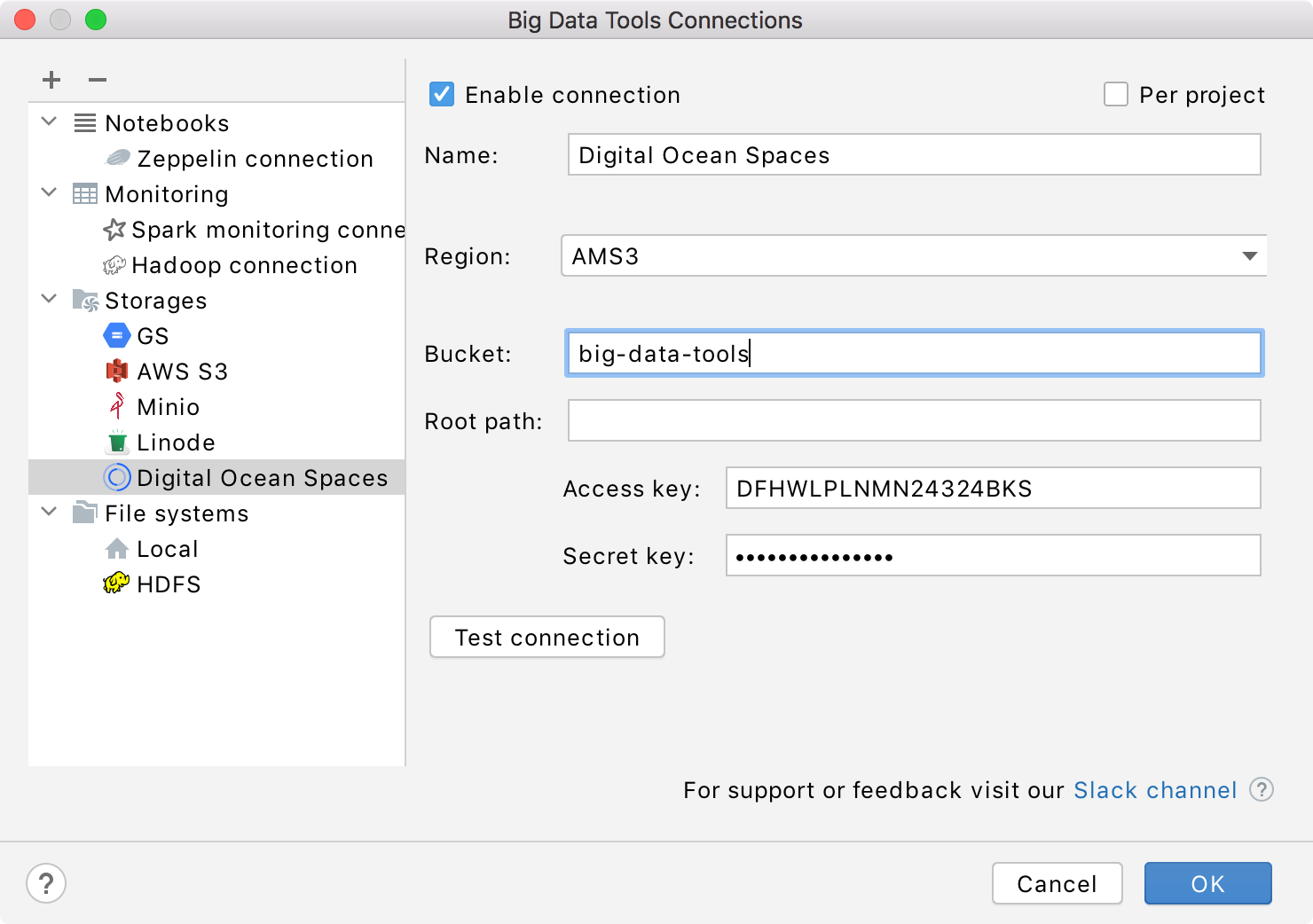

Digital Ocean Spaces

Mandatory parameters:

Bucket: a globally unique Digital Ocean bucket name.

Optionally, you can set up:

Name: the name of the connection to distinguish it from other connections.

Region: a Digital Ocean region of the specified bucket. You can select one from the list or let PyCharm detect it automatically.

Root path: a path to the root directory in the specified bucket.

Access credentials: Access Key and Secret Key.

Per project: select to enable these connection settings only for the current project. Deselect it if you want this connection to be visible in other projects.

Enable connection: deselect if, for some reason, you want to restrict the use of this connection. By default, newly created connections are enabled.
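Linode and Digital Ocean Spaces both expose S3-compatible endpoints whose host names include the region, which is why the Region setting matters for these connections. A minimal sketch of the resulting virtual-hosted-style URLs, assuming the public `linodeobjects.com` and `digitaloceanspaces.com` domains; `bucket_endpoint` and `bucket_url` are hypothetical helpers, and region identifiers such as `us-east-1` or `nyc3` are only examples.

```python
# Public S3-compatible domains (assumed; check your provider's documentation).
_DOMAINS = {
    "linode": "linodeobjects.com",
    "digitalocean": "digitaloceanspaces.com",
}


def bucket_endpoint(provider: str, region: str) -> str:
    """Return the regional endpoint, e.g. https://nyc3.digitaloceanspaces.com."""
    try:
        domain = _DOMAINS[provider]
    except KeyError:
        raise ValueError(f"unknown provider: {provider!r}") from None
    return f"https://{region}.{domain}"


def bucket_url(provider: str, region: str, bucket: str) -> str:
    """Return the virtual-hosted-style URL, with the bucket as the leftmost host label."""
    endpoint = bucket_endpoint(provider, region)
    return endpoint.replace("https://", f"https://{bucket}.", 1)
```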

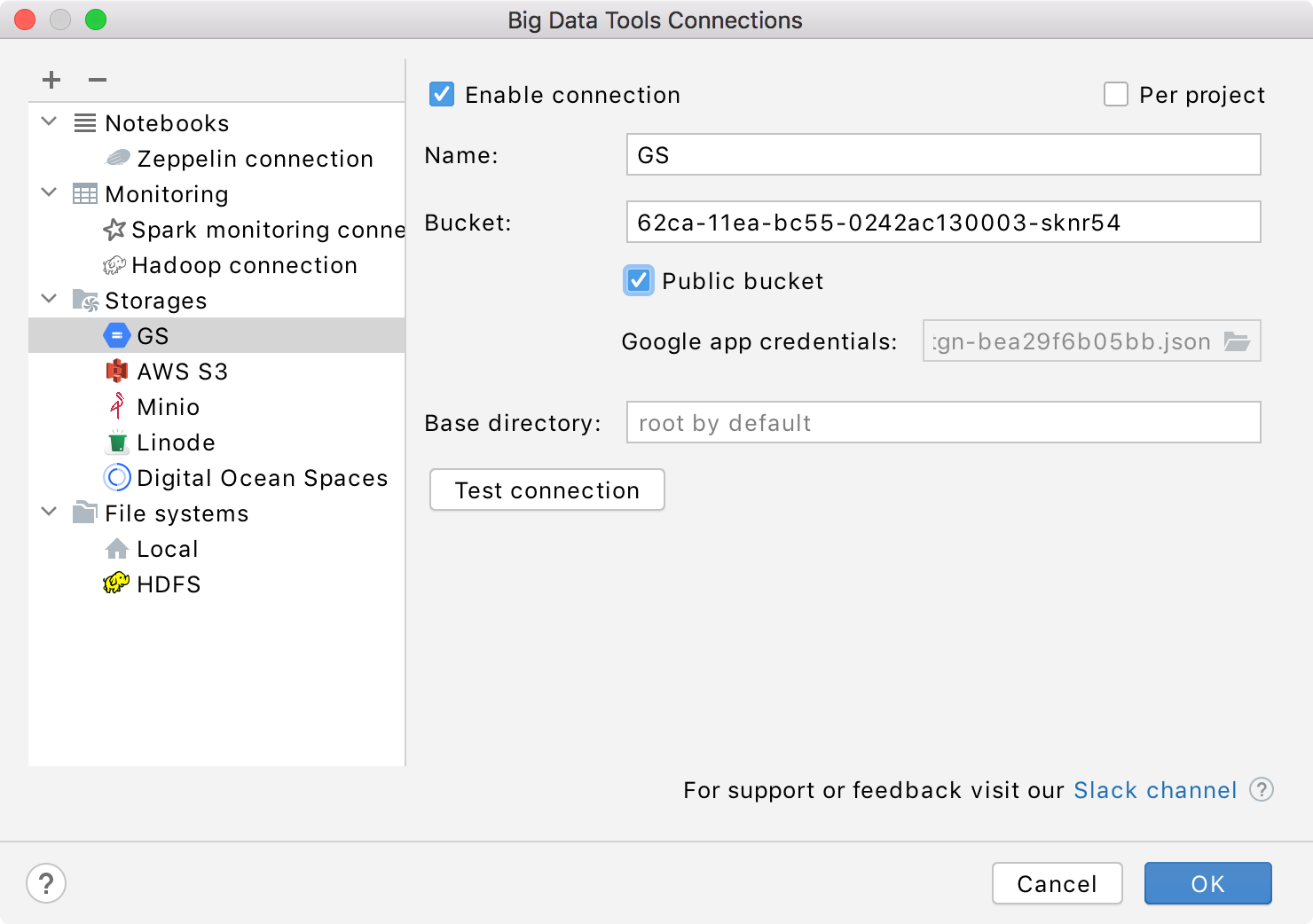

Google Storage

Mandatory parameters:

Bucket: the name of the bucket, the basic container that stores your data in Google Storage.

Google app credentials: a path to the Cloud Storage JSON credentials file. You don't need to specify the credentials if the Public bucket option is selected.

Optionally, you can set up:

Name: the name of the connection to distinguish it from other connections.

Base directory: the base directory in the storage (the root by default).
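The Google app credentials file is a JSON document. As a sketch, the snippet below checks a few fields that Google credential files typically contain before you point the connection at the file: `client_email` and `private_key` for a service account key, or `client_id`, `client_secret`, and `refresh_token` for user credentials. The helper names are hypothetical, and the plugin may accept or require other formats.

```python
import json
from pathlib import Path
from typing import Any, Dict

# Fields checked for each credential type (a subset of what Google's files contain).
_REQUIRED_FIELDS = {
    "service_account": ("project_id", "client_email", "private_key"),
    "authorized_user": ("client_id", "client_secret", "refresh_token"),
}


def parse_gcs_credentials(text: str) -> Dict[str, Any]:
    """Parse credentials JSON and raise ValueError if expected fields are missing."""
    data = json.loads(text)
    if not isinstance(data, dict):
        raise ValueError("credentials file must contain a JSON object")
    cred_type = data.get("type")
    required = _REQUIRED_FIELDS.get(cred_type) if isinstance(cred_type, str) else None
    if required is None:
        raise ValueError(f"unsupported credentials type: {cred_type!r}")
    missing = [field for field in required if not data.get(field)]
    if missing:
        raise ValueError(f"missing fields: {', '.join(missing)}")
    return data


def load_gcs_credentials(path: str) -> Dict[str, Any]:
    """Read and validate the file that you would enter as Google app credentials."""
    return parse_gcs_credentials(Path(path).read_text(encoding="utf-8"))
```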

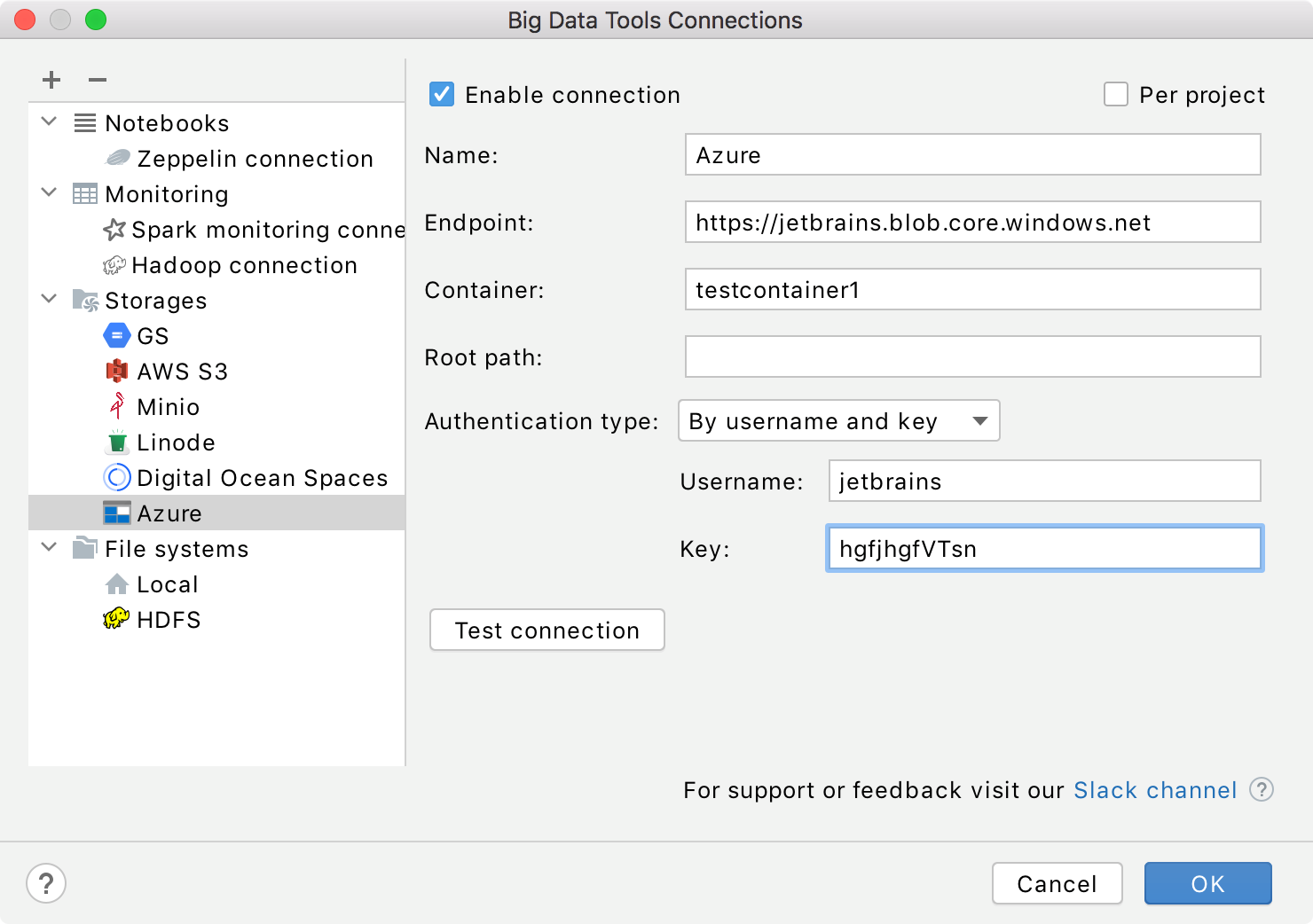

Azure

Mandatory parameters:

Endpoint: specify an endpoint to connect to.

Container: the name of the container that stores your data in Microsoft Azure.

Optionally, you can set up:

Name: the name of the connection to distinguish it from other connections.

Root path: a path to the root directory in the specified container.

Authentication type: the authentication method. You can access the storage with a username and key, a connection string, or a SAS token.

Per project: select to enable these connection settings only for the current project. Deselect it if you want this connection to be visible in other projects.

Enable connection: deselect if, for some reason, you want to restrict the use of this connection. By default, newly created connections are enabled.
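If you choose the connection string authentication type, the string is a semicolon-separated list of `key=value` settings such as `AccountName`, `AccountKey`, and `EndpointSuffix`. A minimal parsing sketch follows; `parse_azure_connection_string` and `blob_endpoint` are hypothetical helpers, and deriving the Blob endpoint as `https://<account>.blob.<suffix>` assumes the standard public Azure cloud defaults.

```python
from typing import Dict


def parse_azure_connection_string(conn_str: str) -> Dict[str, str]:
    """Split an Azure Storage connection string into its key=value settings."""
    settings: Dict[str, str] = {}
    for part in conn_str.strip().split(";"):
        if not part:
            continue  # tolerate a trailing ';'
        # Split on the first '=' only: base64 values such as AccountKey may end with '='.
        key, sep, value = part.partition("=")
        if not sep:
            raise ValueError(f"malformed segment: {part!r}")
        settings[key.strip()] = value.strip()
    return settings


def blob_endpoint(settings: Dict[str, str]) -> str:
    """Return BlobEndpoint if present, otherwise derive <protocol>://<account>.blob.<suffix>."""
    if "BlobEndpoint" in settings:
        return settings["BlobEndpoint"]
    protocol = settings.get("DefaultEndpointsProtocol", "https")
    suffix = settings.get("EndpointSuffix", "core.windows.net")
    return f"{protocol}://{settings['AccountName']}.blob.{suffix}"
```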

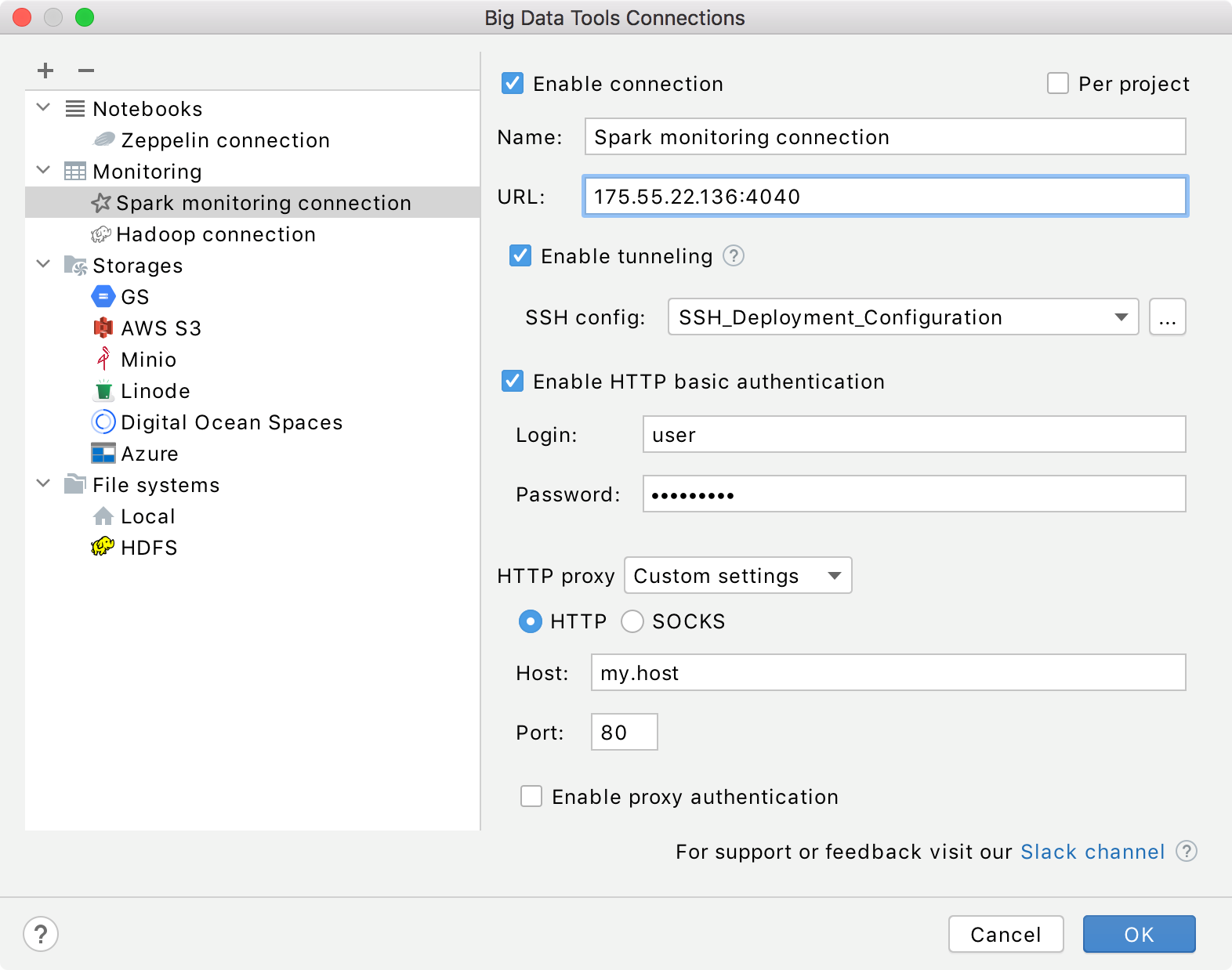

Spark

Mandatory parameters:

URL: the URL of the target server.

Optionally, you can set up:

Name: the name of the connection to distinguish it from other connections.

Enable tunneling: creates an SSH tunnel to the remote host. This can be useful if the target server is in a private network but an SSH connection to a host in that network is available.

Select the checkbox and specify an SSH connection configuration (click ... to create a new SSH configuration).

Per project: select to enable these connection settings only for the current project. Deselect it if you want this connection to be visible in other projects.

Enable connection: deselect if, for some reason, you want to restrict the use of this connection. By default, newly created connections are enabled.

Enable HTTP basic authentication: connect using HTTP basic authentication with the specified username and password.

Enable HTTP proxy: connect through an HTTP proxy using the specified host, port, username, and password.

HTTP Proxy: connect through an HTTP or SOCKS proxy. Select whether to use the IDE HTTP Proxy settings or custom settings with the specified host name, port, login, and password.

Kerberos authentication settings: opens the Kerberos authentication settings.

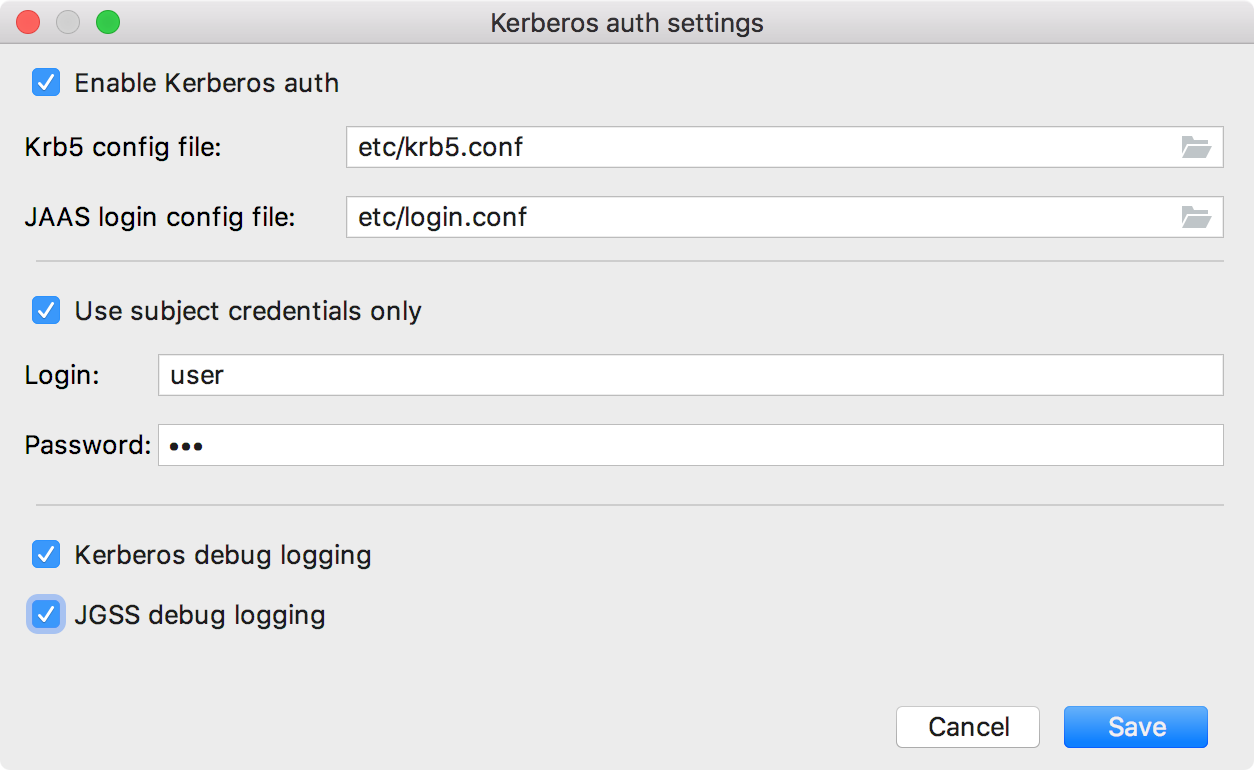

Specify the following options:

Enable Kerberos auth: select to use the Kerberos authentication protocol.

Krb5 config file: a file that contains Kerberos configuration information.

JAAS login config file: a file that consists of one or more entries, each specifying which underlying authentication technology should be used for a particular application or applications.

Use subject credentials only: allows the mechanism to obtain credentials from some vendor-specific location. Select this checkbox and provide the username and password.

To include additional login information in the PyCharm log, select the Kerberos debug logging and JGSS debug logging checkboxes.

Note that the Kerberos settings apply to all your Spark connections.
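For a Spark connection, the URL usually points at a Spark web UI or History Server, both of which serve the monitoring REST API under `/api/v1`. The sketch below builds a request for `/api/v1/applications` and, when a username is given, adds a standard `Authorization: Basic` header of the kind HTTP basic authentication uses. The helper name and the example History Server URL (default port 18080) are assumptions; the request is only built here, not sent.

```python
import base64
import urllib.request


def spark_applications_request(
    base_url: str, username: str = "", password: str = ""
) -> urllib.request.Request:
    """Build (but do not send) a GET request for the Spark REST API application list."""
    url = base_url.rstrip("/") + "/api/v1/applications"
    request = urllib.request.Request(url, method="GET")
    if username:
        # HTTP basic authentication: base64("username:password") in the Authorization header.
        token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
        request.add_header("Authorization", f"Basic {token}")
    return request
```

To send it, pass the request to `urllib.request.urlopen(request, timeout=10)` and decode the JSON body, which lists applications with fields such as `id` and `name`.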

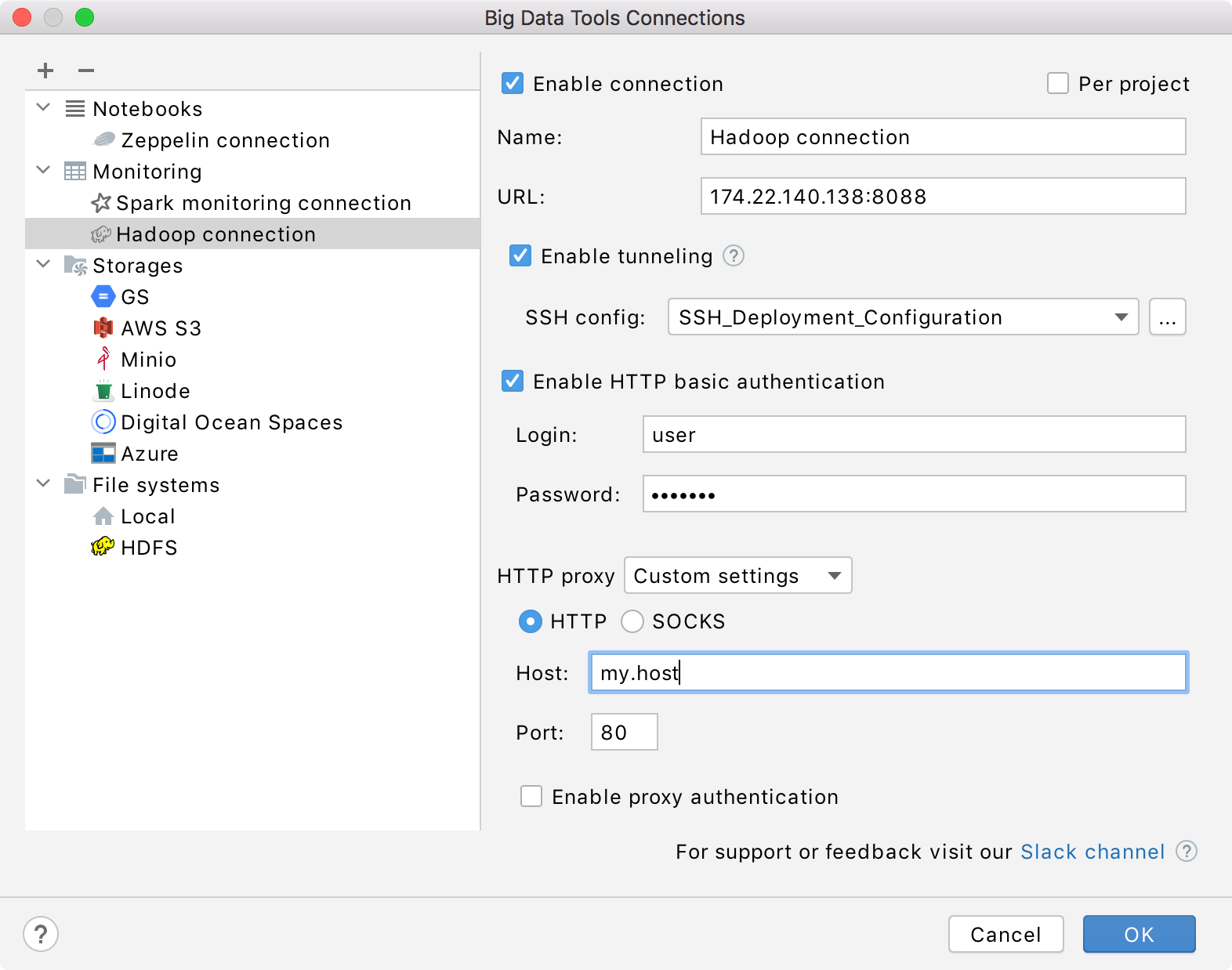

Hadoop

Mandatory parameters:

URL: the URL of the target server.

Optionally, you can set up:

Name: the name of the connection to distinguish it from other connections.

Enable tunneling: creates an SSH tunnel to the remote host. This can be useful if the target server is in a private network but an SSH connection to a host in that network is available.

Select the checkbox and specify an SSH connection configuration (click ... to create a new SSH configuration).

Per project: select to enable these connection settings only for the current project. Deselect it if you want this connection to be visible in other projects.

Enable connection: deselect if, for some reason, you want to restrict the use of this connection. By default, newly created connections are enabled.

Enable HTTP basic authentication: connect using HTTP basic authentication with the specified username and password.

Enable HTTP proxy: connect through an HTTP proxy using the specified host, port, username, and password.

HTTP Proxy: connect through an HTTP or SOCKS proxy. Select whether to use the IDE HTTP Proxy settings or custom settings with the specified host name, port, login, and password.

Kerberos authentication settings: opens the Kerberos authentication settings.

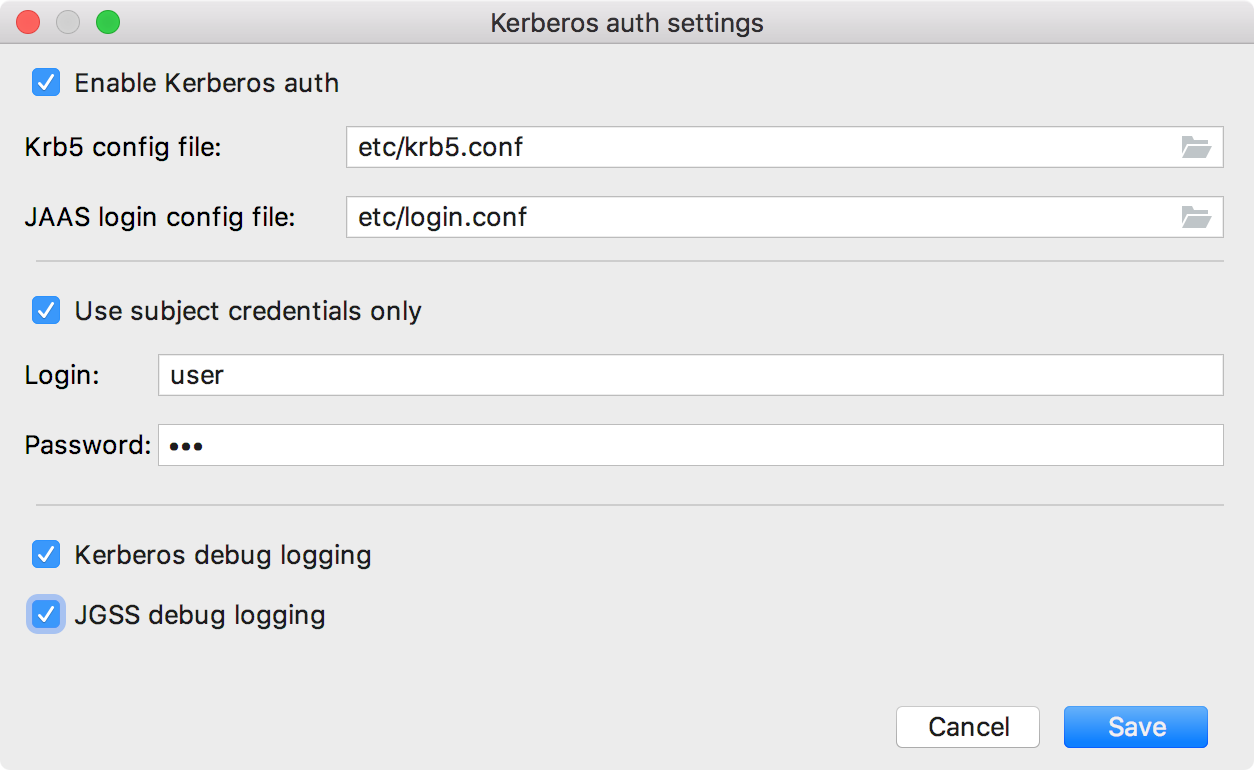

Specify the following options:

Enable Kerberos auth: select to use the Kerberos authentication protocol.

Krb5 config file: a file that contains Kerberos configuration information.

JAAS login config file: a file that consists of one or more entries, each specifying which underlying authentication technology should be used for a particular application or applications.

Use subject credentials only: allows the mechanism to obtain credentials from some vendor-specific location. Select this checkbox and provide the username and password.

To include additional login information in the PyCharm log, select the Kerberos debug logging and JGSS debug logging checkboxes.

Note that the Kerberos settings apply to all your Spark connections.
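The Kerberos options above correspond closely to standard Java security system properties, so one rough way to reproduce the same setup outside the IDE, for example when running a JVM-based client, is to pass them as `-D` flags. The mapping below is an assumption made for illustration (the plugin's internal handling is not documented here), and `kerberos_jvm_options` and `jaas_entry` are hypothetical helpers; the property names and the `Krb5LoginModule` options themselves are standard Java.

```python
from typing import Dict, List, Optional


def kerberos_jvm_options(
    krb5_conf: str,
    jaas_conf: Optional[str] = None,
    use_subject_creds_only: bool = True,
    krb5_debug: bool = False,
    jgss_debug: bool = False,
) -> List[str]:
    """Map the Kerberos dialog options onto JVM -D system properties (assumed mapping)."""
    props: Dict[str, str] = {"java.security.krb5.conf": krb5_conf}  # Krb5 config file
    if jaas_conf:
        props["java.security.auth.login.config"] = jaas_conf  # JAAS login config file
    # Use subject credentials only (the JVM's own default for this property is true).
    props["javax.security.auth.useSubjectCredsOnly"] = "true" if use_subject_creds_only else "false"
    if krb5_debug:
        props["sun.security.krb5.debug"] = "true"  # Kerberos debug logging
    if jgss_debug:
        props["sun.security.jgss.debug"] = "true"  # JGSS debug logging
    return [f"-D{name}={value}" for name, value in props.items()]


def jaas_entry(name: str, principal: str, keytab: str) -> str:
    """Render a JAAS login entry that logs in with Krb5LoginModule from a keytab."""
    return (
        f"{name} {{\n"
        "  com.sun.security.auth.module.Krb5LoginModule required\n"
        "  useKeyTab=true\n"
        f'  keyTab="{keytab}"\n'
        f'  principal="{principal}"\n'
        "  storeKey=true\n"
        "  doNotPrompt=true;\n"
        "};\n"
    )
```

For example, `jaas_entry("com.sun.security.jgss.initiate", "testuser@EXAMPLE.COM", "/path/to/testuser.keytab")` renders an entry under a name commonly used by the JDK's JGSS initiator; whether the plugin reads that particular entry is not confirmed here.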

You can also reuse any of the existing Spark connections. Just select it from the Spark Monitoring list.
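A Hadoop monitoring connection typically targets the YARN ResourceManager web address. As a hedged sketch, the ResourceManager REST API reports basic cluster state at `/ws/v1/cluster/info`, which makes a convenient manual check that the URL you entered is reachable. The helper names and the host `rm.example.com:8088` (8088 is the usual ResourceManager web port) are illustrative assumptions.

```python
import json
import urllib.request
from typing import Any, Dict


def yarn_cluster_info_url(base_url: str) -> str:
    """Return the ResourceManager REST endpoint that reports cluster information."""
    return base_url.rstrip("/") + "/ws/v1/cluster/info"


def fetch_yarn_cluster_info(base_url: str, timeout: float = 10.0) -> Dict[str, Any]:
    """GET /ws/v1/cluster/info and return the "clusterInfo" object from the JSON reply."""
    request = urllib.request.Request(
        yarn_cluster_info_url(base_url), headers={"Accept": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)["clusterInfo"]
```

Against a live cluster, `fetch_yarn_cluster_info("http://rm.example.com:8088")` returns a dictionary with fields such as `state` and `resourceManagerVersion`.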

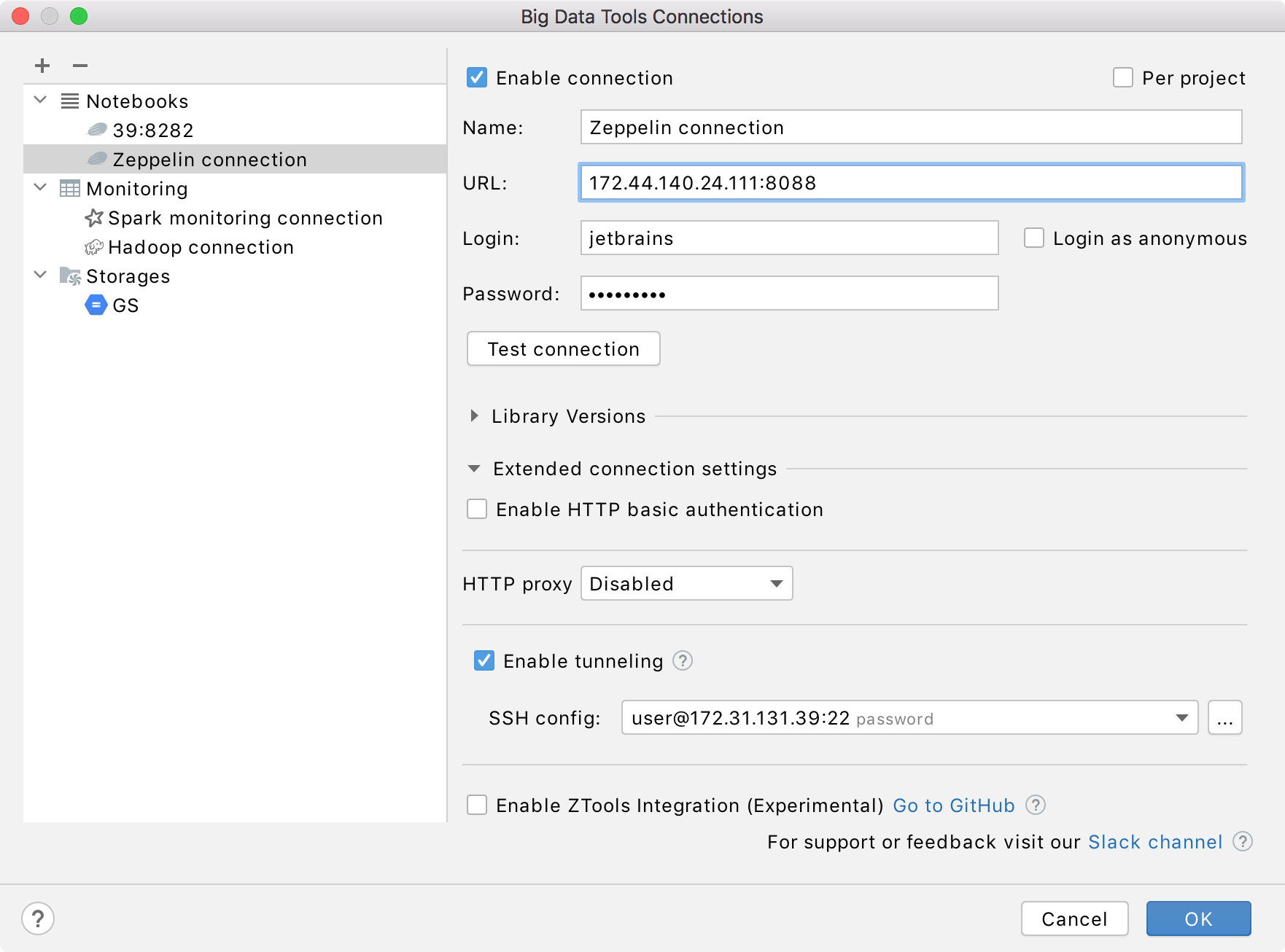

Zeppelin

Mandatory parameters:

URL: the URL of the target server.

Login and Password: your credentials to access the target server.

Optionally, you can set up:

Name: the name of the connection to distinguish it from other connections.

Login as anonymous: select to log in without credentials.

Per project: select to enable these connection settings only for the current project. Deselect it if you want this connection to be visible in other projects.

Enable connection: deselect if, for some reason, you want to restrict the use of this connection. By default, newly created connections are enabled.

Library Versions: Scala Version, Spark Version, and Hadoop Version. These values are derived from the plugin bundles. If needed, specify alternative versions.

Enable HTTP basic authentication: connect using HTTP basic authentication with the specified username and password.

HTTP Proxy: connect through an HTTP or SOCKS proxy. Select whether to use the IDE HTTP Proxy settings or custom settings with the specified host name, port, login, and password.

Enable tunneling: creates an SSH tunnel to the remote host. This can be useful if the target server is in a private network but an SSH connection to a host in that network is available.

Select the checkbox and specify an SSH connection configuration (click ... to create a new SSH configuration), any free port on the local host, the address of the target remote host, and the port of the target application.
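The tunnel settings (a free local port, the remote host, and the port of the target application) map onto OpenSSH local port forwarding. The sketch below asks the OS for a free local port and builds an equivalent `ssh -N -L` command; the helper names and host names are hypothetical, and this is not necessarily how the plugin opens its tunnel.

```python
import socket
from typing import List


def find_free_local_port() -> int:
    """Ask the OS for an unused TCP port on localhost (another process may grab it later)."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
        return sock.getsockname()[1]


def ssh_tunnel_command(
    ssh_target: str, remote_host: str, remote_port: int, local_port: int
) -> List[str]:
    """Build an OpenSSH command that forwards localhost:local_port to remote_host:remote_port."""
    return [
        "ssh",
        "-N",  # no remote command: forwarding only
        "-L",
        f"{local_port}:{remote_host}:{remote_port}",
        ssh_target,  # the SSH host inside the private network, e.g. user@bastion
    ]
```

For example, `ssh_tunnel_command("user@bastion.example.com", "zeppelin.internal", 8080, find_free_local_port())` yields a command you could pass to `subprocess.Popen`; the application is then reachable at `http://localhost:<local port>`.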

Once you fill in the settings, click Test connection to ensure that all configuration parameters are correct. Then click OK.
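For Zeppelin, a comparable manual check is to log in through Zeppelin's REST API, which accepts form-encoded `userName` and `password` fields at `/api/login`. The following sketch only builds that request; `zeppelin_login_request` and the URL are hypothetical, and Test connection in the IDE may check different things.

```python
import urllib.parse
import urllib.request


def zeppelin_login_request(base_url: str, user: str, password: str) -> urllib.request.Request:
    """Build (but do not send) a POST /api/login request with form-encoded credentials."""
    body = urllib.parse.urlencode({"userName": user, "password": password}).encode("utf-8")
    return urllib.request.Request(
        base_url.rstrip("/") + "/api/login",
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )
```

Sending it with `urllib.request.urlopen(...)` raises `urllib.error.HTTPError` if the server rejects the credentials.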

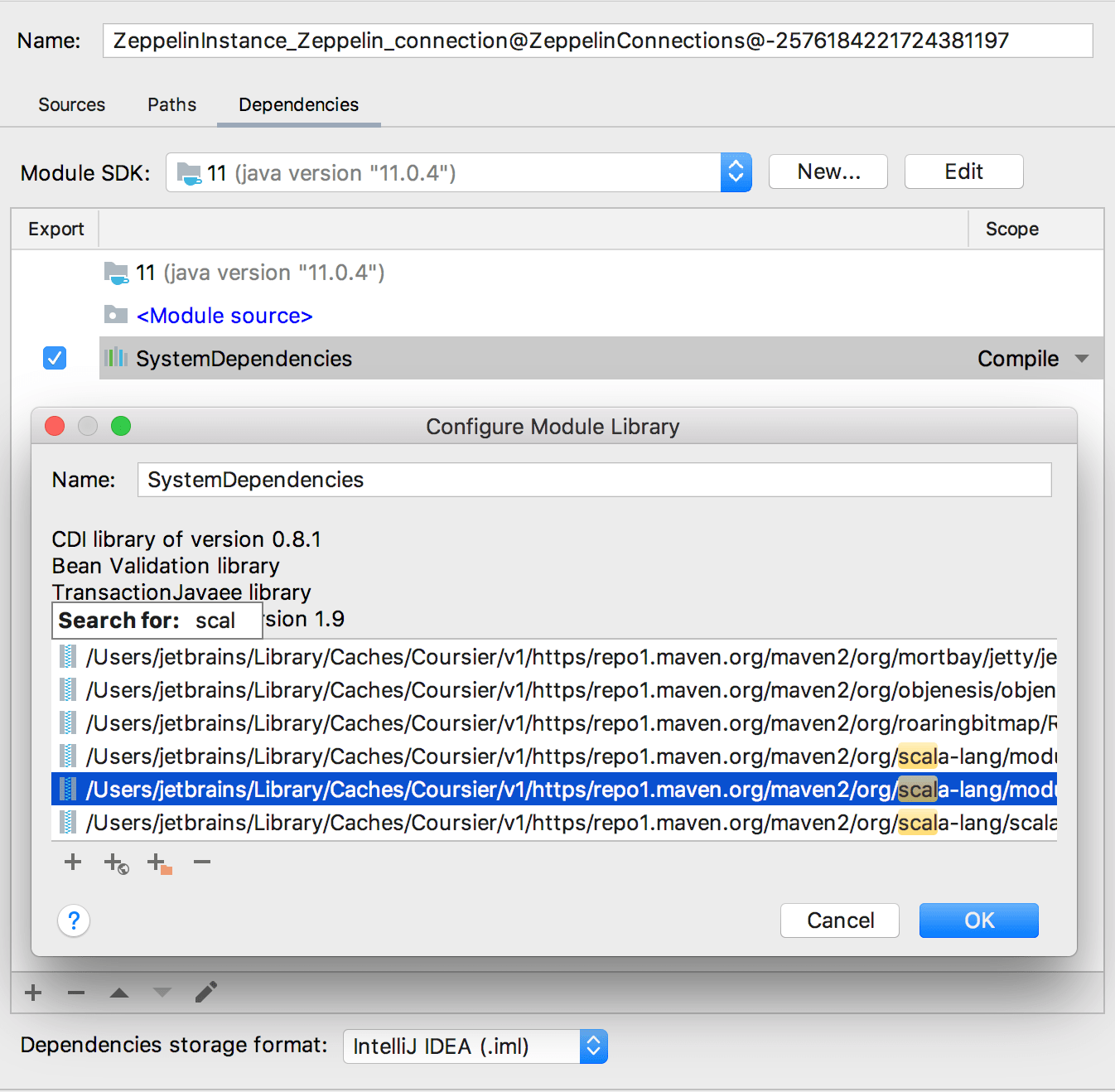

Configure notebook dependencies

From the main menu, open the Project Structure dialog.

In the Project Structure dialog, select Modules under Project Settings. Then select any of the configured connections in the list of modules and double-click System Dependencies.

Inspect the list of the added libraries. Click the list and start typing to search for a particular library.

If needed, modify the list of libraries. Use the corresponding icons to:

Add a new library.

Specify the URL of the external documentation.

Select the items that you want PyCharm to ignore (folders, archives, and folders within the archives), and click OK.

Remove the selected ordinary library from the library, or restore the selected excluded items. The items themselves stay in the library.

Samples of Hadoop File System configuration files

HDFS

```xml
<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.hdfs.impl</name>
    <value>org.apache.hadoop.hdfs.DistributedFileSystem</value>
  </property>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://example.com:9000/</value>
  </property>
</configuration>
```

S3

```xml
<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.s3a.impl</name>
    <value>org.apache.hadoop.fs.s3a.S3AFileSystem</value>
  </property>
  <property>
    <name>fs.s3a.access.key</name>
    <value>sample_access_key</value>
  </property>
  <property>
    <name>fs.s3a.secret.key</name>
    <value>sample_secret_key</value>
  </property>
  <property>
    <name>fs.defaultFS</name>
    <value>s3a://example.com/</value>
  </property>
</configuration>
```

WebHDFS

```xml
<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.webhdfs.impl</name>
    <value>org.apache.hadoop.hdfs.web.WebHdfsFileSystem</value>
  </property>
  <property>
    <name>fs.defaultFS</name>
    <value>webhdfs://master.example.com:50070/</value>
  </property>
</configuration>
```

WebHDFS and Kerberos

```xml
<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.webhdfs.impl</name>
    <value>org.apache.hadoop.hdfs.web.WebHdfsFileSystem</value>
  </property>
  <property>
    <name>fs.defaultFS</name>
    <value>webhdfs://master.example.com:50070</value>
  </property>
  <property>
    <name>hadoop.security.authentication</name>
    <value>Kerberos</value>
  </property>
  <property>
    <name>dfs.web.authentication.kerberos.principal</name>
    <value>testuser@EXAMPLE.COM</value>
  </property>
  <property>
    <name>hadoop.security.authorization</name>
    <value>true</value>
  </property>
</configuration>
```
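To check a configuration file like the samples above before using it as the Config path, you can read its properties with Python's standard `xml.etree.ElementTree`. A minimal sketch; `read_hadoop_properties` and `default_fs_scheme` are hypothetical helpers, and because Hadoop can often resolve `fs.<scheme>.impl` on its own, a missing implementation class is reported as `None` rather than treated as an error.

```python
import xml.etree.ElementTree as ET
from typing import Dict, Optional, Tuple
from urllib.parse import urlparse


def read_hadoop_properties(xml_text: str) -> Dict[str, str]:
    """Return the name -> value pairs from a Hadoop <configuration> document."""
    # An XML declaration must be the very first thing in the text, so strip leading whitespace.
    root = ET.fromstring(xml_text.strip())
    if root.tag != "configuration":
        raise ValueError(f"expected <configuration>, got <{root.tag}>")
    props: Dict[str, str] = {}
    for prop in root.findall("property"):
        name = (prop.findtext("name") or "").strip()
        if name:
            props[name] = (prop.findtext("value") or "").strip()
    return props


def default_fs_scheme(props: Dict[str, str]) -> Tuple[str, Optional[str]]:
    """Return the fs.defaultFS scheme and the matching fs.<scheme>.impl class, if set."""
    default_fs = props.get("fs.defaultFS")
    if not default_fs:
        raise ValueError("fs.defaultFS is not set")
    scheme = urlparse(default_fs).scheme
    return scheme, props.get(f"fs.{scheme}.impl")
```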