AI Playground

Enable the AI Playground plugin

This functionality relies on the AI Playground plugin, which is bundled and enabled in PyCharm by default. If the relevant features are not available, make sure that you did not disable the plugin. For more information, refer to Open plugin settings.

Press Ctrl+Alt+S to open settings and then select Plugins.

Open the Installed tab, find the AI Playground plugin, and select the checkbox next to the plugin name.

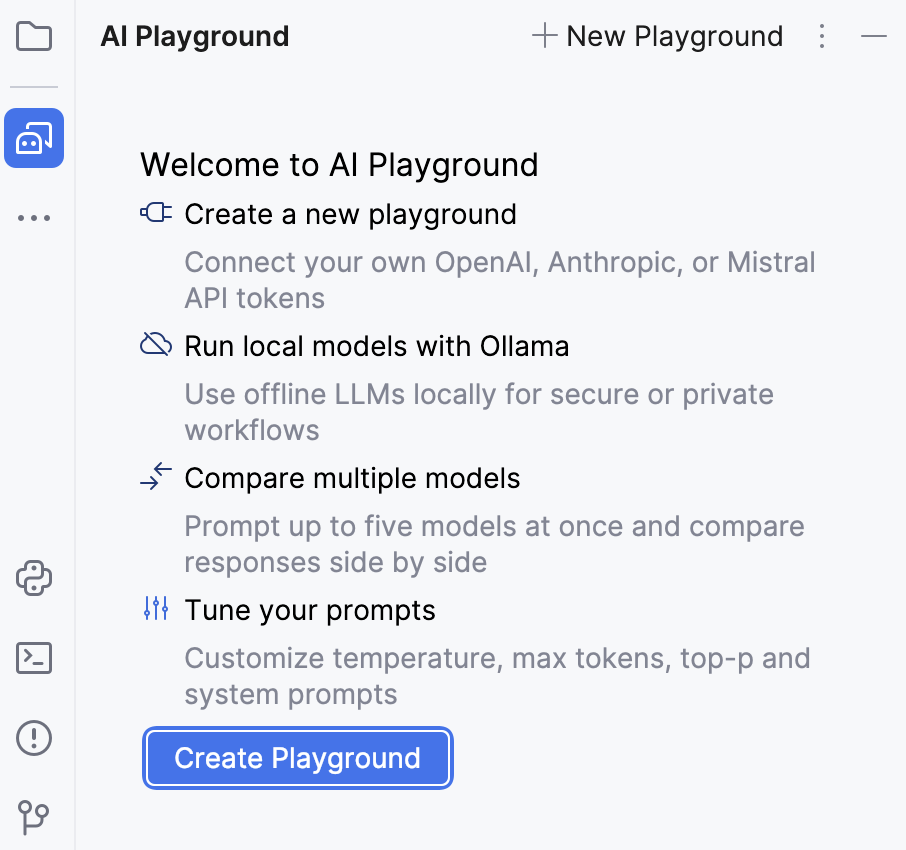

AI Playground is a built-in tool that allows you to experiment with AI models right inside your IDE. It is designed for fast, simple, and seamless prompt testing and model comparison—no complex setup or context switching is required.

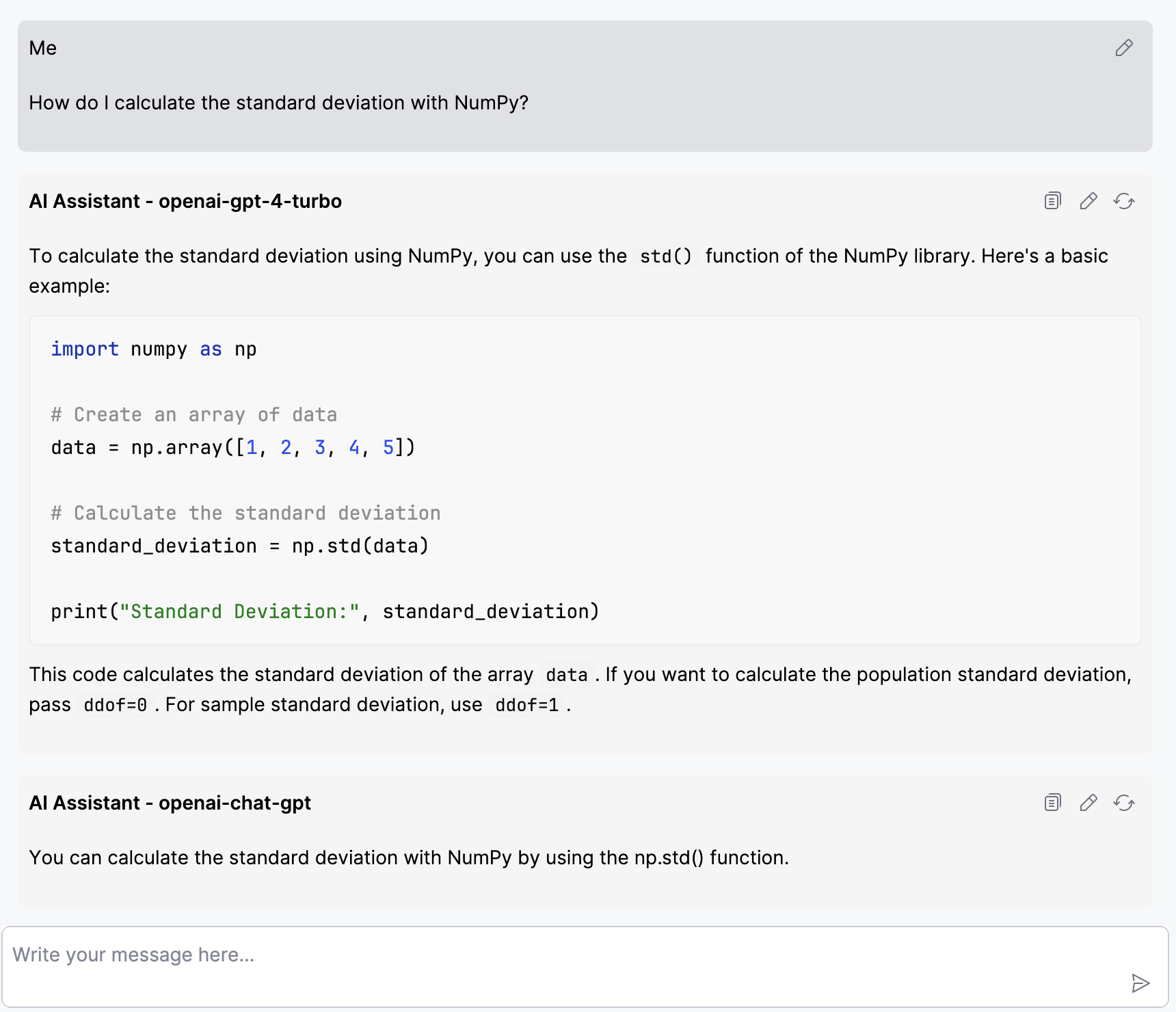

Compare multiple models

Send the same prompt to up to five models at once and compare model responses:

Open the AI Playground tool window.

Click to create a new playground.

Click Add Model and select a model from the list:

Local model

Model from a JetBrains AI subscription. If you have an active JetBrains AI subscription, its models are available automatically.

Configure prompt settings for each model.

In the input field, type your message and click Send or press Enter. The responses from each model appear in the AI Playground chat.

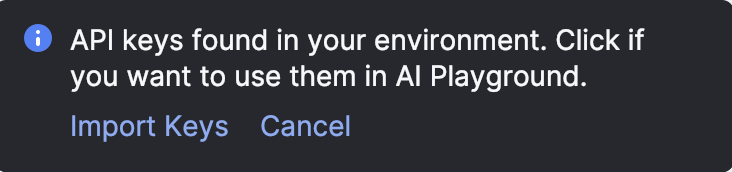
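The comparison workflow above sends one prompt to several models and collects a reply from each. The following Python sketch shows that idea outside the IDE. It is not AI Playground's implementation: `compare_models`, `MAX_MODELS`, and the stub models are hypothetical names used only for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

# Hypothetical stand-in for a model: takes a prompt, returns a response string.
ModelFn = Callable[[str], str]

MAX_MODELS = 5  # AI Playground compares up to five models at once


def compare_models(prompt: str, models: Dict[str, ModelFn]) -> Dict[str, str]:
    """Send the same prompt to every model concurrently and collect responses by model name."""
    if not 1 <= len(models) <= MAX_MODELS:
        raise ValueError(f"expected 1 to {MAX_MODELS} models, got {len(models)}")
    with ThreadPoolExecutor(max_workers=len(models)) as pool:
        # Submit every request first so the calls can run in parallel.
        futures = {name: pool.submit(fn, prompt) for name, fn in models.items()}
        # Wait for each result; the dict keeps the order in which models were added.
        return {name: fut.result() for name, fut in futures.items()}


if __name__ == "__main__":
    # Stub models for illustration only; real models would call a local or remote API.
    stubs = {
        "echo": lambda p: p,
        "upper": lambda p: p.upper(),
        "length": lambda p: str(len(p)),
    }
    for name, reply in compare_models("Hello, playground", stubs).items():
        print(f"{name}: {reply}")
```

Submitting all requests before collecting any result means one slow model does not hold up the requests to the others. This matches the side-by-side comparison the tool window provides.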

Use your own models

If you have configured API keys for major LLM providers, either globally or in a .env file, AI Playground detects them and prompts you to import them into the playground.

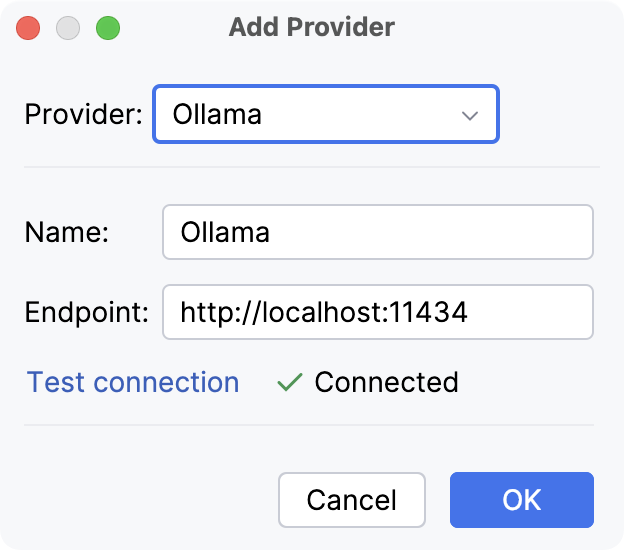
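The sketch below gives a rough idea of how key detection could work. It checks the process environment and a simple `.env` file for provider API keys. It assumes the conventional variable names read by the OpenAI and Anthropic Python SDKs (`OPENAI_API_KEY`, `ANTHROPIC_API_KEY`), but the names and rules AI Playground actually uses may differ. `parse_dotenv` and `detect_provider_keys` are hypothetical helpers.

```python
import os
from pathlib import Path
from typing import Dict, Optional

# Assumed mapping from provider to the conventional environment variable name.
# AI Playground's actual detection rules may differ.
PROVIDER_KEY_VARS = {
    "OpenAI": "OPENAI_API_KEY",
    "Anthropic": "ANTHROPIC_API_KEY",
}


def parse_dotenv(text: str) -> Dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Strip optional surrounding quotes from the value.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def detect_provider_keys(dotenv_path: Optional[Path] = None) -> Dict[str, str]:
    """Return provider -> variable name for each key found globally or in the .env file."""
    found = dict(os.environ)  # globally configured variables
    if dotenv_path is not None and dotenv_path.is_file():
        found.update(parse_dotenv(dotenv_path.read_text()))
    return {provider: var for provider, var in PROVIDER_KEY_VARS.items() if found.get(var)}


if __name__ == "__main__":
    # Report which providers have a key, without printing the secret values.
    print(detect_provider_keys(Path(".env")))
```

The helper returns variable names rather than key values, so a detection report never exposes the secrets themselves.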

To connect a provider manually:

Click Add Model, then select Connect Provider.

In the dialog that opens:

Select the provider from the list of available providers.

Specify the name of the provider.

Enter the API key or endpoint if required and click to ensure that the connection is successful.

Click OK.

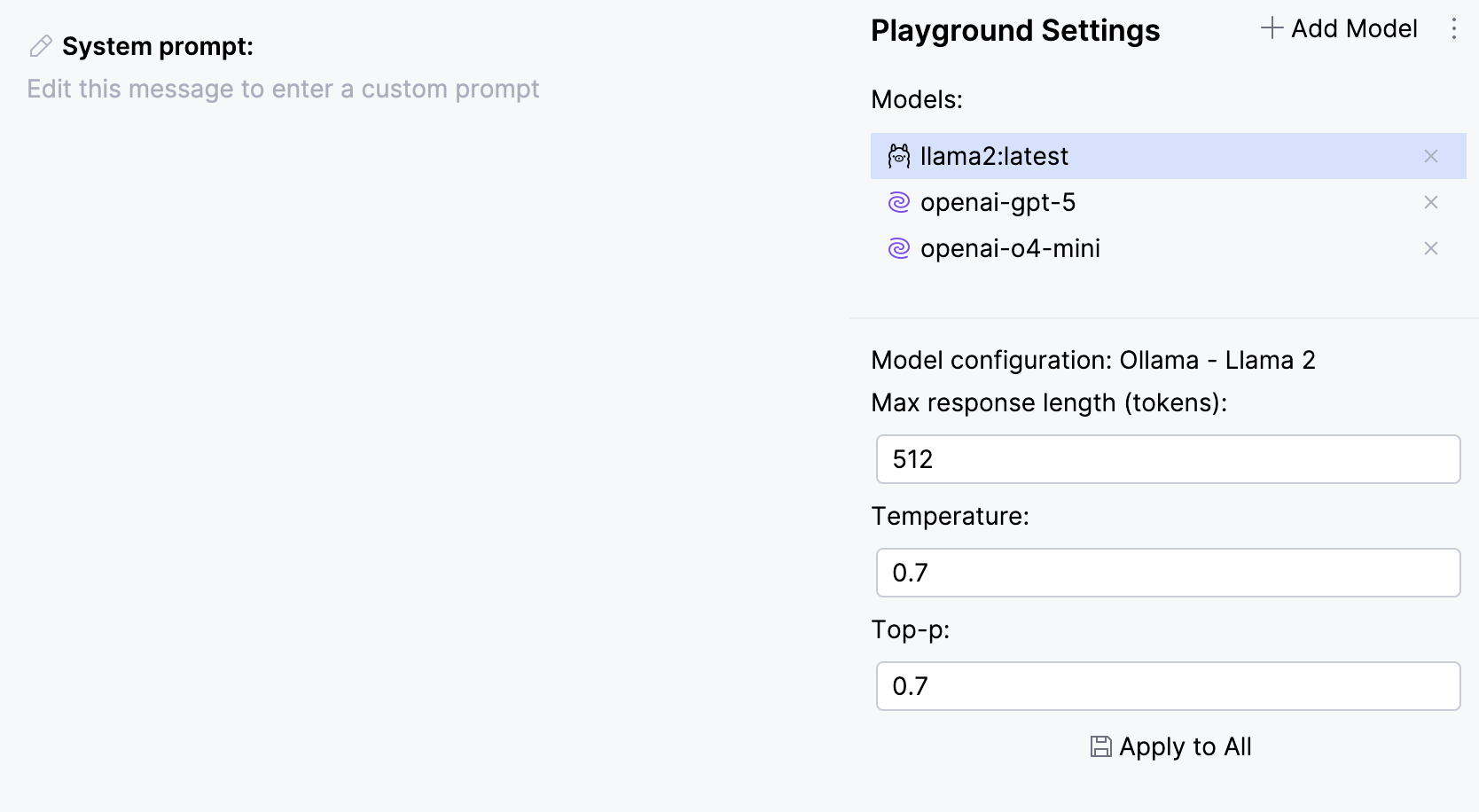

Configure prompt settings

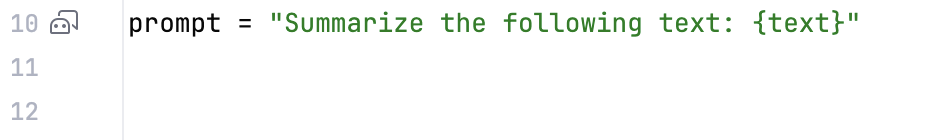

If PyCharm detects a text prompt, a gutter icon will appear next to it in the editor. Click the icon to import the prompt into AI Playground and test it directly.

You can customize settings individually for each model, except for system prompts, which are global across all models.
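Here is a minimal Python data model that shows how shared and per-model settings relate. It assumes one shared system prompt and a separate settings object for each model. The class and field names (`Playground`, `ModelSettings`, `apply_to_all`) are hypothetical. `apply_to_all` mirrors the apply-to-all action described in the settings table: it skips any setting that a given model does not support.

```python
from dataclasses import dataclass, field, replace
from typing import Dict, FrozenSet, Optional


@dataclass
class ModelSettings:
    """Per-model prompt settings (hypothetical field names)."""
    max_tokens: Optional[int] = None
    temperature: Optional[float] = None
    top_p: Optional[float] = None


@dataclass
class Playground:
    system_prompt: str = ""  # shared by every model in the playground
    settings: Dict[str, ModelSettings] = field(default_factory=dict)  # keyed by model name
    supported: Dict[str, FrozenSet[str]] = field(default_factory=dict)  # settings each model accepts

    def apply_to_all(self, source_model: str) -> None:
        """Copy one model's settings to the others, skipping settings a model does not support."""
        src = self.settings[source_model]
        for name in self.settings:
            if name == source_model:
                continue
            allowed = self.supported.get(name, frozenset())
            updates = {k: v for k, v in vars(src).items() if k in allowed}
            self.settings[name] = replace(self.settings[name], **updates)


if __name__ == "__main__":
    pg = Playground(system_prompt="You are a concise code reviewer.")
    pg.settings = {"a": ModelSettings(256, 0.2, 0.9), "b": ModelSettings()}
    pg.supported = {"b": frozenset({"max_tokens", "temperature"})}
    pg.apply_to_all("a")
    print(pg.settings["b"])  # ModelSettings(max_tokens=256, temperature=0.2, top_p=None)
```

The system prompt sits on the playground rather than on `ModelSettings`. Editing it therefore affects every model at once, while the other settings remain independent.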

Available settings

| Setting | Description |
|---|---|
| System prompt | Describe the context or behavior for the model. |
| Max response length (tokens) | Set the maximum number of tokens the model can generate in a response. |
| Temperature | Control randomness: lower values produce more focused outputs, higher values produce more diverse responses. |
| Top-p | Control nucleus sampling: the model chooses only from the smallest set of most likely tokens whose cumulative probability reaches the top-p value, which can improve response relevance. |
| | Apply the current settings to all models. Settings that a model does not support are ignored. |
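Temperature and top-p both reshape the probability distribution from which the model samples its next token. The sketch below shows the standard textbook versions of both operations on a toy set of token scores. Individual providers may implement or combine them differently, and the token names and scores are made up for illustration.

```python
import math
from typing import Dict


def softmax_with_temperature(logits: Dict[str, float], temperature: float) -> Dict[str, float]:
    """Turn raw token scores into probabilities; temperature < 1 sharpens, > 1 flattens."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def top_p_filter(probs: Dict[str, float], top_p: float) -> Dict[str, float]:
    """Keep the smallest set of most likely tokens whose cumulative probability reaches top_p, then renormalize."""
    if not 0 < top_p <= 1:
        raise ValueError("top_p must be in (0, 1]")
    kept: Dict[str, float] = {}
    cumulative = 0.0
    for tok, p in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept[tok] = p
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(kept.values())
    return {tok: p / total for tok, p in kept.items()}


if __name__ == "__main__":
    logits = {"cat": 2.0, "dog": 1.0, "fish": 0.1}
    for t in (0.5, 1.0, 2.0):
        probs = softmax_with_temperature(logits, t)
        print(t, {k: round(v, 3) for k, v in probs.items()})
    # With top_p = 0.8, only the most likely tokens survive and are renormalized.
    print(top_p_filter(softmax_with_temperature(logits, 1.0), 0.8))
```

Running the loop shows the most likely token gaining probability as temperature drops. Top-p then removes the unlikely tail from whatever distribution the temperature produced.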