Configure Big Data Tools environment

Before you start working with Big Data Tools, you need to install the required plugins and configure connections to servers.

Install the required plugins

Whatever you do in DataGrip, you do it in a project, so open an existing project or create a new one.

Press Ctrl+Alt+S to open settings and then select .

Install the Big Data Tools plugin.

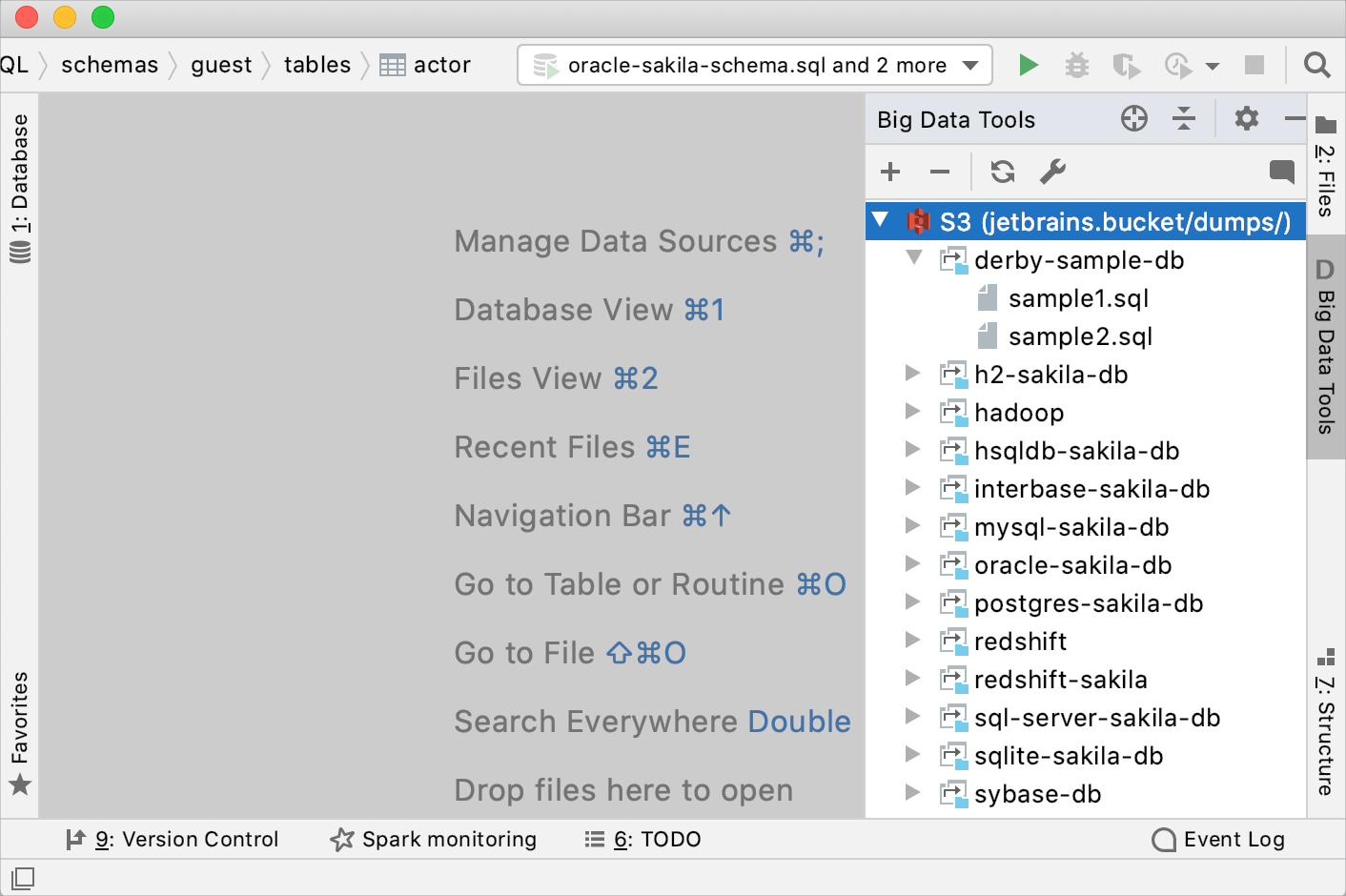

Restart the IDE. After the restart, the Big Data Tools tool window appears in the rightmost group of tool windows. Click its icon to open the Big Data Tools window.

You can now select a tool to work with:

17 June 2024