Custom Spark cluster

In the Spark Submit run configuration, you can use AWS EMR or Dataproc as remote servers to run your applications. You can also configure your own custom Spark cluster: set up an SSH configuration to connect to a remote server and, optionally, configure a connection to a Spark History server and an SFTP connection.

Create a Custom Spark cluster

In the Big Data Tools window, click the add icon and select Custom Spark Cluster.

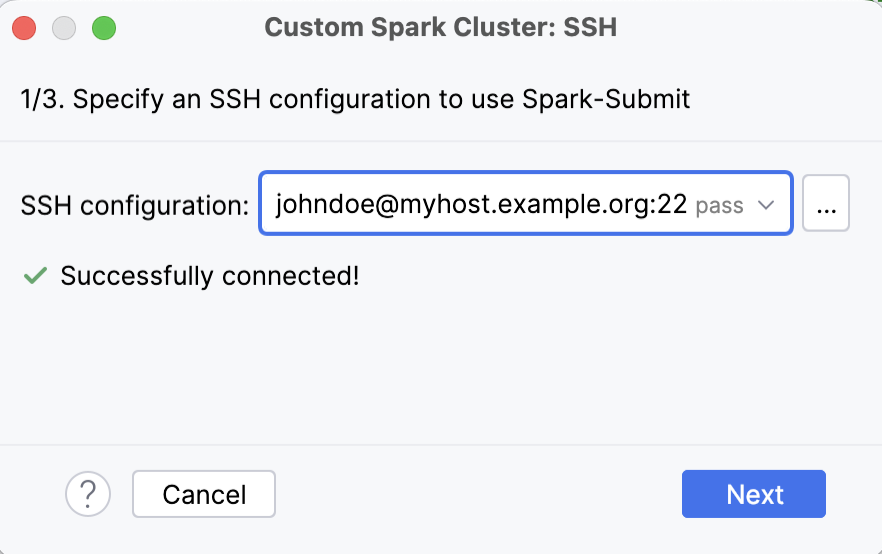

In the first step of the wizard that opens, select an SSH configuration and click Next. The IDE uses this SSH configuration to connect to the server where spark-submit is installed.

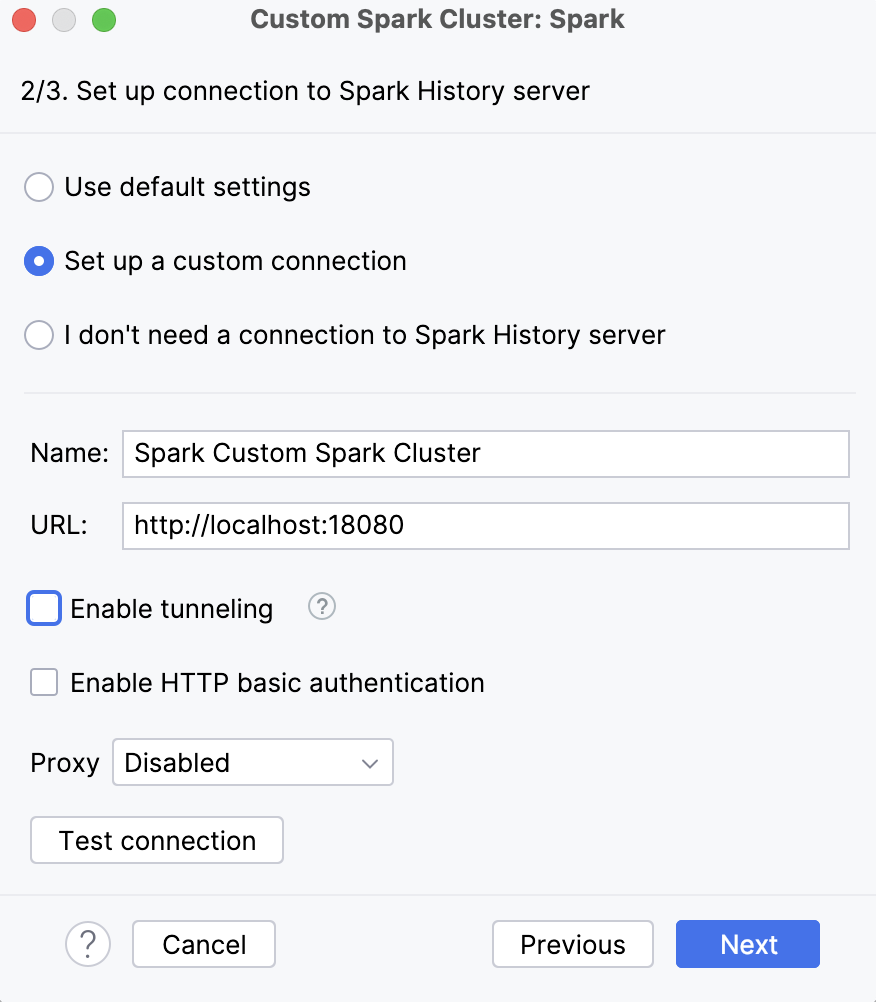
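To illustrate what "a server on which spark-submit is installed" means in practice, the sketch below builds an `ssh` command that runs spark-submit on that remote host. This is not what the IDE executes internally; it is a minimal sketch assuming key-based SSH access. The helper name `build_remote_submit`, the host, the master URL, and the application path are hypothetical placeholders. `--master` and `--deploy-mode` are standard spark-submit options, and `client` is Spark's default deploy mode.

```python
import shlex
from typing import List, Sequence


def build_remote_submit(ssh_target: str, master: str, app_path: str,
                        deploy_mode: str = "client",
                        app_args: Sequence[str] = ()) -> List[str]:
    """Return an argv list that runs spark-submit on a remote host over SSH.

    Hypothetical helper: ssh_target, master and app_path are placeholders
    for your own SSH host, cluster master URL and application file.
    """
    remote = ["spark-submit", "--master", master, "--deploy-mode", deploy_mode,
              app_path, *app_args]
    # ssh hands the command string to the remote shell, so quote it exactly once.
    return ["ssh", ssh_target, shlex.join(remote)]


cmd = build_remote_submit("spark@driver.example.com", "yarn",
                          "/opt/jobs/wordcount.py", app_args=["s3://bucket/input"])
print(cmd)
# To actually run it: subprocess.run(cmd, check=True)
```

Returning an argv list instead of a single string keeps local quoting out of the picture; only the remote part is joined with `shlex.join`, which quotes arguments only when they contain shell-special characters.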

If you want to monitor Spark jobs in the IDE, specify the parameters for connecting to a Spark History server in the second step of the wizard. Enter custom parameters or keep the default settings, which create a connection to localhost:18080 through SSH tunneling. Otherwise, select I don't need a connection to Spark History server.

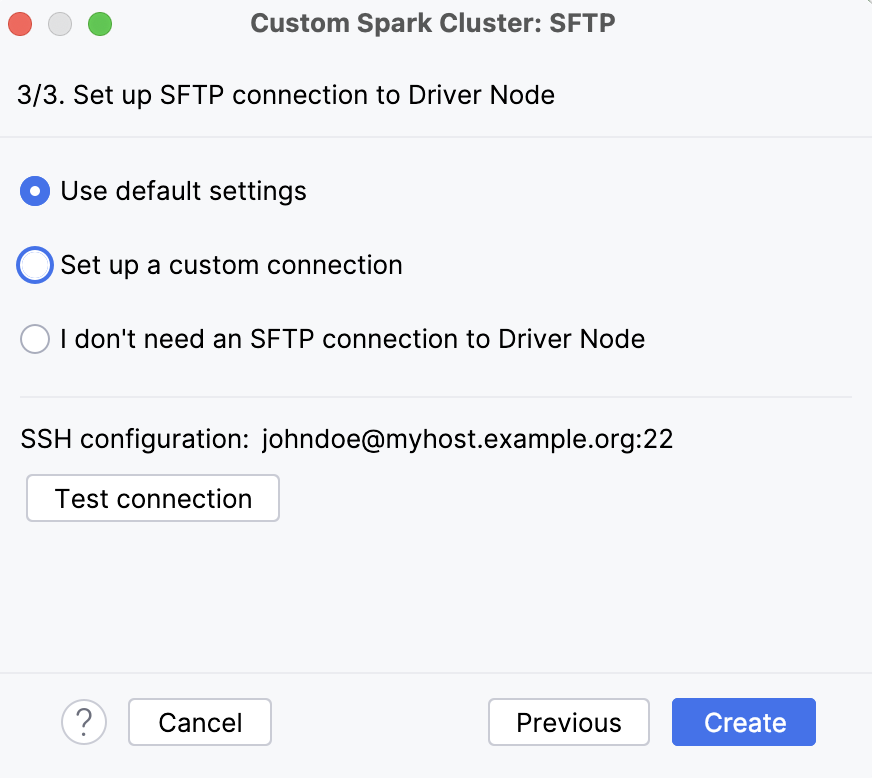
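The default setting relies on an SSH tunnel: port 18080 on your machine is forwarded to the History server, so localhost:18080 reaches it. The sketch below shows roughly what that amounts to, assuming the History server runs on the SSH host itself on its default port 18080. It builds an equivalent `ssh -N -L` command and queries `GET /api/v1/applications`, an endpoint of Spark's monitoring REST API. The helper names `tunnel_command`, `list_applications`, and `summarize` are hypothetical, and the IDE's own tunneling may work differently.

```python
import json
import urllib.request
from typing import Any, Dict, List

# Local end of the tunnel; matches the wizard's default localhost:18080.
HISTORY_URL = "http://localhost:18080"


def tunnel_command(ssh_target: str, local_port: int = 18080,
                   remote_port: int = 18080) -> List[str]:
    # -N: open the tunnel without running a remote command.
    # -L: forward local_port to port remote_port on the SSH host itself
    #     (assumes the History server listens there).
    return ["ssh", "-N", "-L", f"{local_port}:localhost:{remote_port}", ssh_target]


def list_applications(base_url: str = HISTORY_URL) -> List[Dict[str, Any]]:
    # Requires the tunnel to be open; returns the parsed JSON list of applications.
    with urllib.request.urlopen(f"{base_url}/api/v1/applications", timeout=10) as resp:
        return json.load(resp)


def summarize(apps: List[Dict[str, Any]]) -> List[str]:
    # An application counts as completed only if all of its attempts completed.
    lines = []
    for app in apps:
        attempts = app.get("attempts", [])
        done = bool(attempts) and all(a.get("completed", False) for a in attempts)
        lines.append(f"{app['id']} {app['name']} {'completed' if done else 'running'}")
    return lines
```

With the tunnel running in another terminal (for example, started with `subprocess.Popen(tunnel_command("spark@driver.example.com"))`, where the host is a placeholder), `summarize(list_applications())` prints one line per application known to the History server.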

If you need an SFTP connection to your Spark cluster, specify its settings in the third step of the wizard.

Otherwise, select I don't need an SFTP connection to Driver Node.

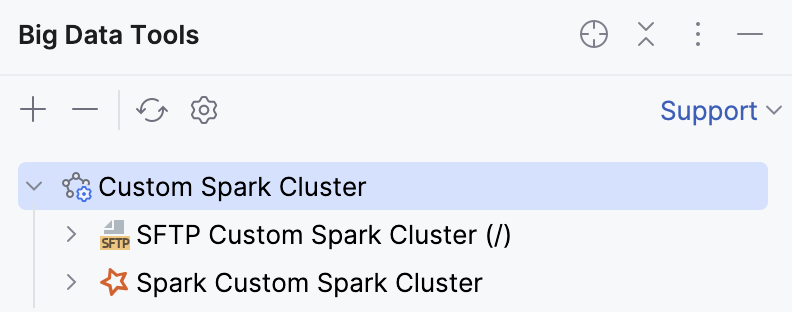

Any Spark History and SFTP connections you've set up will be available under Custom Spark Cluster in the Big Data Tools tool window.

You can now select this cluster as a remote target in the Spark Submit run configuration. When you launch this run configuration, you'll be able to open the Spark job in the Services tool window by clicking the link in the application output.