Job Settings

Jobs contain individual build steps that run sequentially. This article covers common settings that control how the sequence is executed.

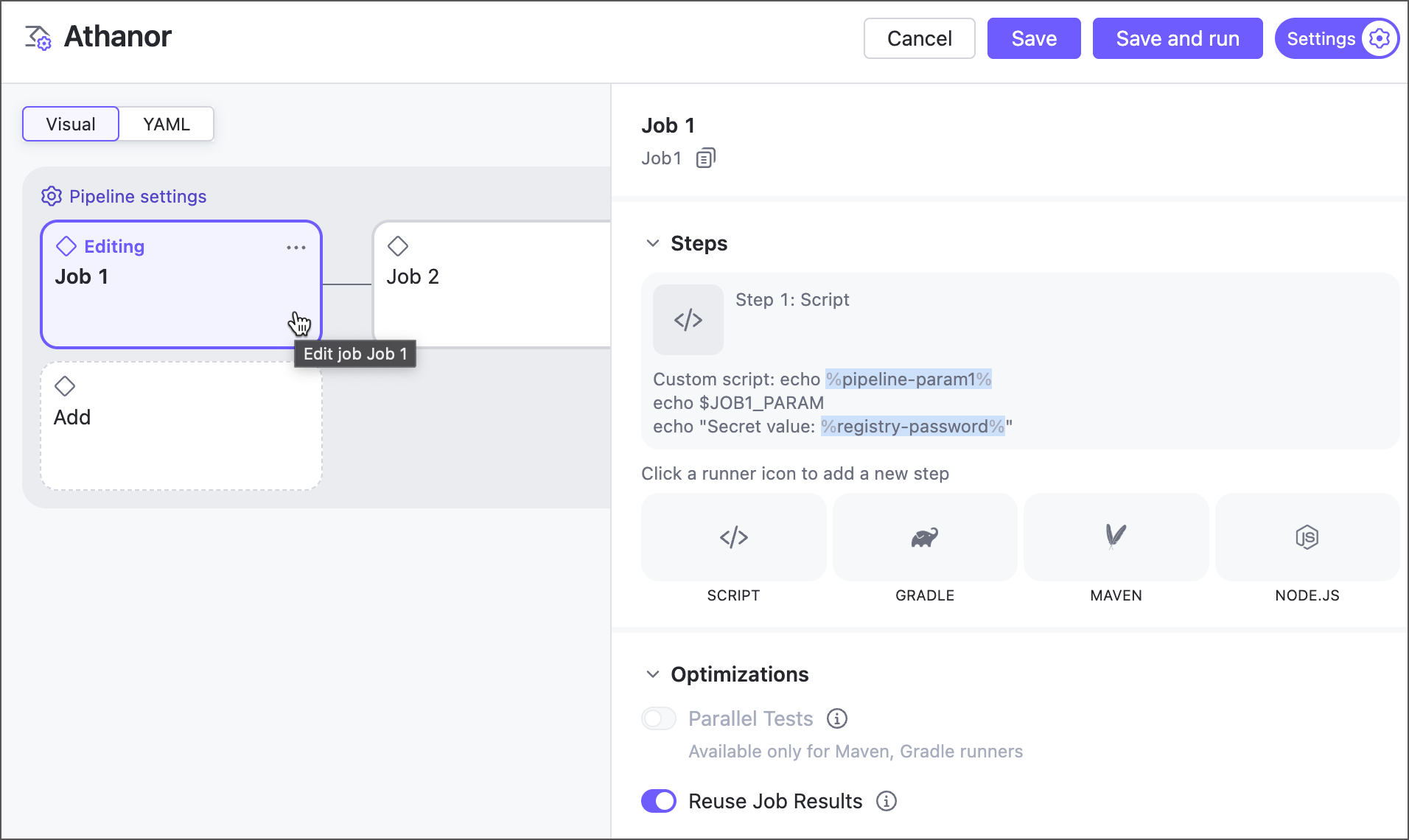

Edit Job Settings

To view and edit job settings, click the Settings toggle in the top right corner, then click any job tile (or the "Add" tile to create a new job).

You can also switch from the visual editor to the code view and edit the YAML markup directly.

Steps

Use this section to define what the job does, such as building and testing projects, running custom scripts, uploading Docker images, and so on.

Pipelines currently support four types of steps. All of them are lightweight versions of the corresponding classic build configuration steps.

Script

This is a universal step that executes commands directly in the agent machine terminal. As a result, you can interact with any tool installed on the agent: cURL, Python, MSBuild, Homebrew, and so on.

For example, the following step downloads artifacts produced by a target build configuration:
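A minimal sketch of such a step, written with the YAML keys used elsewhere in this article. The server URL, the build configuration ID (MyBuildConfiguration), the artifact name (archive.zip), and the bearer_token parameter are hypothetical placeholders. The request goes through the TeamCity REST API and assumes that token-based authentication is available on your server.

```yaml
jobs:
  Job1:
    name: Download artifacts
    steps:
      # Hypothetical values: replace the server URL, the build configuration ID,
      # and the artifact path with your own. The bearer_token parameter is
      # assumed to store a TeamCity access token.
      - type: script
        script-content: |-
          curl --fail --location \
            --header "Authorization: Bearer %bearer_token%" \
            --output archive.zip \
            "https://teamcity.example.com/app/rest/builds/buildType:(id:MyBuildConfiguration),status:SUCCESS/artifacts/content/archive.zip"
```

The --fail flag makes cURL return a non-zero exit code on HTTP errors, so a missing artifact or a rejected token fails the step instead of silently saving an error page.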

Gradle

Tailored for interacting with the Gradle build tool, this step can build, test, and package Java, Kotlin, Groovy, Scala, Swift, and other projects.

Maven

The Maven build step is designed to process Java, Kotlin, Groovy, and other projects using Apache Maven.

Optimizations

This section covers settings to significantly speed up pipeline runs, saving time, resources, and, for cloud agents, infrastructure costs.

Parallel Tests — Allows Maven and Gradle steps to split test suites into batches, spawning N virtual builds running in parallel on separate build agents.

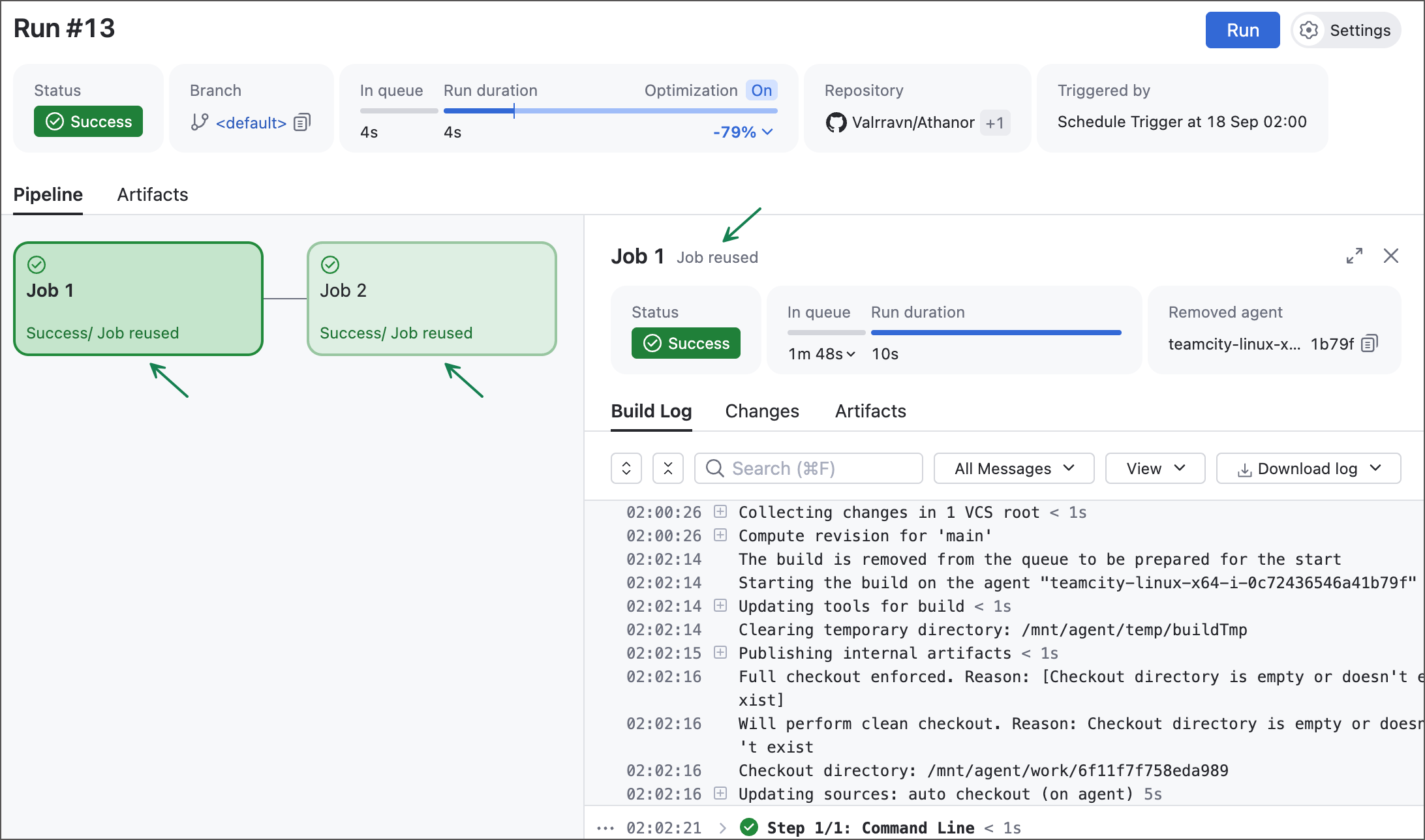

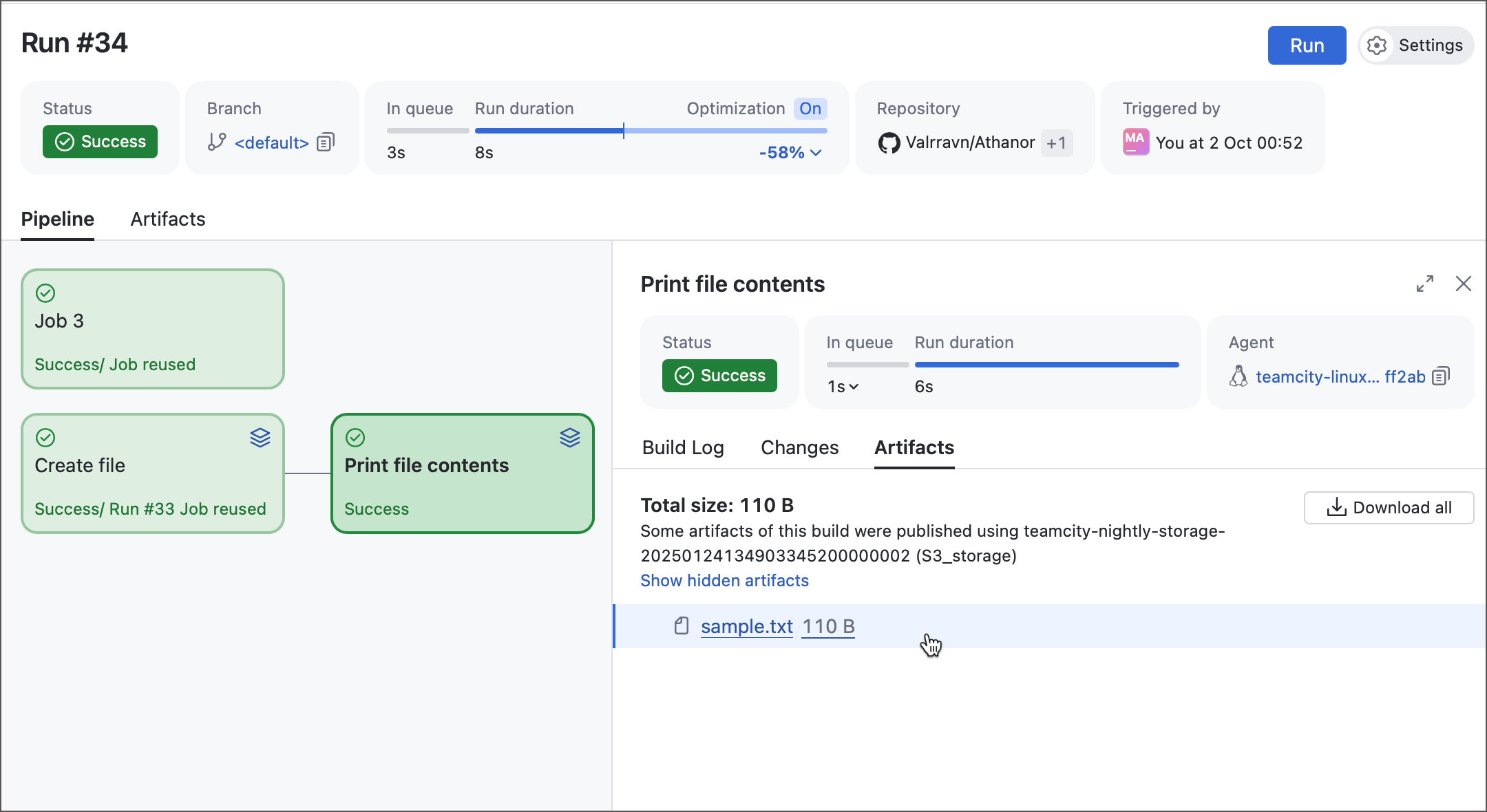

Reuse Job Results — If no enabled repositories contain new changes, TeamCity skips re-running the job and reuses artifacts, status, and results from a previous run. This ensures only jobs affected by recent changes are executed.

Reused jobs are explicitly marked in the UI to avoid any confusion.

Notice the "Optimization" tile at the top: TeamCity completed this run nearly five times faster than the previous one, with reused runs saving almost 80% of the last run’s duration.

Agent Requirements

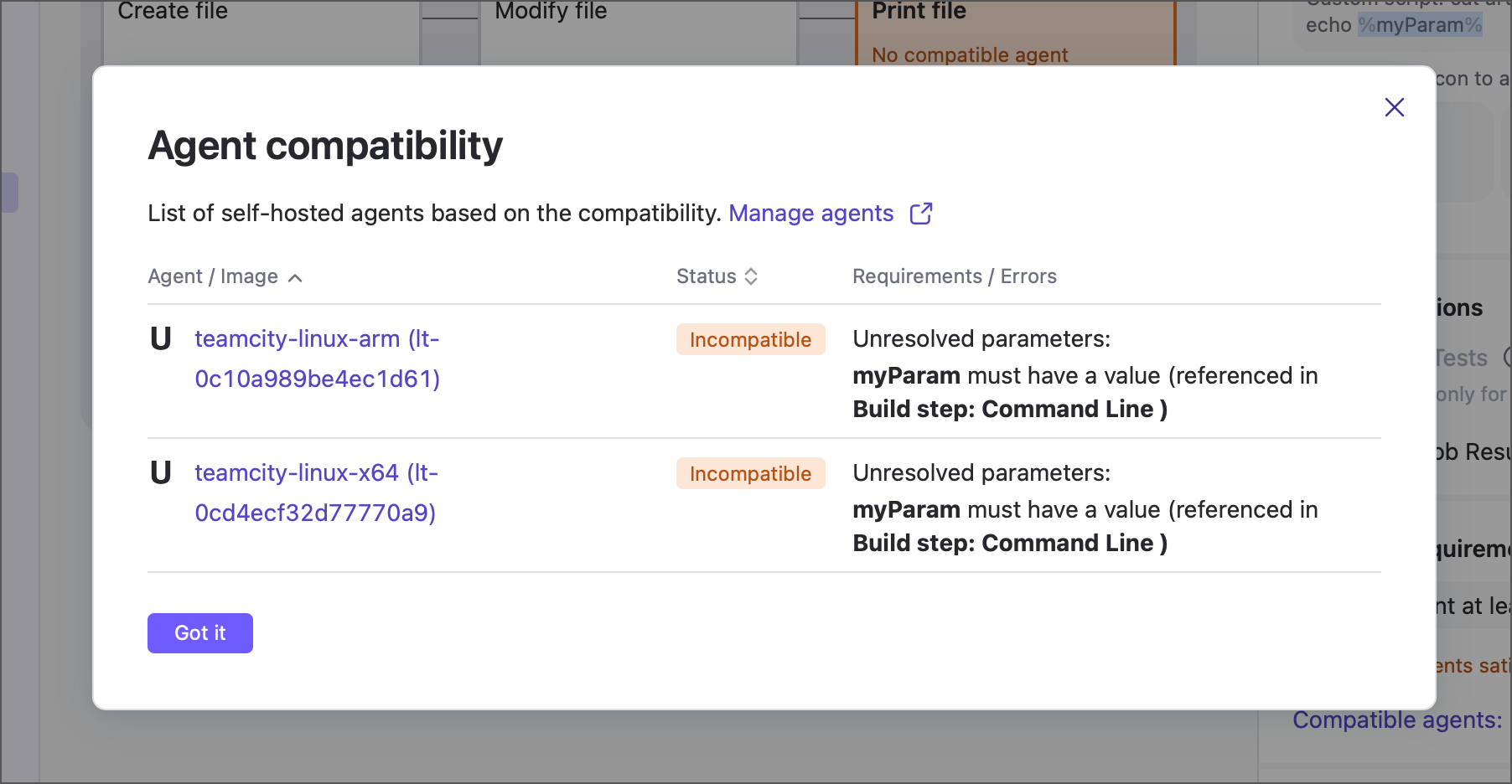

TeamCity automatically tracks agent software to ensure queued runs are assigned only to compatible agents. For example, if a Maven step must run in a container, agents without Docker or Podman are marked incompatible.

Similarly, if a job uses a parameter that is not defined in either pipeline or job Parameters sections, TeamCity checks the agent machine as the last remaining potential source of this parameter value. For example, if the command-line step runs echo %myParam% with an unknown parameter reference, only agents with a non-empty "myParam" parameter can run the job.
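The implicit requirement described above can be reproduced with a job like the following sketch. It uses only keys shown elsewhere in this article, and myParam is deliberately left undefined at both the pipeline and job level.

```yaml
jobs:
  Job1:
    name: Print agent parameter
    steps:
      # myParam is not declared in any Parameters section, so the only
      # remaining source for its value is the agent that runs the job.
      - type: script
        script-content: echo %myParam%
```

Adding myParam to the pipeline or job parameters should remove this implicit requirement, since the value no longer has to come from the agent.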

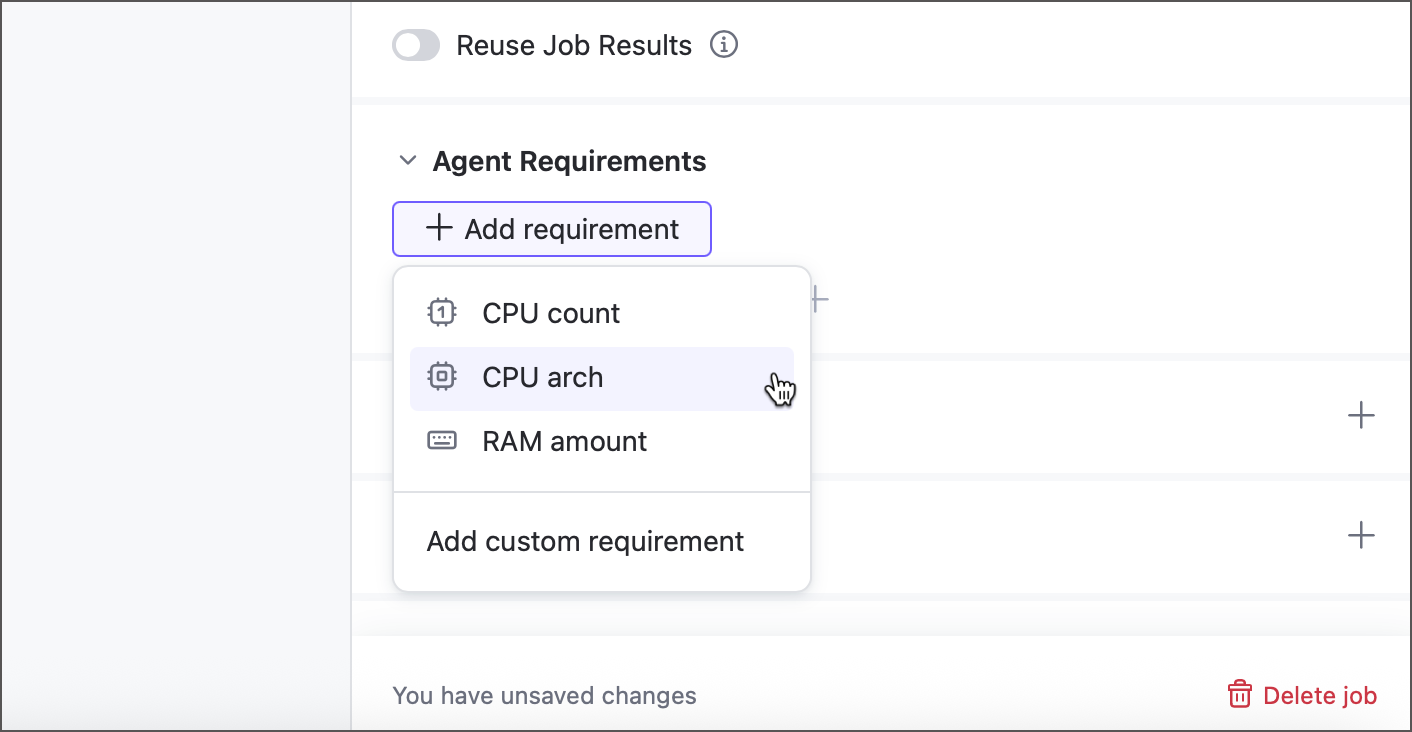

The Agent requirements section allows you to define extra conditions for eligible agents, such as names, hardware specs, or installed tools.

TeamCity displays ready-to-use options for most basic agent hardware requirements: the number of CPU cores, the total amount of agent memory, and the CPU architecture.

Click Add custom requirement to define your own requirements. Each requirement is an <agent.parameter> <operator> [value] expression. TeamCity evaluates these expressions for each authorized agent, marking agents that return "true" as eligible to run the job and labeling the rest as incompatible.

- Agent parameter

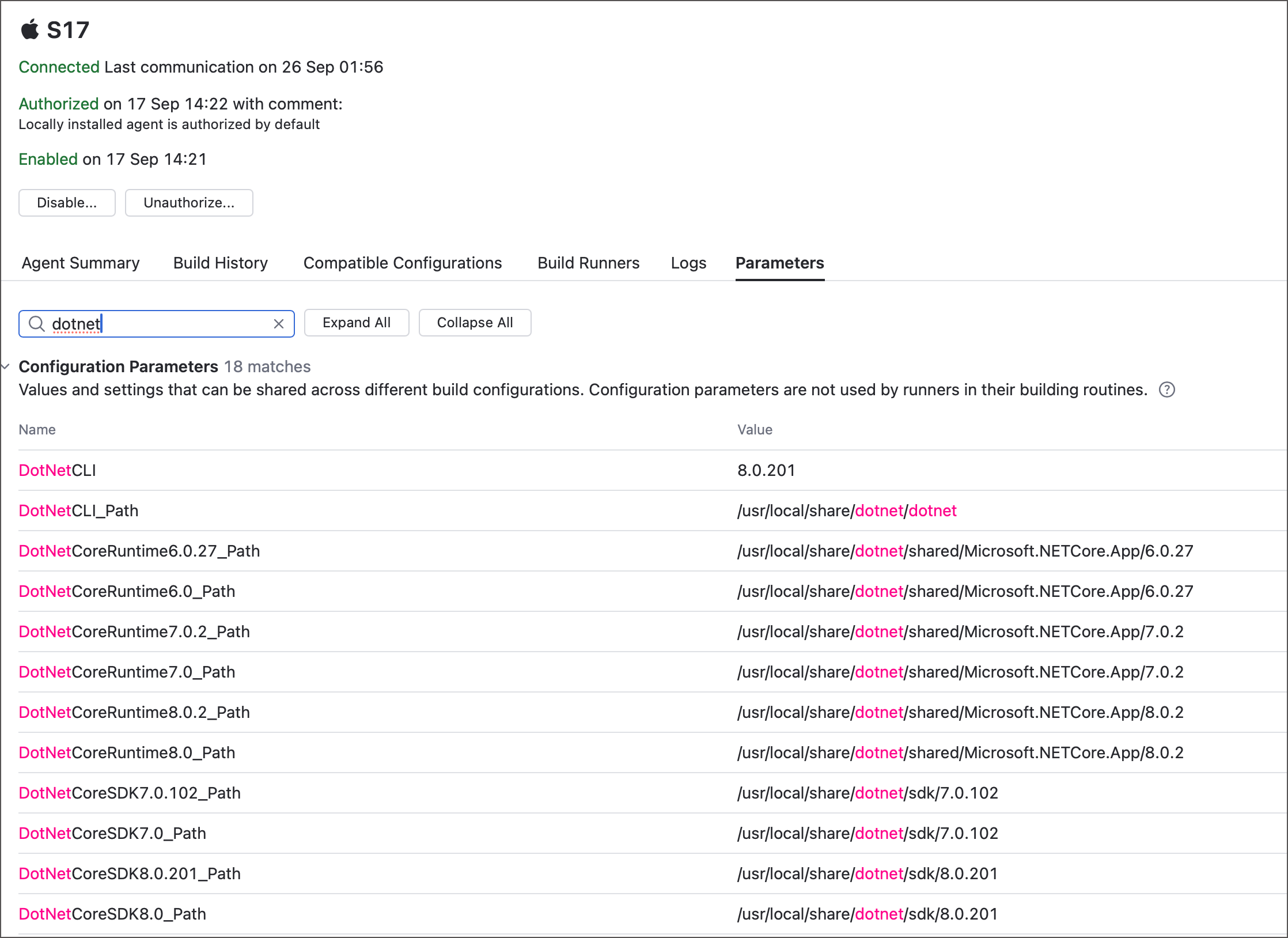

A parameter reported by the agent machine whose value must match the required criteria. Below are a few examples of various agent parameters:

teamcity.agent.jvm.os.arch: reports the agent machine architecture. For example, aarch64 for macOS agents running on Apple ARM devices.

env.ANDROID_SDK_HOME: returns the path to the Android SDK installed on the agent machine. For example, /home/builduser/android-sdk-linux.

teamcity.agent.jvm.user.timezone: stores the timezone of the agent machine. For example, Etc/UTC.

MonoVersion: returns the version of the Mono platform. For example, 6.12.0.200.

Navigate to Agents | <TeamCity_Agent> | Agent Parameters tab to check what parameters agents report and find those that store agent hardware and software data.

See also: List of Predefined Build Parameters.

- Operator

The logical operator used to compare the actual agent parameter value with the given one. For example, "less than", "starts with", "contains", and so on.

See also: Requirement Conditions

- Value

A custom value to compare against the agent's parameter value. The only operator that does not require a value is exists, which checks whether the agent reports the required parameter, no matter what actual value it has.

The following YAML sample defines three requirements: 16 GB of RAM, at least 10 GB of free disk space, and Python 3 installed. Standard TeamCity requirements use the shorter alias: value syntax, while custom ones use the complete <parameter> <operator> [value] expressions (with an extra name parameter for the public title).

Parameters

Parameters are name-value pairs designed to substitute raw values with references. When TeamCity encounters a parameter reference (%param-name%), it substitutes it with the actual parameter value.

TeamCity supports two layers of parameters: pipeline parameters and job parameters.

Pipeline parameters are designed to be accessible from any individual job owned by this pipeline.

```yaml
jobs:
  Job1:
    name: Job 1
    steps:
      - type: script
        script-content: echo %pipeline-param1%
  Job2:
    name: Job 2
    dependencies:
      - Job1
    steps:
      - type: script
        script-content: echo %pipeline-param2%
parameters:
  pipeline-param1: foo
  pipeline-param2: bar
```

Typically, these are configuration parameters (without the env. prefix in their names). They are most commonly used to store values shared by multiple jobs or to quickly alter global pipeline settings.

Job input parameters are typically environment variables (with the env. prefix in their names) available only to this specific job. To pass such a value to another job, you need to reference it inside an output parameter.

The sample below shows a pipeline-level secret parameter bearer_token and a job-level environment variable env.SERVER_URL used inside a command-line step. Note that parameters with the env. prefix can be referenced via the regular TeamCity %param_name% syntax or accessed in scripts like native agent variables ($param_name).
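The two ways of reading an env. parameter can be sketched as follows. This is an illustration rather than a complete sample: it assumes that job-level parameters are declared under the same parameters key as pipeline-level ones, the URL is a placeholder, and the secret bearer_token parameter is left out because this article does not show how secrets are declared in YAML.

```yaml
jobs:
  Job1:
    name: Call server
    # Assumption: job-level parameters use the same "parameters" key
    # as the pipeline-level block.
    parameters:
      env.SERVER_URL: https://example.com
    steps:
      # The first command uses a TeamCity reference that is resolved before
      # the script starts. The second one reads the same value as a regular
      # environment variable when the shell runs the script.
      - type: script
        script-content: |-
          echo "TeamCity reference: %env.SERVER_URL%"
          echo "Shell variable: $SERVER_URL"
```

Note that the environment variable name drops the env. prefix: the env.SERVER_URL parameter becomes $SERVER_URL inside the script.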

Outputs

This section explains how jobs can share the results of their runs, including calculated values and generated files.

Files

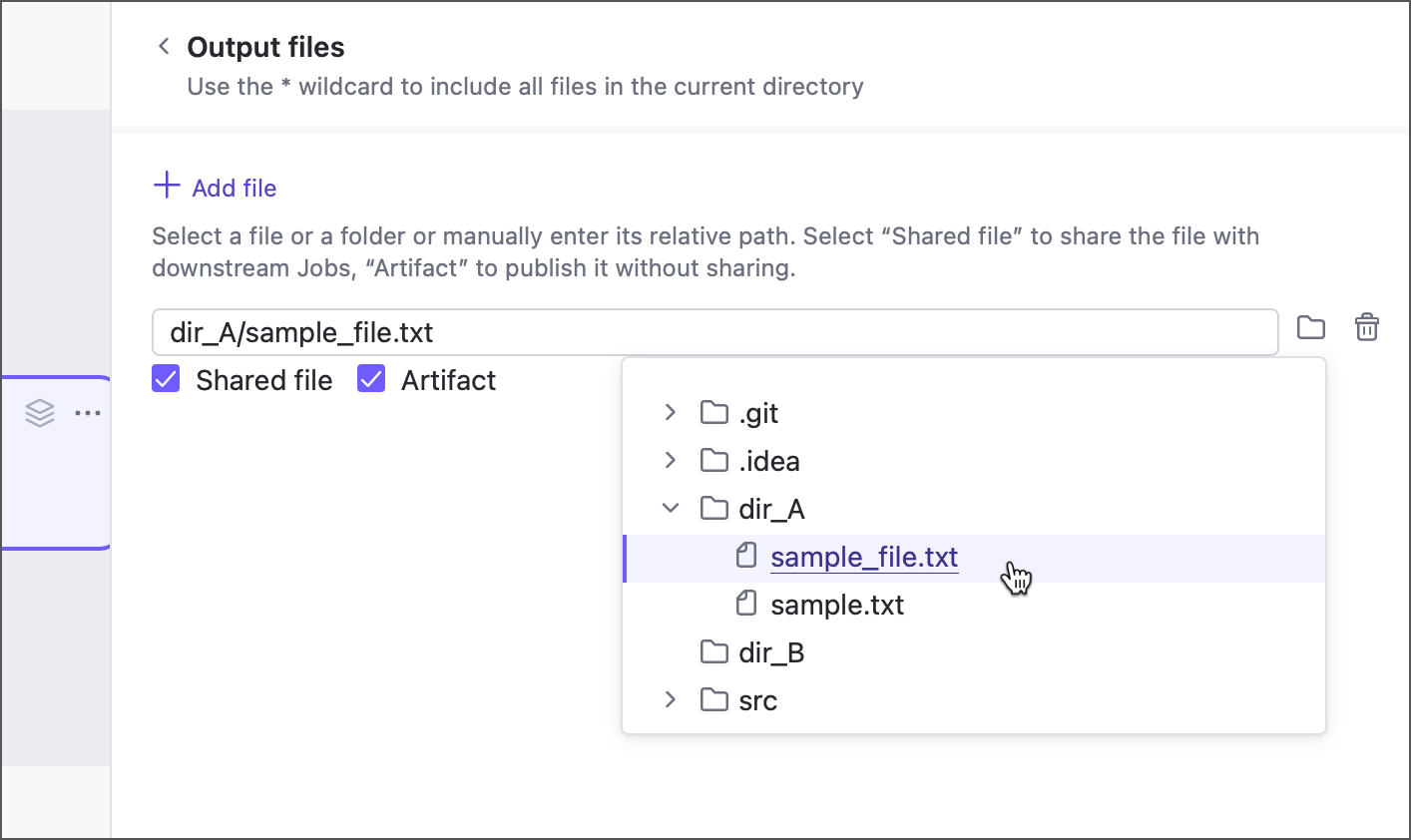

Files shared by a job can serve as artifacts, internal files for downstream jobs, or both.

- Artifacts

Artifacts are files displayed on the Artifacts tab of the run results page. Users with permission to view the project can download these files to local storage.

You can view artifacts in two ways:

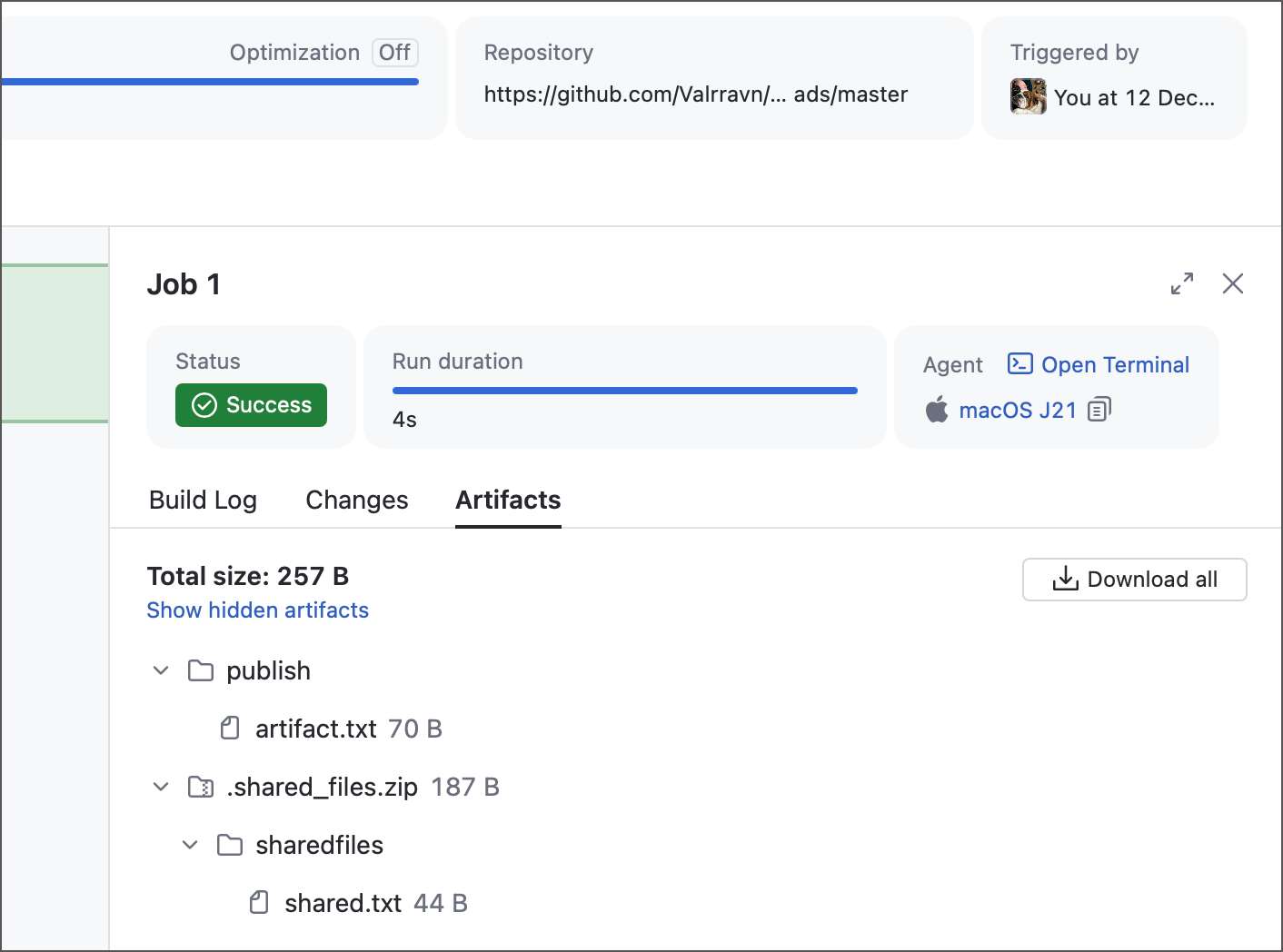

On the run results page, open the Artifacts tab to see all artifacts published by jobs in the pipeline.

From the same page, select a job to open its side panel, then switch to the Artifacts tab to view artifacts produced by that specific job.

- Shared files

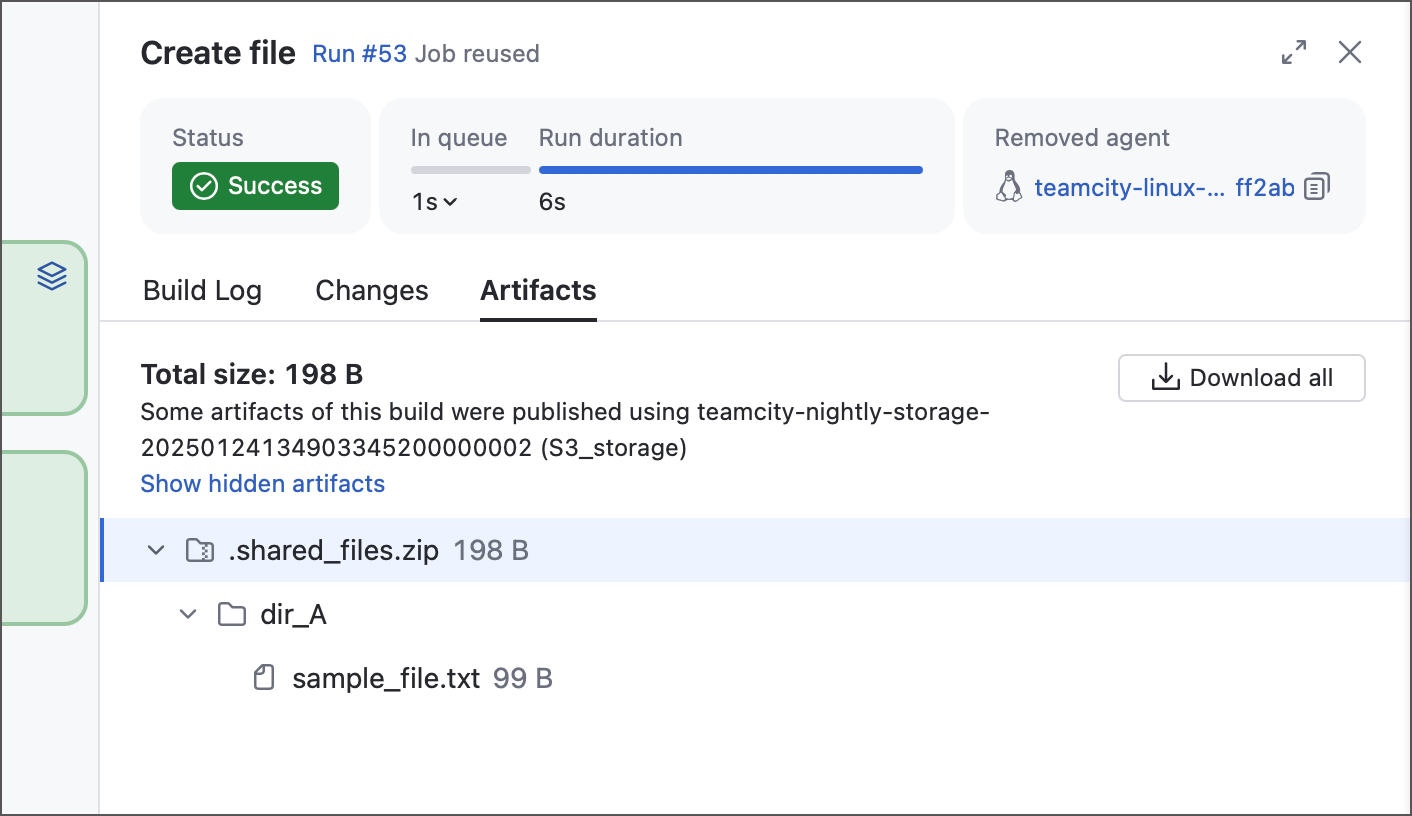

Shared files are passed down the pipeline to subsequent jobs. These are typically internal files or files that are not yet finalized.

Unlike artifacts, shared files are not displayed on the main Artifacts tab of the run results page. However, they show up on the Artifacts tab of the job side panel, packed into a hidden .shared_files.zip archive.

The YAML example below shows one job that creates and modifies a file, and a second job that imports the file and prints its contents. "Job 2" then publishes the file as an artifact.

```yaml
jobs:
  Job1:
    name: Create file
    steps:
      - type: script
        script-content: |-
          touch sample.txt
          echo "File created by Job 1, build #%tc.build.number%" >> sample.txt
    files-publication:
      - path: sample.txt
        share-with-jobs: true
        publish-artifact: false
  Job2:
    name: Print file contents
    dependencies:
      - Job1
    steps:
      - type: script
        script-content: cat sample.txt
    files-publication:
      - path: sample.txt
        share-with-jobs: false
        publish-artifact: true
```

These two types are not mutually exclusive: when adding an output file, you can tick both Shared file and Artifact checkboxes.

Note that shared files retain their parent directory hierarchy, whereas artifacts do not. The following sample illustrates a job that produces two files, both in their related folders.

Despite the almost identical step scripts and files-publication rules, the results slightly differ. Shared files are placed into the hidden ".shared_files.zip" archive along with their parent folders, whereas artifacts are grouped under the "publish" directory as is.
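A job of this kind could look like the sketch below. It is not the original sample: the folder and file names are hypothetical, and only the files-publication keys shown earlier in this section are used. One file is shared with downstream jobs and the other is published as an artifact, so you can compare how each ends up on the Artifacts tabs.

```yaml
jobs:
  Job1:
    name: Produce files
    steps:
      - type: script
        script-content: |-
          mkdir -p reports logs
          echo "summary" > reports/summary.txt
          echo "log entry" > logs/build.log
    files-publication:
      # Shared with downstream jobs only
      - path: reports/summary.txt
        share-with-jobs: true
        publish-artifact: false
      # Published as an artifact only
      - path: logs/build.log
        share-with-jobs: false
        publish-artifact: true
```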

Output parameters

Jobs can work with two types of parameters: input and output.

Input parameters are name–value pairs that a job uses during its run. See the Parameters section for more information.

Output parameters store values that a job passes down the pipeline to other jobs.

Output parameters are designed as a separate entity to prevent surprise breakages across pipelines. For example, changing (or removing) a parameter in "Job A" might unexpectedly break "Job B" if it depends on that parameter. Marking a parameter as output signals that it may be used elsewhere, so check for dependencies before changing it.

The YAML example below defines a pipeline with two jobs that illustrate this concept:

- Job 1 uses the env.INPUT parameter to calculate a value.

- The job’s script sends the setParameter service message to write this value to the result_param input parameter.

- That parameter is then mapped to the output_param output parameter.

- Job 2 retrieves this output parameter using the job.<source_job_ID>.<output-parameter-name> syntax.

By following this pattern, you can separate parameters used only within a job from those explicitly shared across the pipeline.
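The script side of this pattern can be sketched as shown below. The sketch is intentionally incomplete: the definition of env.INPUT and the mapping of result_param to output_param are omitted, because this article does not show their YAML keys, so configure them in the job's Parameters and Outputs sections. The calculation (doubling the input) is a hypothetical placeholder.

```yaml
jobs:
  Job1:
    name: Calculate value
    steps:
      # Reads env.INPUT as the INPUT shell variable, then writes the result
      # to result_param via the setParameter service message.
      - type: script
        script-content: |-
          RESULT=$((INPUT * 2))
          echo "##teamcity[setParameter name='result_param' value='$RESULT']"
  Job2:
    name: Use value
    dependencies:
      - Job1
    steps:
      # Assumes Job1 exposes result_param as the output_param output parameter.
      - type: script
        script-content: echo %job.Job1.output_param%
```

Job2 refers only to output_param, so the internal result_param name can be changed in Job1 without touching downstream jobs, as long as the mapping is updated.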

Repository

This section allows you to select which remote repositories this job should check out. To add a repository, create a new entry in the Repositories section of pipeline settings.

By default, sources are checked out into a subfolder of the agent work directory. To ensure agents do not constantly lose the sources of one job when running another, this subfolder has an auto-generated name unique to each job (for example, /mnt/agent/work/6fa95896c6cadf54).

You can specify a custom directory for checked-out sources via the corresponding option of a Repository section item. The path to the checkout directory can be absolute; however, it is highly recommended to use either relative paths (MyCustomFolder) or paths that reference predefined TeamCity parameters (%teamcity.agent.work.dir%/MyCustomFolder).

The diagram below outlines the relations between the core directories involved in the build process.

Refer to the following articles to learn more:

Agent Home Directory: the installation directory of a build agent.

Agent Work Directory: the subfolder of the agent home directory that stores build-related files.

Build Checkout Directory: the subfolder of the agent work directory where all checked-out sources are downloaded.

Build Working Directory: the directory where a build step starts (the same as the build checkout directory by default).

Integrations

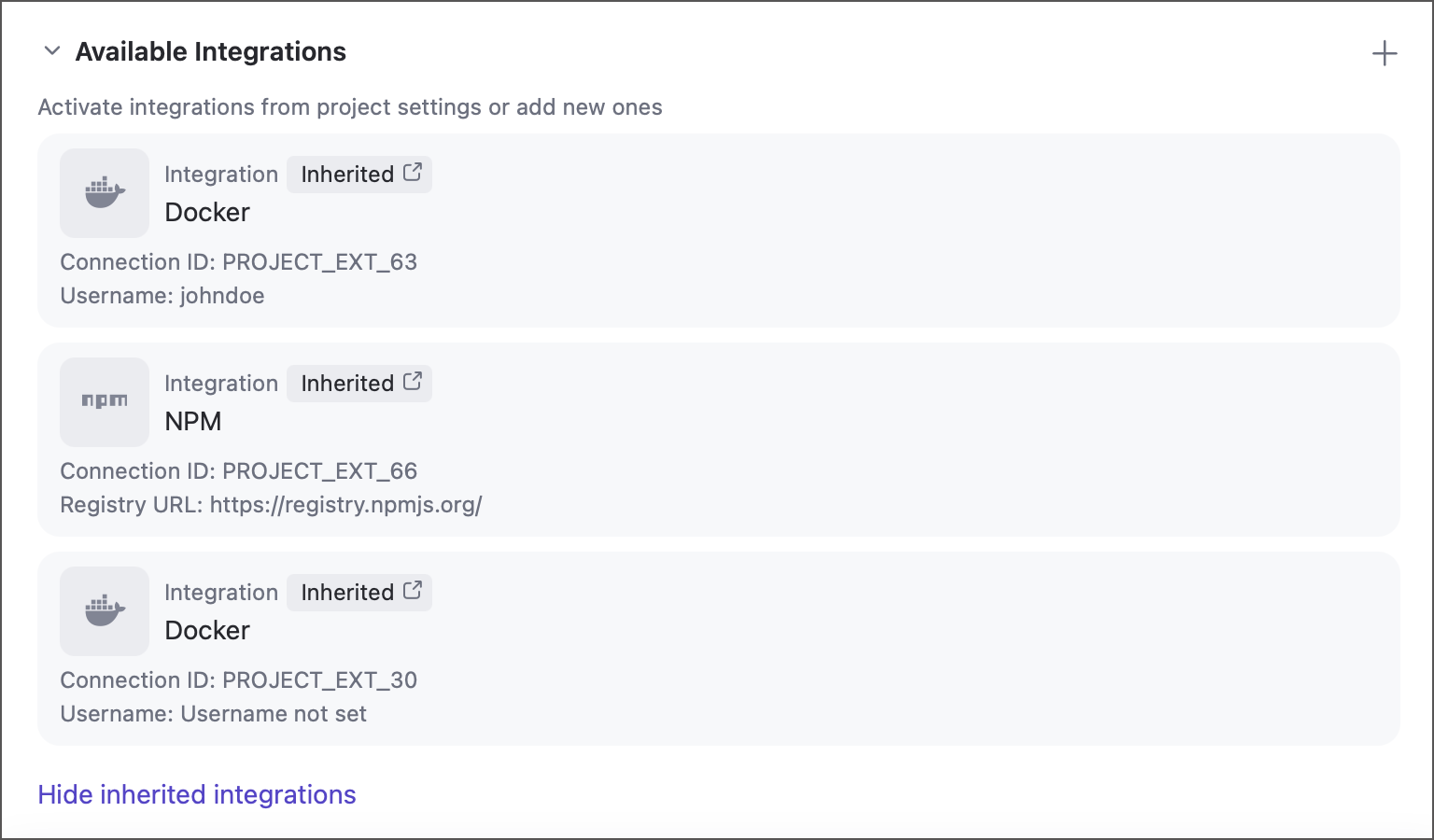

Both pipeline and job settings panels include an Integrations section for connecting to private Docker and NPM registries.

In the pipeline settings, you manage the full list of available integrations for jobs.

In job settings, toggles let you select which registries the job should log in to automatically, ensuring build steps can access the required data.

Classic TeamCity build configurations support this functionality via the "connection + build feature" combinations:

Docker Registry connection and Docker Registry Connections build feature for Docker.

NPM Registry connection and the related build feature for Node.js registries.

If you already have a Docker or NPM connection in a project, a pipeline shows it under its "Integrations" section.

These inherited integrations cannot be edited directly via the pipeline settings panel. To change them, modify the original connection in the project settings.