Automated Code Review versus Manual Code Review: Why Smart Teams Use Both

But don’t rely on manual code reviews alone

Manual review is rarely quick. In large projects, a single pull request might take hours to review thoroughly, which can delay delivery. And because it’s a human process, it’s vulnerable to oversight: even the most experienced reviewers can miss subtle issues, especially when reviewing repetitive boilerplate or working under tight deadlines.

Microsoft’s research report, Expectations, Outcomes, and Challenges of Modern Code Review, puts it this way: “There is a mismatch between the expectations and the actual outcomes of code reviews. From our study, review does not result in identifying defects as often as project members would like and even more rarely detects deep, subtle, or ‘macro’-level issues.”

Consistency is another challenge. Different reviewers may have different priorities or tolerances for “good enough” code, leading to uneven quality standards over time.

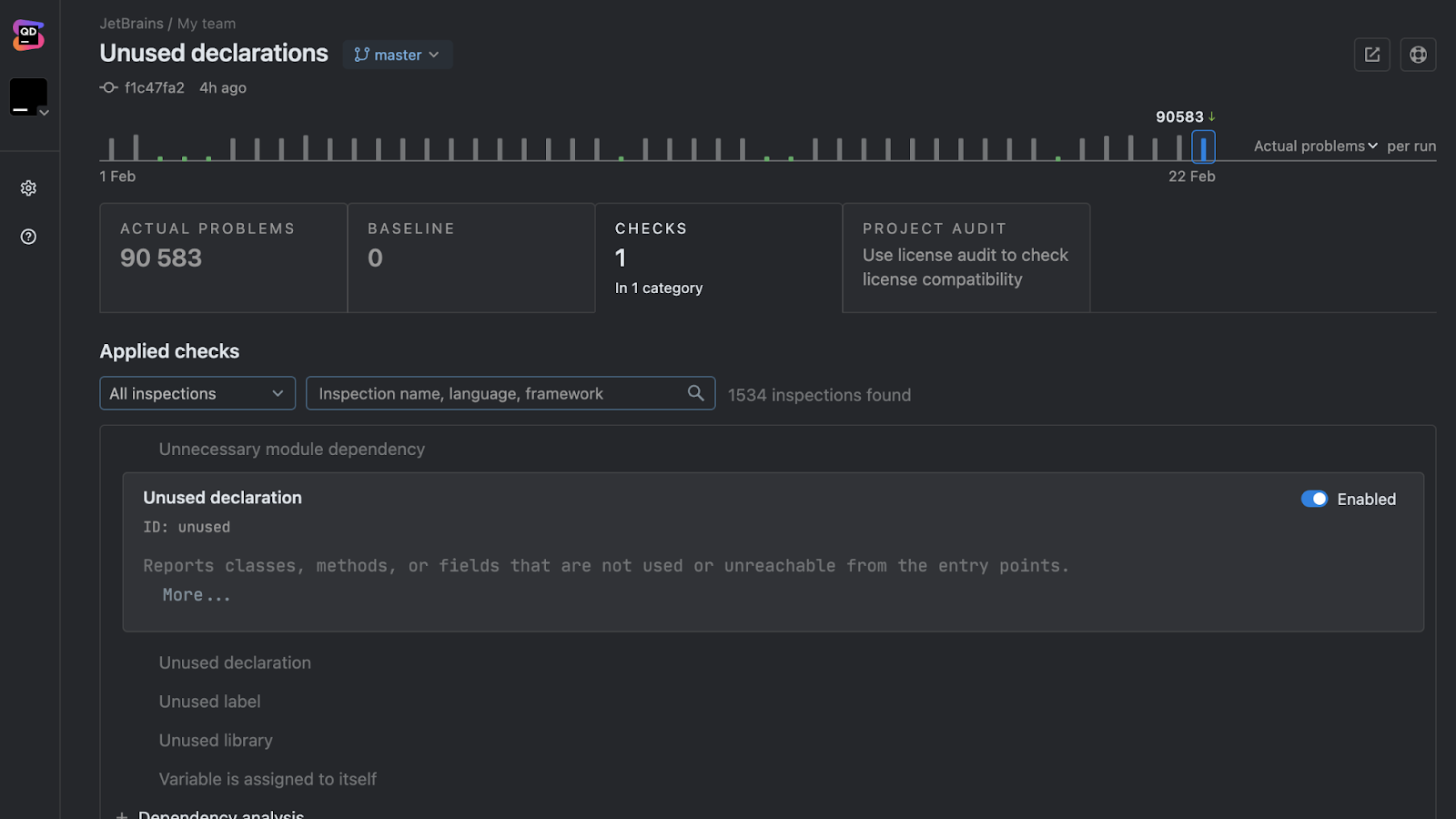

This is where automated review can pick up the slack, enforcing rules objectively and at scale. For example, Qodana could flag this risk automatically:

```java
// Potential null pointer issue
String name = user.getName().toUpperCase(); // NPE risk
```
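Once a line like this is flagged, the fix is usually small. The following is a minimal sketch of one null-safe rewrite, not a fix prescribed by any particular tool. The `User` class, the `upperName` helper, and the `"UNKNOWN"` fallback are all hypothetical names introduced for illustration.

```java
import java.util.Locale;
import java.util.Optional;

public class NullSafeName {
    // Hypothetical stand-in for the `user` object in the snippet above.
    static class User {
        private final String name;

        User(String name) {
            this.name = name;
        }

        String getName() {
            return name;
        }
    }

    // Returns the upper-cased name, or a fallback when either the user
    // or the name is null. The "UNKNOWN" fallback is an assumption.
    static String upperName(User user) {
        return Optional.ofNullable(user)
                .map(User::getName)                    // empty if getName() returns null
                .map(s -> s.toUpperCase(Locale.ROOT))  // locale-independent upper-casing
                .orElse("UNKNOWN");
    }

    public static void main(String[] args) {
        System.out.println(upperName(new User("ada")));
        System.out.println(upperName(new User(null)));
        System.out.println(upperName(null));
    }
}
```

Whether a fallback value, an exception, or an explicit null check is the right response depends on the codebase. That decision is the kind of judgment call that still belongs to a human reviewer.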