Supported LLMs

AI Assistant provides access to a variety of cloud-based LLMs through the JetBrains AI service. You can also use your own API keys to access models from third-party AI providers or connect to locally hosted models.

This flexibility allows you to choose the most suitable model for your tasks – for example, large models for complex codebase operations, smaller models for quick responses, or local models if you prefer to keep your data private.

Cloud-based models

The table below lists models available in AI Assistant with the JetBrains AI service subscription:

Reasoning: these models have reasoning capabilities, which makes them better suited for tasks that require logical thinking, structured output, or deeper contextual understanding.

Image input: these models can process images, so you can add visual context to your request.

| Model | Capabilities | Model context window |
|---|---|---|
|  |  | 200k |
|  |  | 200k |
|  |  | 200k |
|  |  | 200k |
|  |  | 200k |
|  |  | 200k |
|  |  | 200k |
|  |  | 200k |
|  |  | 1M |
|  |  | 1M |
|  |  | 1M |
|  |  | 1M |
|  |  | 1M |
|  |  | 1M |
|  |  | 400k |
|  |  | 400k |
|  |  | 400k |
|  |  | 400k |
|  |  | 400k |
|  |  | 400k |
|  |  | 400k |
|  |  | 400k |
|  |  | 400k |
|  |  | 1M |
|  |  | 1M |
|  |  | 1M |
|  |  | 128k |
|  |  | 200k |
|  |  | 200k |
|  |  | 200k |
|  |  | 200k |
|  |  | 2M |
|  |  | 2M |
|  |  | 256k |
|  |  | 256k |

Supported models history

The following table lists AI models supported by AI Assistant, along with the IDE versions in which they became available and, where applicable, the versions in which they were removed.

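| Model | Available in IDEs starting from version | Removed in version |
|---|---|---|
|  | 2025.3.x | – |
|  | 2025.3.x | – |
|  | 2025.2.x | – |
|  | 2025.2.x | – |
|  | 2025.1.x | – |
|  | 2025.2.x | – |
|  | 2025.1.x | – |
|  | 2024.3.x | – |
|  | 2024.3.x | 2025.3 |
|  | 2024.3.x | 2025.3 |
|  | 2025.1.x | – |
|  | 2025.1.x | – |
|  | 2025.1.x | – |
|  | 2025.1.x | – |
|  | 2025.1.x | – |
|  | 2024.3.x | – |
|  | 2024.3.x | 2025.1 |
|  | 2024.3.x | 2025.1 |
|  | 2025.2.x | – |
|  | 2025.3.x | – |
|  | 2025.3.x | – |
|  | 2025.3.x | – |
|  | 2025.1.x | – |
|  | 2025.3.x | – |
|  | 2025.1.x | – |
|  | 2025.1.x | – |
|  | 2025.1.x | – |
|  | 2025.1.x | – |
|  | 2025.1.x | – |
|  | 2025.1.x | – |
|  | 2024.2.x | – |
|  | 2025.1.x | – |
|  | 2025.1.x | – |
|  | 2024.3.x | – |
|  | 2024.3.x | – |
|  | 2024.3.x | 2025.1 |
|  | 2025.1.x | – |
|  | 2025.1.x | – |
|  | 2025.1.x | – |
|  | 2025.1.x | – |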

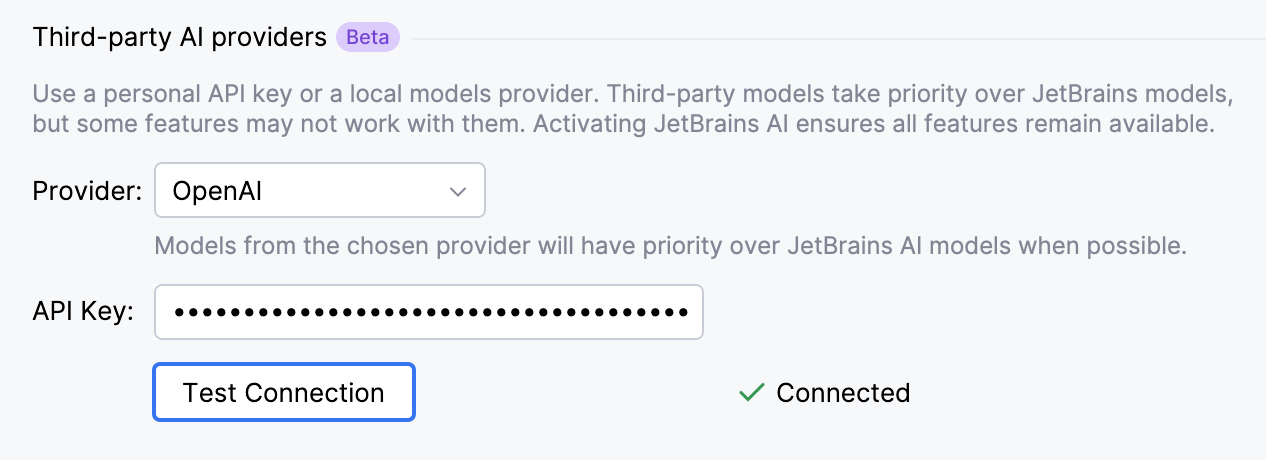

Models from third-party AI providers

AI Assistant supports the Bring Your Own Key approach, allowing you to provide your own API key to use models from a selected third-party AI provider. You can configure the connection in .

For more information, refer to Use third-party and local models.

Local models

AI Assistant supports a selection of models available through Ollama and LM Studio, allowing you to run models locally without relying on cloud-based services.
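Before connecting AI Assistant to a local provider, it can help to confirm that the local server is running and which models it exposes. The following is a minimal Python sketch that is not part of AI Assistant. It assumes Ollama and LM Studio are running on their default ports (11434 and 1234) and queries Ollama's `/api/tags` endpoint and LM Studio's OpenAI-compatible `/v1/models` endpoint. The helper function names are hypothetical.

```python
import json
import urllib.request
from typing import Callable, List, Optional

# ASSUMPTION: both servers run locally on their default ports.
OLLAMA_TAGS_URL = "http://localhost:11434/api/tags"
LMSTUDIO_MODELS_URL = "http://localhost:1234/v1/models"


def parse_ollama_models(payload: dict) -> List[str]:
    """Ollama's /api/tags returns {"models": [{"name": ...}, ...]}."""
    return [m["name"] for m in payload.get("models", [])]


def parse_lmstudio_models(payload: dict) -> List[str]:
    """LM Studio's OpenAI-compatible /v1/models returns {"data": [{"id": ...}, ...]}."""
    return [m["id"] for m in payload.get("data", [])]


def fetch_json(url: str, timeout: float = 2.0) -> Optional[dict]:
    """Return the decoded JSON body, or None if the server is unreachable or replies badly."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return json.load(resp)
    except (OSError, ValueError):  # URLError/timeouts are OSError; bad JSON is ValueError
        return None


if __name__ == "__main__":
    providers: List[tuple] = [
        ("Ollama", OLLAMA_TAGS_URL, parse_ollama_models),
        ("LM Studio", LMSTUDIO_MODELS_URL, parse_lmstudio_models),
    ]
    for name, url, parse in providers:
        payload = fetch_json(url)
        if payload is None:
            print(f"{name}: not reachable at {url}")
        else:
            print(f"{name}: {parse(payload)}")
```

If a provider is reported as not reachable, start its local server first; the model names it lists are the ones you would then expect to be able to select.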

The default model context window for local models is set to 64 000 tokens. If needed, you can adjust this value in the settings.
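To get a feel for how much input 64 000 tokens covers, the sketch below estimates whether a prompt fits in the default local context window. It assumes roughly four characters per token, a common rule of thumb for English text; actual token counts depend on each model's tokenizer, so treat the result as an approximation. The function names and the 4 000-token reserve for the model's reply are hypothetical.

```python
# Rough estimate of whether a prompt fits in a local model's context window.
# ASSUMPTION: ~4 characters per token (a rule of thumb, not a real tokenizer).

DEFAULT_LOCAL_CONTEXT_TOKENS = 64_000  # default context window for local models
CHARS_PER_TOKEN = 4                    # hypothetical heuristic; varies by model


def estimate_tokens(text: str, chars_per_token: int = CHARS_PER_TOKEN) -> int:
    """Estimate tokens as ceil(len(text) / chars_per_token)."""
    return -(-len(text) // chars_per_token)


def fits_in_context(prompt: str,
                    reserved_for_output: int = 4_000,
                    context_window: int = DEFAULT_LOCAL_CONTEXT_TOKENS) -> bool:
    """True if the estimated prompt size plus a reply budget fits the window."""
    return estimate_tokens(prompt) + reserved_for_output <= context_window


if __name__ == "__main__":
    source = "x" * 200_000          # e.g. a ~200 KB file added to the prompt
    print(estimate_tokens(source))  # 50000
    print(fits_in_context(source))  # True: 50 000 + 4 000 <= 64 000
```

Under the same assumptions, a 300 000-character input (about 75 000 estimated tokens) would not fit within the default window.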

For more information about setting up local models, refer to Connect local models.