Connect to an S3 bucket

This article explains how to create an Amazon S3 bucket connection and use it in a notebook.

Step 1. Create and configure a connection

Open the New cloud storage connection dialog in one of the following ways:

From the Home page: select the workspace to which you want to add a cloud storage connection.

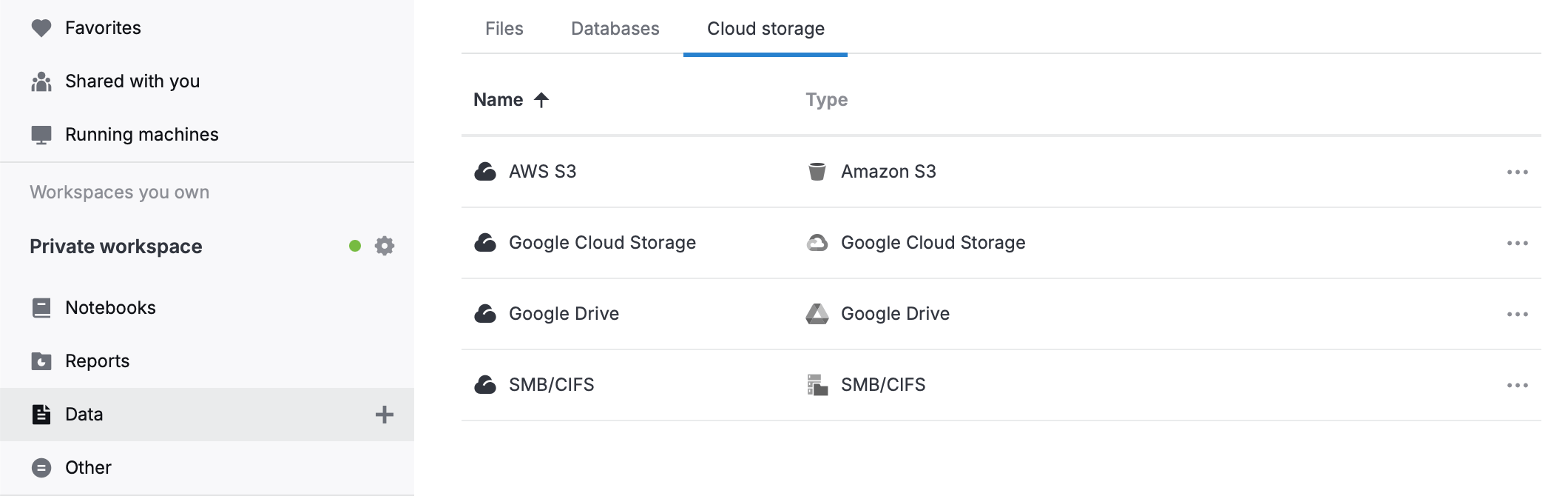

In the expanded list of workspace resources, select Data and switch to the Cloud storage tab.

Click New cloud storage connection at the top right.

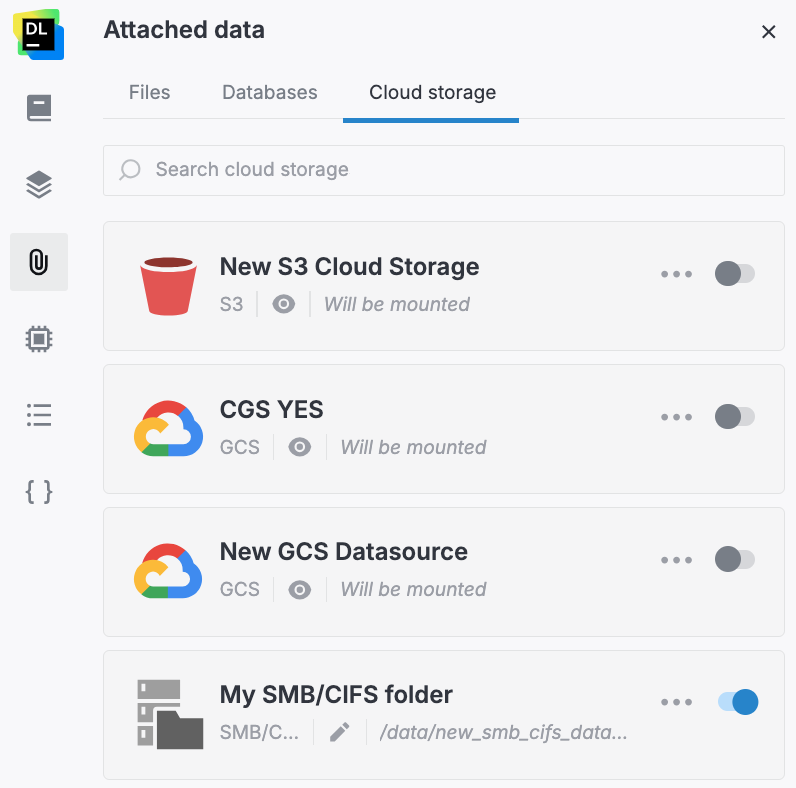

From a notebook: open the Attached data tool from the left-hand sidebar.

Switch to the Cloud storage tab. It lists all cloud storage connections available in the notebook's workspace.

At the bottom of the tab, click New cloud storage.

In the New cloud storage connection dialog, select Amazon S3.

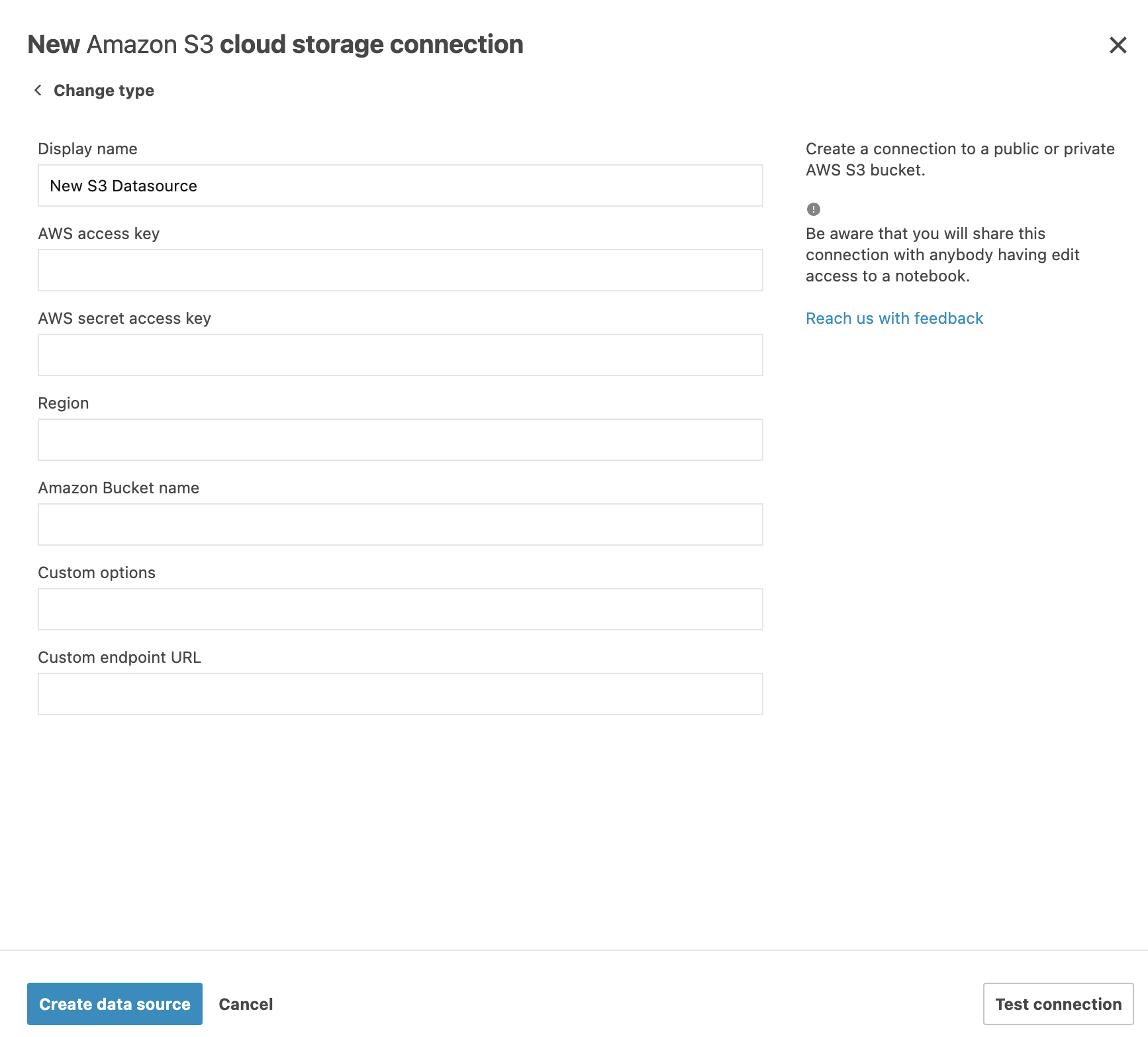

In the New Amazon S3 cloud storage connection dialog, fill in the following fields:

Display name: to specify the name under which this data source is listed in Datalore

AWS access key and AWS secret access key: the credentials used to access your AWS account (see the AWS documentation on managing access keys)

Region: to specify the AWS Region where the bucket is located

Amazon Bucket name: to specify the name of the bucket you want to mount

Custom options: to specify additional parameters (see Step 2 for examples)

Custom endpoint URL: to specify the endpoint URL of the storage service hosting the bucket, if it differs from the standard Amazon S3 endpoint

(Optional) Click the Test connection button to make sure the provided parameters are correct.

Click the Create and close button to finish the procedure.
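If Test connection fails, one thing to rule out is an invalid value in Amazon Bucket name. The Python sketch below checks a name against a subset of the AWS naming rules for general purpose buckets: 3 to 63 characters; only lowercase letters, digits, dots, and hyphens; a letter or digit at both ends; no adjacent dots; no `xn--` prefix or `-s3alias` suffix; not formatted as an IP address. The function `bucket_name_problems` is a hypothetical helper, not part of Datalore, and the rule list is not exhaustive, so the AWS documentation remains authoritative.

```python
import ipaddress
import re

# Allowed characters; the first and last character must be a letter or digit.
_NAME_RE = re.compile(r"[a-z0-9][a-z0-9.-]*[a-z0-9]")


def bucket_name_problems(name: str) -> list[str]:
    """Check name against a subset of the AWS general purpose bucket naming rules.

    Returns a list of human-readable problems; an empty list means none were found.
    """
    problems = []
    if not 3 <= len(name) <= 63:
        problems.append("must be 3 to 63 characters long")
    if not _NAME_RE.fullmatch(name):
        problems.append(
            "must use only lowercase letters, digits, dots, and hyphens, "
            "and begin and end with a letter or digit"
        )
    if ".." in name:
        problems.append("must not contain two adjacent dots")
    if name.startswith("xn--"):
        problems.append("must not start with 'xn--'")
    if name.endswith("-s3alias"):
        problems.append("must not end with '-s3alias'")
    try:
        ipaddress.IPv4Address(name)
    except ValueError:
        pass  # not an IPv4 address, which is what we want
    else:
        problems.append("must not be formatted as an IP address")
    return problems


if __name__ == "__main__":
    print(bucket_name_problems("my-datalore-data"))  # []
    print(bucket_name_problems("My_Bucket"))
```

A list of messages, rather than a single boolean, makes it easier to see which rule a rejected name breaks.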

Step 2. Configure optional parameters for S3 data sources

Use the Custom options field to pass optional parameters when creating an Amazon S3 data source. Below are two examples of how it can be used.

To enable SSE-C (server-side encryption with customer-provided keys) for S3 data sources, specify the following in the Custom options field:

use_sse=c:/path/to/keys/file

where /path/to/keys/file is the file that contains the keys. Make sure the file permissions are set to 600.

(For Datalore On-Premises only) To provide access based on the role associated with an EC2 instance profile, add the following to the Custom options field:

public_bucket=0,iam_role
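Both examples in this step follow one pattern: comma-separated entries that are either `key=value` or a bare `key`. Assuming that pattern, the Python sketch below creates an SSE-C key file with 600 permissions and composes the Custom options string. The helper names `write_sse_c_key_file` and `build_custom_options` are hypothetical, and the key file format (one base64-encoded 256-bit key per line) is an assumption; check the format your deployment expects.

```python
import base64
import os
import secrets


def write_sse_c_key_file(path: str) -> None:
    """Create a key file holding one random 256-bit key, base64-encoded, with mode 600.

    Assumption: the mount reads one base64-encoded key per line.
    """
    key = base64.b64encode(secrets.token_bytes(32)).decode("ascii")
    # O_EXCL makes this fail instead of silently overwriting an existing key file.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_EXCL, 0o600)
    with os.fdopen(fd, "w") as f:
        f.write(key + "\n")
    # The mode passed to os.open is filtered by the umask; set 600 explicitly.
    os.chmod(path, 0o600)


def build_custom_options(options: dict) -> str:
    """Join options into a comma-separated Custom options string.

    A value of None produces a bare flag such as iam_role.
    """
    return ",".join(
        key if value is None else f"{key}={value}" for key, value in options.items()
    )


if __name__ == "__main__":
    # /path/to/keys/file is the placeholder path used in this step.
    print(build_custom_options({"use_sse": "c:/path/to/keys/file"}))
    # use_sse=c:/path/to/keys/file
    print(build_custom_options({"public_bucket": 0, "iam_role": None}))
    # public_bucket=0,iam_role
```

Keep a backup of the key file: with SSE-C, Amazon S3 does not store your key, so objects written with it cannot be read without it.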

Step 3. Attach the connection to a notebook

Open the notebook you want to attach the connection to.

If the notebook is not running, start it.

In the sidebar, select Attached data and switch to the Cloud storage tab.

Enable the toggle on your S3 connection.


Step 4. Use the connection in the notebook

After the connection is mounted, you can access files in the S3 bucket from your notebook code through the connection's mount path.

The connection’s mount path, such as /data/s3/, is shown on the connection card, where you can also copy it.
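Because the bucket is mounted, standard file APIs can read from it. The Python sketch below lists the files under the mount path and reads CSV files with the standard library. It assumes the example mount path /data/s3/ (replace it with the path copied from your connection card) and that the bucket contains CSV files with a header row; `list_bucket_files` and `read_csv_rows` are illustrative helpers, not part of Datalore.

```python
import csv
from pathlib import Path

# Example mount path; replace it with the path copied from your connection card.
MOUNT_PATH = Path("/data/s3/")


def list_bucket_files(mount_path: Path, pattern: str = "*") -> list[Path]:
    """Return all files under mount_path (searched recursively) matching pattern, sorted."""
    return sorted(p for p in mount_path.rglob(pattern) if p.is_file())


def read_csv_rows(path: Path) -> list[dict]:
    """Read a CSV file with a header row into a list of dicts (values stay strings)."""
    with path.open(newline="") as f:
        return list(csv.DictReader(f))


if __name__ == "__main__":
    if MOUNT_PATH.is_dir():
        for csv_path in list_bucket_files(MOUNT_PATH, "*.csv"):
            print(csv_path, len(read_csv_rows(csv_path)), "rows")
    else:
        print(f"{MOUNT_PATH} not found; is the connection attached?")
```

Libraries that accept file paths, such as pandas `read_csv`, can read from the same paths. A recursive listing visits every object under the mount path, which can be slow for large buckets; starting from a subdirectory or using a narrower pattern keeps it manageable.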

Next steps

Learn how to manage and delete cloud storage connections in a workspace and in a notebook.

Keywords

S3, bucket, cloud storage, cloud storage connection, attach data, data sources, Amazon S3