Unit testing tutorial

This tutorial gives an overview of the unit testing approach and discusses four testing frameworks supported by CLion: Google Test, Boost.Test, Catch2, and Doctest.

The Unit Testing in CLion part will guide you through the process of including the frameworks into your project and describe CLion testing features.

The basics of unit testing

Unit testing aims to check individual units of your source code separately. A unit here is the smallest part of code that can be tested in isolation, for example, a free function or a class method.

Unit testing helps:

Modularize your code

As code's testability depends on its design, unit tests facilitate breaking it into specialized easy-to-test pieces.

Avoid regressions

When you have a suite of unit tests, you can run it repeatedly to ensure that everything keeps working correctly every time you add new functionality or introduce changes.

Document your code

Running, debugging, or even just reading tests can give a lot of information about how the original code works, so you can use them as implicit documentation.

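A single unit test is a method that checks some specific functionality and has clear pass/fail criteria. A test generally consists of three blocks: a setup block that prepares the data, a call to the functionality under test, and a block of assertions that checks the results.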
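As a minimal illustration of this three-part structure, here is a framework-free C++ sketch. The `join` function and the test name are hypothetical. They are not part of any testing framework or of the sample project. A real framework would replace the plain `bool` return with its own assertion macros.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical unit under test: joins words with a separator.
std::string join(const std::vector<std::string>& words, const std::string& sep) {
    std::string out;
    for (std::size_t i = 0; i < words.size(); ++i) {
        if (i > 0) out += sep;
        out += words[i];
    }
    return out;
}

// One test: a single function with a clear pass/fail outcome.
bool test_join_uses_separator() {
    // 1. Setup: prepare the input data.
    const std::vector<std::string> parts{"2024", "02", "29"};

    // 2. Run the functionality under test.
    const std::string result = join(parts, "-");

    // 3. Check the result against the expected value.
    return result == "2024-02-29";
}
```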

Good practices for unit testing include:

Creating tests for all publicly exposed functions, including class constructors and operators.

Covering all code paths and checking both trivial and edge cases, including those with incorrect input data (refer to negative testing).

Ensuring that each test works independently and doesn't prevent other tests from running.

Organizing tests in a way that the order in which you run them doesn't affect the results.
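The practices above can be illustrated with a small, framework-free C++ sketch. The `days_in_month` function and the test functions are hypothetical examples. The sketch includes:

- one trivial case,
- edge cases that cover each branch of the Gregorian leap-year rule,
- a negative test that expects invalid input to be rejected with an exception.

Each test builds its own input, so the tests neither depend on each other nor on the order in which they run.

```cpp
#include <cassert>
#include <stdexcept>

// Hypothetical function under test: number of days in a Gregorian month.
int days_in_month(int year, int month) {
    if (month < 1 || month > 12) throw std::invalid_argument("month must be in 1..12");
    static const int kDays[] = {31, 28, 31, 30, 31, 30, 31, 31, 30, 31, 30, 31};
    const bool leap = (year % 4 == 0 && year % 100 != 0) || year % 400 == 0;
    return (month == 2 && leap) ? 29 : kDays[month - 1];
}

// Trivial case.
bool test_trivial_case() { return days_in_month(2023, 1) == 31; }

// Edge cases: every branch of the leap-year rule.
bool test_leap_year_rules() {
    return days_in_month(2024, 2) == 29    // divisible by 4
        && days_in_month(1900, 2) == 28    // divisible by 100 but not by 400
        && days_in_month(2000, 2) == 29    // divisible by 400
        && days_in_month(2023, 2) == 28;   // not divisible by 4
}

// Negative test: invalid input must be rejected.
bool test_invalid_month_throws() {
    try {
        days_in_month(2024, 13);
    } catch (const std::invalid_argument&) {
        return true;   // the expected failure mode
    }
    return false;      // no exception means the check failed
}
```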

It's useful to group test cases when they are logically connected or use the same data. Suites combine tests with common functionality (for example, when performing different cases for the same function). Fixture classes help organize shared resources for multiple tests. They are used to set up and clean up the environment for each test within a group and thus avoid code duplication.
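Here is a sketch of how a fixture works, written in plain C++. It assumes that the fixture's constructor plays the role of setup and its destructor the role of cleanup. This mirrors the way Google Test's `TEST_F` creates a fresh fixture object for every test. The class and test names here are hypothetical.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Fixture: shared data for a group of tests.
class DateListFixture {
public:
    // Setup: runs before each test body.
    DateListFixture() : dates{"2000-01-01", "2000-01-02"} {}
    // Cleanup: runs after each test body (trivial here, shown for symmetry).
    ~DateListFixture() { dates.clear(); }

protected:
    std::vector<std::string> dates;
};

// Each test derives from the fixture and gets its own copy of the data.
struct AppendDateTest : DateListFixture {
    bool run() {
        dates.push_back("2000-01-03");  // modifies only this test's data
        return dates.size() == 3;
    }
};

struct InitialSizeTest : DateListFixture {
    bool run() const { return dates.size() == 2; }  // unaffected by AppendDateTest
};

// Creates a fresh fixture object for every test, then destroys it.
template <typename Test>
bool run_test() {
    Test test;           // constructor = setup
    return test.run();   // destructor = cleanup when 'test' goes out of scope
}
```

Because each test gets a new fixture object, running `AppendDateTest` before `InitialSizeTest` does not change the outcome of either.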

Unit testing is often combined with mocking. Mock objects are lightweight implementations of the components that the code under test depends on. They are used when the functionality under test has complex dependencies and it is difficult to construct a viable test case using real-world objects.
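The following sketch shows the idea in plain C++. It assumes the dependency is accessed through an abstract interface. The `Clock` interface, the `age_in_years` function, and `MockClock` are hypothetical. Mocking libraries such as Google Mock generate similar stand-ins and add call expectations on top.

```cpp
#include <cassert>

// Dependency interface (hypothetical): the code under test only sees this.
struct Clock {
    virtual ~Clock() = default;
    virtual int current_year() const = 0;
};

// Code under test: depends on Clock only through the interface.
int age_in_years(int birth_year, const Clock& clock) {
    return clock.current_year() - birth_year;
}

// Mock: returns a fixed, test-controlled value and records how often it was called.
struct MockClock : Clock {
    int year = 0;
    mutable int calls = 0;
    int current_year() const override {
        ++calls;
        return year;
    }
};
```

In a test, you set `MockClock::year` to a known value, pass the mock to `age_in_years`, and check both the result and the recorded number of calls.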

Frameworks

Manual unit testing involves a lot of routine work: writing stub test code, implementing main(), printing output messages, and so on. Unit testing frameworks not only help automate these operations but also let you benefit from the following:

Manageable assertion behavior

With a framework, you can specify whether or not a failure of a single check should cancel the whole test execution: along with the regular ASSERT, frameworks provide EXPECT/CHECK macros that don't interrupt your test program on failure.

Various checkers

Checkers are macros for comparing the expected and the actual result. Checkers provided by testing frameworks often have configurable severity (warning, regular expectation, or requirement). They can also include tolerances for floating-point comparisons and even built-in checks that verify whether an exception is thrown under certain conditions.
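To make the difference between fatal and non-fatal checks concrete, here is a framework-free C++ sketch.

- `MY_EXPECT` and `MY_ASSERT` are hypothetical macros modeled on the assertion behavior described above. The first records a failure and lets the test continue. The second records the failure and returns from the test function.
- `is_near` and `throws_as` are hypothetical helpers. They stand in for the tolerance and exception checkers that frameworks provide, for example, `EXPECT_NEAR` and `EXPECT_THROW` in Google Test.

```cpp
#include <cassert>
#include <cmath>
#include <iostream>
#include <stdexcept>

int g_failures = 0;  // hypothetical failure counter; frameworks track this for you
int g_steps = 0;     // counts statements executed after the checks

// Non-fatal check: record the failure and keep running the test.
#define MY_EXPECT(cond)                                    \
    do {                                                   \
        if (!(cond)) {                                     \
            ++g_failures;                                  \
            std::cerr << "EXPECT failed: " #cond "\n";     \
        }                                                  \
    } while (0)

// Fatal check: record the failure and return from the test function.
#define MY_ASSERT(cond)                                    \
    do {                                                   \
        if (!(cond)) {                                     \
            ++g_failures;                                  \
            std::cerr << "ASSERT failed: " #cond "\n";     \
            return;                                        \
        }                                                  \
    } while (0)

// Checker with an absolute tolerance for floating-point values.
bool is_near(double expected, double actual, double tolerance) {
    return std::fabs(expected - actual) <= tolerance;
}

// Checker that passes only if f() throws an exception of type E.
template <typename E, typename F>
bool throws_as(F f) {
    try {
        f();
    } catch (const E&) {
        return true;   // the expected exception type
    } catch (...) {
        return false;  // some other exception
    }
    return false;      // nothing was thrown
}

void test_expect_continues() {
    MY_EXPECT(0.1 + 0.2 == 0.3);              // fails: exact comparison of doubles
    MY_EXPECT(is_near(0.3, 0.1 + 0.2, 1e-9)); // passes with a tolerance
    ++g_steps;                                // still executed
}

void test_assert_stops() {
    MY_ASSERT(throws_as<std::out_of_range>([] {}));  // fails: nothing is thrown
    ++g_steps;                                       // skipped
}
```

The exact comparison fails because 0.1, 0.2, and 0.3 are not exactly representable in binary floating point. This is why tolerance-based checkers exist.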

Test organization

With frameworks, it's easy to create and run subsets of tests grouped by common functionality (suites) or shared data (fixtures). Also, modern frameworks automatically register new tests, so you don't need to do that manually.
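Automatic registration commonly relies on static objects that are constructed before `main()` runs. The sketch below shows this pattern in plain C++. `MY_TEST`, `Registrar`, and `registry` are hypothetical names. Real frameworks differ in details, such as also storing the file and line of each test.

```cpp
#include <cassert>
#include <functional>
#include <iostream>
#include <string>
#include <utility>
#include <vector>

using TestFn = std::function<bool()>;

// Global list of tests; a function-local static avoids initialization-order problems.
std::vector<std::pair<std::string, TestFn>>& registry() {
    static std::vector<std::pair<std::string, TestFn>> tests;
    return tests;
}

// Constructing a Registrar adds one test to the registry.
struct Registrar {
    Registrar(std::string name, TestFn fn) {
        registry().emplace_back(std::move(name), std::move(fn));
    }
};

// Hypothetical macro: declares the test function, registers it through a
// static Registrar (constructed before main() runs), then starts its definition.
#define MY_TEST(name)                               \
    bool name();                                    \
    static Registrar name##_registrar(#name, name); \
    bool name()

MY_TEST(LeapDayIn2024) { return 2024 % 4 == 0; }
MY_TEST(NonLeapYear2023) { return 2023 % 4 != 0; }

// Runs every registered test and returns the number of failures.
int run_all_tests() {
    int failures = 0;
    for (const auto& [name, fn] : registry()) {
        if (!fn()) {
            ++failures;
            std::cerr << "FAILED: " << name << '\n';
        }
    }
    return failures;
}
```

Adding a new test needs only a new `MY_TEST` block; nothing has to be listed by hand.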

Customizable messages

Frameworks take care of the test output: they can show verbose descriptive output, as well as user-defined messages or only brief pass/fail results (the latter is especially handy for regression testing).

XML reports

Most testing frameworks can export results in XML format. This is useful when you need to pass the results on to a continuous integration system such as TeamCity or Jenkins.
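As an illustration of what such a report contains, the sketch below writes test results as a minimal JUnit-style XML document. CI servers such as Jenkins commonly consume this format. The `TestResult` struct and `to_junit_xml` function are hypothetical. In practice, you let the framework produce the file; Google Test, for instance, does this with the `--gtest_output=xml` option.

```cpp
#include <cassert>
#include <sstream>
#include <string>
#include <vector>

struct TestResult {
    std::string name;
    bool passed;
    std::string message;  // failure message, empty when the test passed
};

// Escapes characters that are not allowed verbatim in XML attribute values.
std::string xml_escape(const std::string& s) {
    std::string out;
    for (char c : s) {
        switch (c) {
            case '&': out += "&amp;"; break;
            case '<': out += "&lt;"; break;
            case '>': out += "&gt;"; break;
            case '"': out += "&quot;"; break;
            default: out += c;
        }
    }
    return out;
}

// Writes results as a minimal JUnit-style <testsuite> element.
std::string to_junit_xml(const std::string& suite, const std::vector<TestResult>& results) {
    int failures = 0;
    for (const auto& r : results)
        if (!r.passed) ++failures;

    std::ostringstream xml;
    xml << "<testsuite name=\"" << xml_escape(suite) << "\" tests=\"" << results.size()
        << "\" failures=\"" << failures << "\">\n";
    for (const auto& r : results) {
        xml << "  <testcase name=\"" << xml_escape(r.name) << "\"";
        if (r.passed) {
            xml << "/>\n";
        } else {
            xml << ">\n    <failure message=\"" << xml_escape(r.message)
                << "\"/>\n  </testcase>\n";
        }
    }
    xml << "</testsuite>\n";
    return xml.str();
}
```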

Unit testing in CLion

CLion's integration with Google Test, Boost.Test, Catch2, and Doctest includes:

full code insight for framework libraries,

dedicated run/debug configurations,

gutter icons to run or debug tests/suites/fixtures and check their status,

a specialized test runner,

and code generation for tests and fixture classes (available for Google Test).

Setting up a testing framework for your project

In this chapter, we will discuss how to add the Google Test, Boost.Test, Catch2, and Doctest frameworks to a project in CLion and how to write a simple set of tests.

As an example, we will use the DateConverter project that you can clone from its GitHub repository. This program calculates the absolute value of a date given in the Gregorian calendar format and converts it into a Julian calendar date. Initially, the project doesn't include any tests; we will add them step by step. To see the difference between the frameworks, we will use all four to perform the same tests.
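To make the domain concrete, here is a hypothetical C++ sketch of such a conversion. It is not the DateConverter project's code. It assumes a common convention, used for example in Reingold and Dershowitz's calendrical algorithms, in which the absolute date counts days so that January 1 of year 1 in the Gregorian calendar is day 1. It is only valid for years from 1 onward.

```cpp
#include <cassert>

struct Date { int year, month, day; };

bool is_gregorian_leap(int y) { return (y % 4 == 0 && y % 100 != 0) || y % 400 == 0; }
bool is_julian_leap(int y) { return y % 4 == 0; }

int last_day_of_month(int month, bool leap) {
    static const int kDays[] = {31, 28, 31, 30, 31, 30, 31, 31, 30, 31, 30, 31};
    return (month == 2 && leap) ? 29 : kDays[month - 1];
}

// Absolute day number of a Gregorian date (year >= 1).
long absolute_from_gregorian(Date d) {
    long n = d.day;
    for (int m = 1; m < d.month; ++m) n += last_day_of_month(m, is_gregorian_leap(d.year));
    const long y = d.year - 1;
    return n + 365 * y + y / 4 - y / 100 + y / 400;
}

// Absolute day number of a Julian date (year >= 1). The "- 2" reflects that
// Julian January 1, year 1 falls two days before Gregorian January 1, year 1.
long absolute_from_julian(Date d) {
    long n = d.day;
    for (int m = 1; m < d.month; ++m) n += last_day_of_month(m, is_julian_leap(d.year));
    const long y = d.year - 1;
    return n + 365 * y + y / 4 - 2;
}

// Converts an absolute day number to a Julian date by searching forward.
Date julian_from_absolute(long abs) {
    int year = static_cast<int>((abs + 2) / 366);  // starting estimate, never too large
    while (abs >= absolute_from_julian({year + 1, 1, 1})) ++year;
    int month = 1;
    while (abs > absolute_from_julian({year, month, last_day_of_month(month, is_julian_leap(year))}))
        ++month;
    const int day = static_cast<int>(abs - absolute_from_julian({year, month, 1}) + 1);
    return {year, month, day};
}
```

For example, Gregorian January 1, 2000 has the absolute date 730120, which corresponds to December 19, 1999 in the Julian calendar. Checks like this one are natural candidates for unit tests. So is the fact that consecutive dates differ by exactly one.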

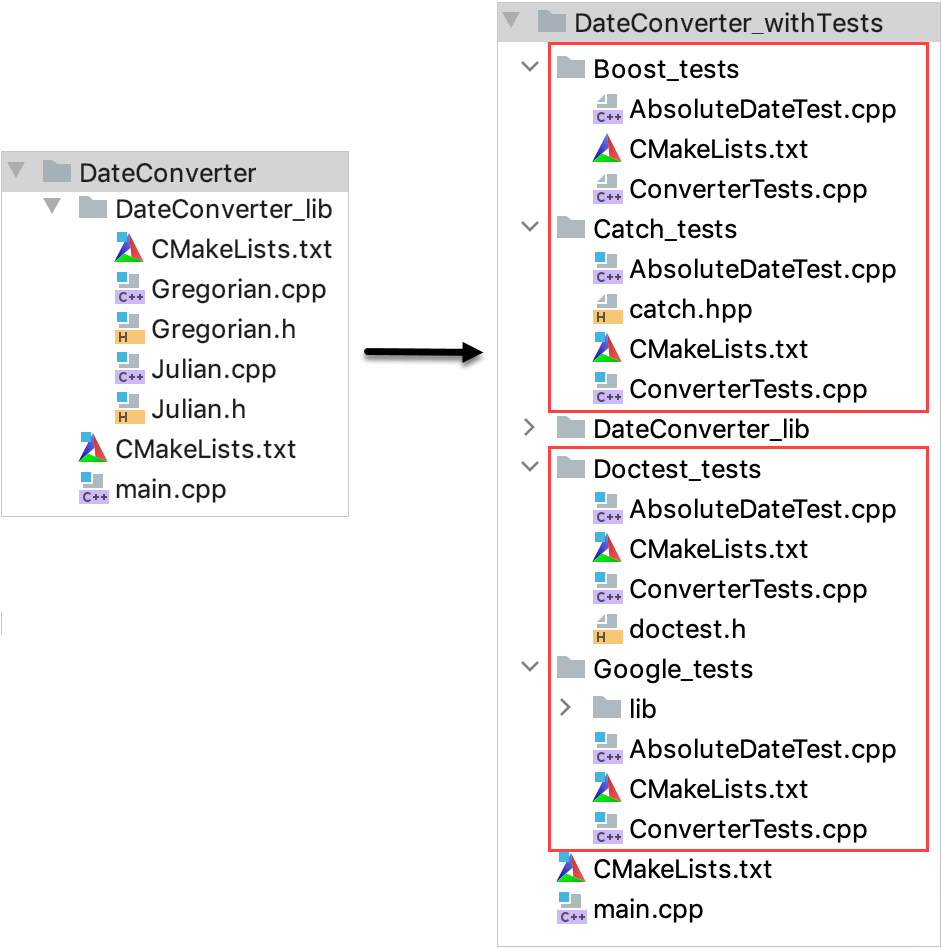

You can find the final version of the project in the DateConverter_withTests repository. Here is how the project structure will be transformed:

For each framework, we will do the following:

Add the framework to the DateConverter project.

Create two test files, AbsoluteDateTest.cpp and ConverterTests.cpp. These files will be named similarly for each framework, and they will contain test code written using the syntax of a particular framework.

Now let's open the cloned DateConverter project and follow the instructions given in the tabs below:

Include the Google Test framework

Create a folder for Google Tests under the DateConverter project root. Inside it, create another folder for the framework's files. In our example, these are the Google_tests and Google_tests/lib folders, respectively.

Download Google Test from the official repository. Extract the contents of the googletest-main folder into Google_tests/lib.

Add a CMakeLists.txt file to the Google_tests folder (right-click it in the project tree and select ). Add the following lines:

    project(Google_tests)
    add_subdirectory(lib)
    include_directories(${gtest_SOURCE_DIR}/include ${gtest_SOURCE_DIR})

In the root CMakeLists.txt script, add the add_subdirectory(Google_tests) line at the end and reload the project.

Add Google tests

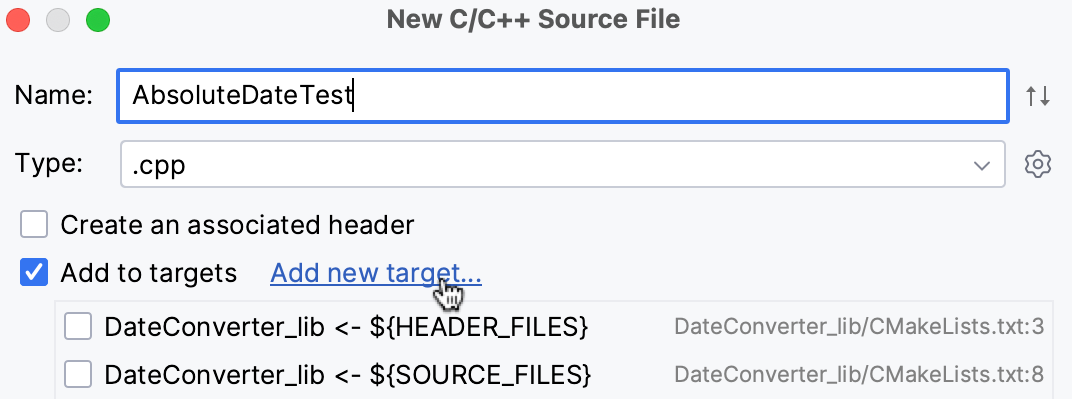

Click Google_tests folder in the project tree and select , call it AbsoluteDateTest.cpp.

CLion prompts to add this file to an existing target. We don't need to do that, since we are going to create a new target for this file on the next step.

Repeat this step for ConverterTests.cpp.

With two source files added, we can create a test target for them and link it with the DateConverter_lib library. Add the following lines to Google_tests/CMakeLists.txt:

    # adding the Google_Tests_run target
    add_executable(Google_Tests_run ConverterTests.cpp AbsoluteDateTest.cpp)

    # linking Google_Tests_run with DateConverter_lib which will be tested
    target_link_libraries(Google_Tests_run DateConverter_lib)

    target_link_libraries(Google_Tests_run gtest gtest_main)

Copy the Google Test version of our checks from AbsoluteDateTest.cpp and ConverterTests.cpp to your AbsoluteDateTest.cpp and ConverterTests.cpp files.

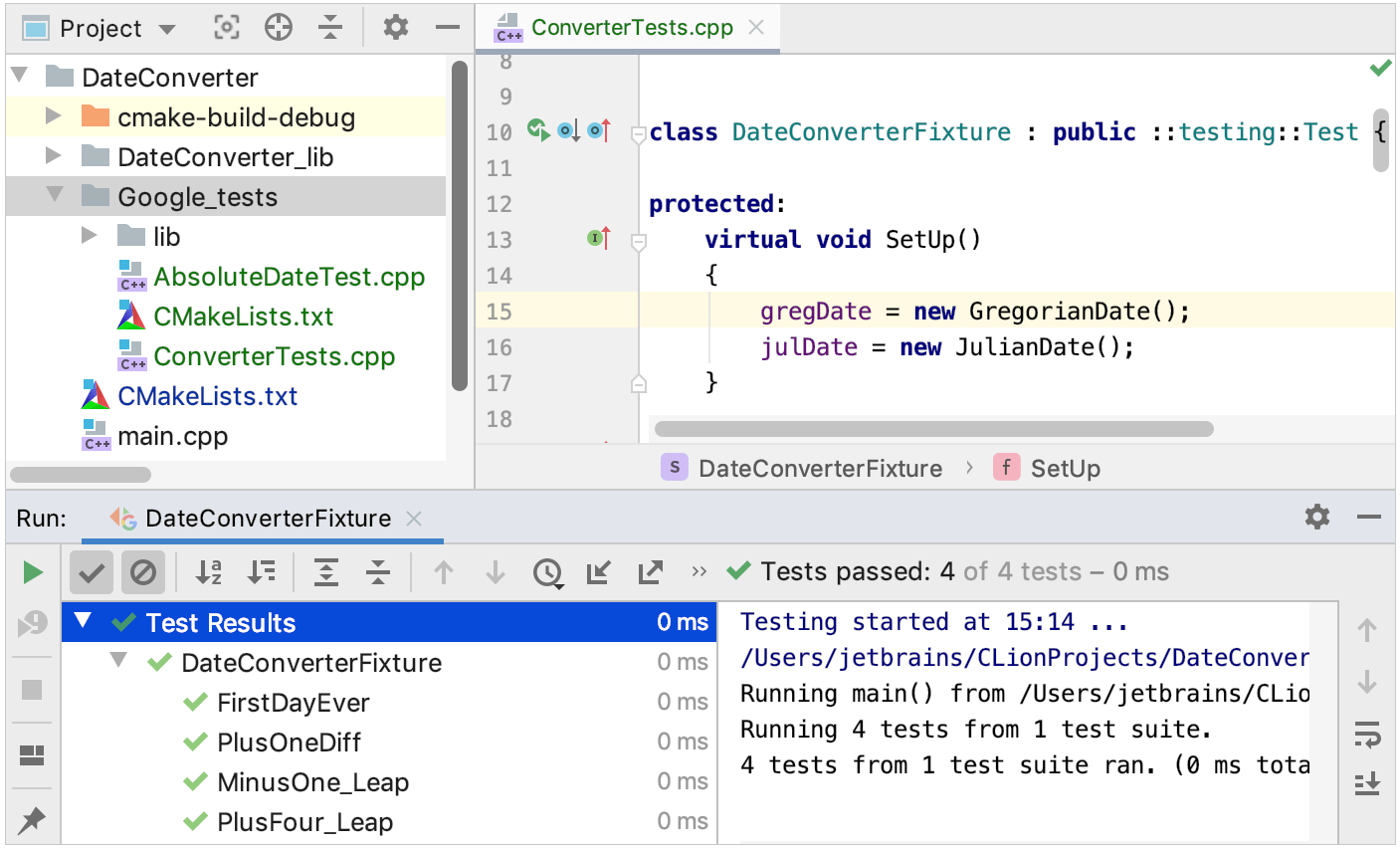

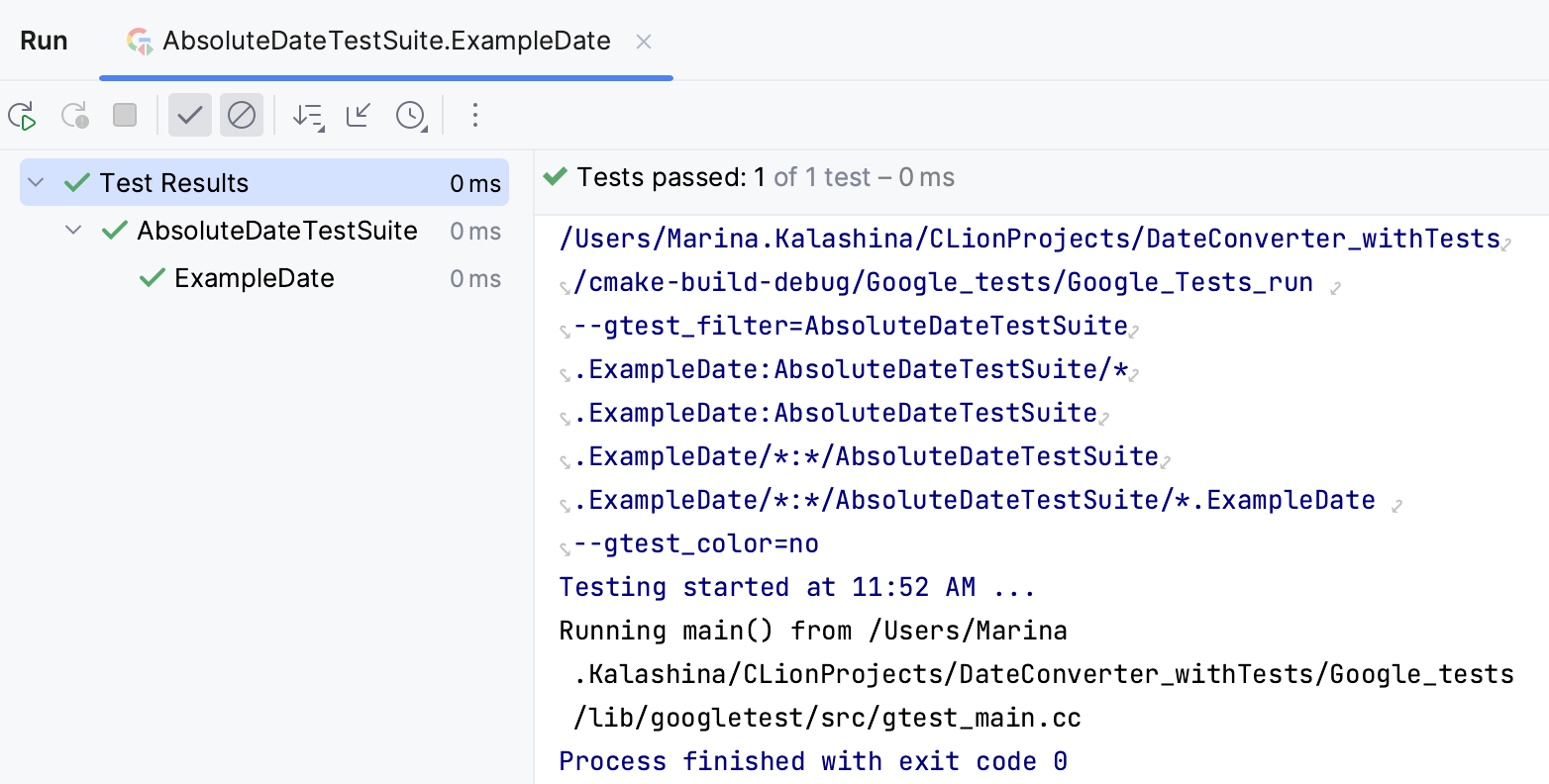

Now the tests are ready to run. For example, let's click the icon in the left gutter next to the DateConverterFixture declaration in ConverterTests.cpp and choose Run. We will get the following results:

Include the Boost.Test framework

Install and build the Boost.Test framework following these instructions (in the tests below, we will use the shared library usage variant to link the framework).

Create a folder for Boost tests under the DateConverter project root. In our example, it's called Boost_tests.

Add a CMakeLists.txt file to the Boost_tests folder (right-click it in the project tree and select ). Add the following lines:

    set (Boost_USE_STATIC_LIBS OFF)
    find_package (Boost REQUIRED COMPONENTS unit_test_framework)
    include_directories (${Boost_INCLUDE_DIRS})

In the root CMakeLists.txt script, add the add_subdirectory(Boost_tests) line at the end and reload the project.

Add Boost tests

Click Boost_tests in the project tree and select , call it AbsoluteDateTest.cpp.

CLion will prompt to add this file to an existing target. We don't need to do that, since we are going to create a new target for this file on the next step.

Repeat this step for ConverterTests.cpp.

With two source files added, we can create a test target for them and link it with the DateConverter_lib library. Add the following lines to Boost_tests/CMakeLists.txt:

    add_executable (Boost_Tests_run ConverterTests.cpp AbsoluteDateTest.cpp)
    target_link_libraries (Boost_Tests_run ${Boost_LIBRARIES})
    target_link_libraries (Boost_Tests_run DateConverter_lib)

Reload the project.

Copy the Boost.Test version of our checks from AbsoluteDateTest.cpp and ConverterTests.cpp to the corresponding source files in your project.

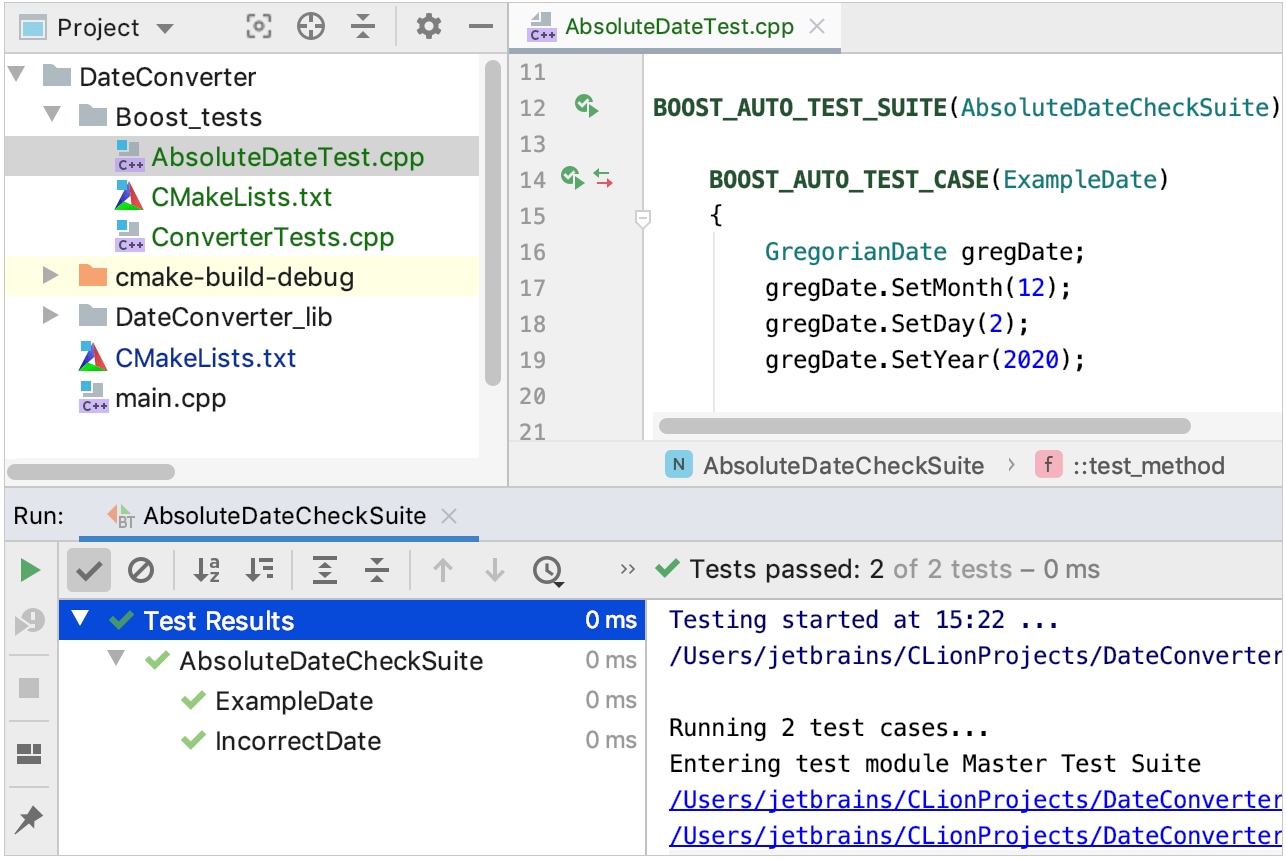

Now the tests are ready to run. For example, let's click the icon in the left gutter next to BOOST_AUTO_TEST_SUITE(AbsoluteDateCheckSuite) in AbsoluteDateTest.cpp and choose Run. We will get the following results:

Include the Catch2 framework

Install Catch2 on your system following the official instructions.

Create a folder for Catch2 tests under the DateConverter project root. In our example, it's called Catch_tests.

Create a CMakeLists.txt file in the Catch_tests folder (right-click the folder in the project tree and select ).

We will fill this file step by step. For now, add one command at the top:

    find_package(Catch2 3 REQUIRED)

In the root CMakeLists.txt, add the following command at the end and reload the project:

    add_subdirectory(Catch_tests)

Add Catch2 test targets

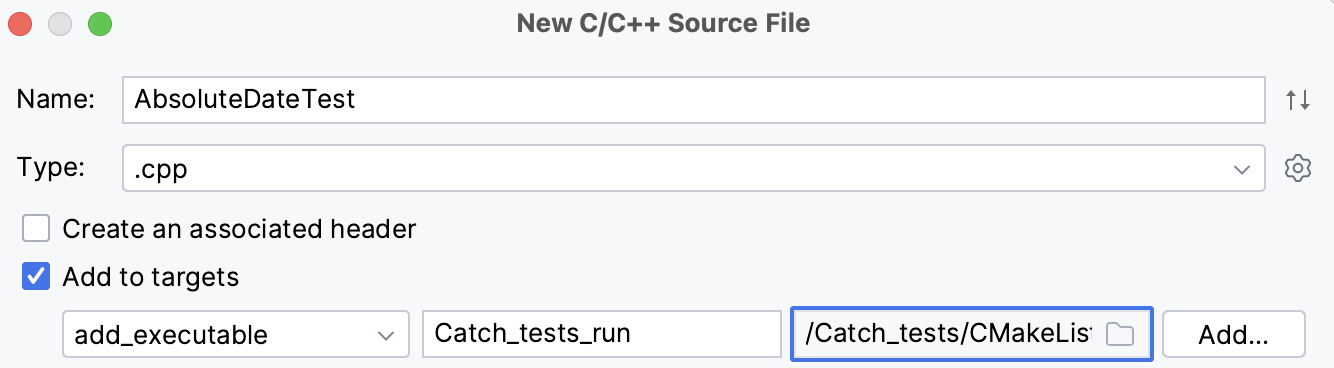

Click Catch_tests in the project tree and select , call it AbsoluteDateTest.cpp.

Click Add new target:

Set the target name to Catch_tests_run and specify its location, Catch_tests/CMakeLists.txt.

Click Add.

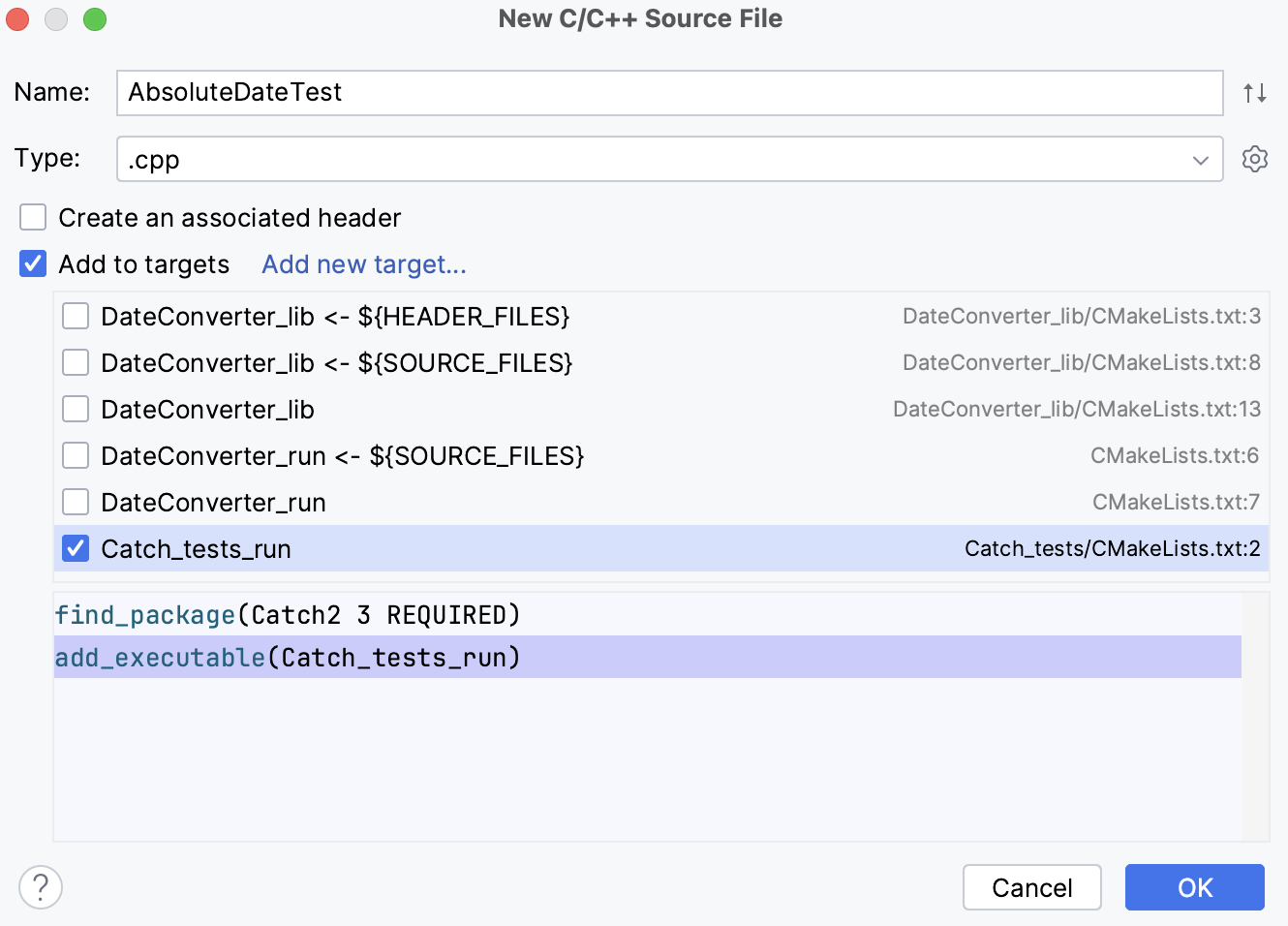

The Catch_tests_run target will appear in the list. Make sure to clear all the other checkboxes:

Click OK.

A new file is added to the project and the following command is added to Catch_tests/CMakeLists.txt:

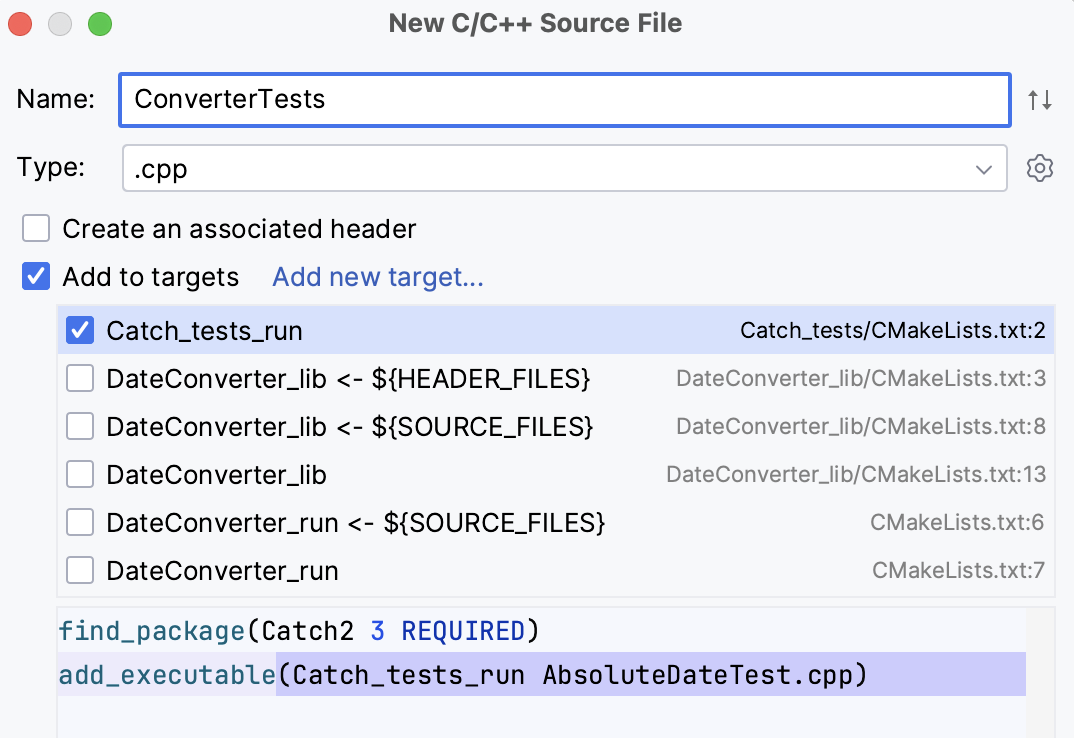

    add_executable(Catch_tests_run AbsoluteDateTest.cpp)

Create another file in the same location and call it ConverterTests.cpp. Add it to the Catch_tests_run target:

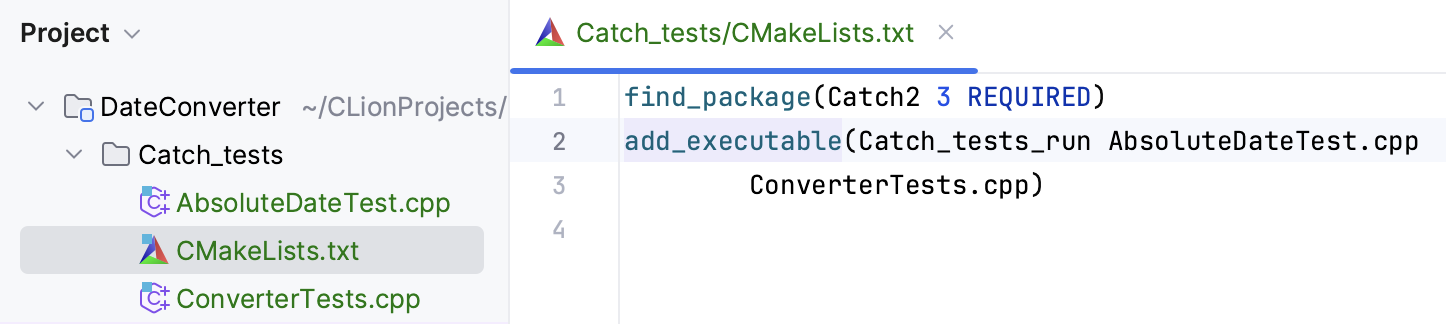

Now we have two source files linked to the tests target:

Open the Catch_tests/CMakeLists.txt script and add the following lines after the add_executable command:

    target_link_libraries(Catch_tests_run PRIVATE DateConverter_lib)
    target_link_libraries(Catch_tests_run PRIVATE Catch2::Catch2WithMain)
    include(Catch)
    catch_discover_tests(Catch_tests_run)

Reload the project.

Add testing code and run tests

Copy the code from AbsoluteDateTest.cpp and ConverterTests.cpp to the corresponding source files.

Notice that the tests are preceded with the following include directive:

    #include <catch2/catch_test_macros.hpp>

Now the tests are ready to run.

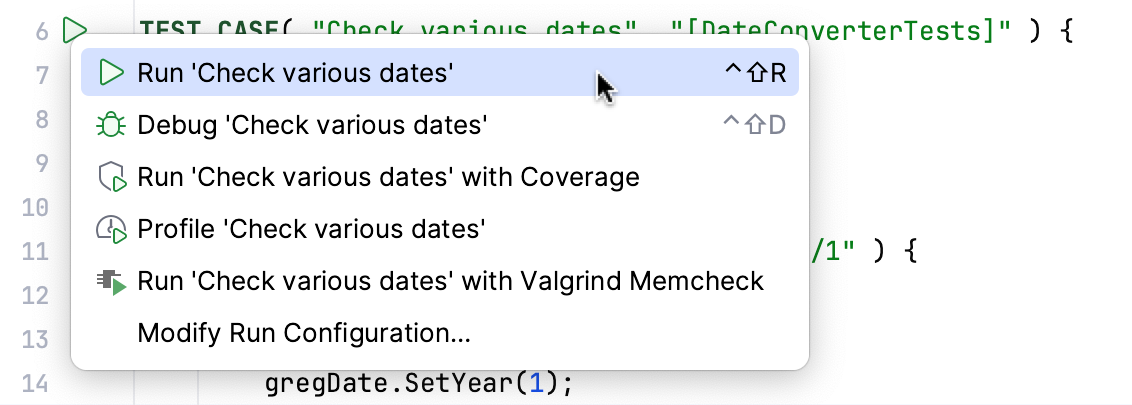

The quickest way to run tests is by clicking the icon in the gutter next to a TEST_CASE:

CLion will show the results in the Test Runner tool window:

Include the Doctest framework

Create a folder for Doctest tests under the DateConverter project root. In our example, it's called Doctest_tests.

Download the doctest.h header and place it in the Doctest_tests folder.

Add Doctest tests

Click Doctest_tests in the project tree and select , call it AbsoluteDateTest.cpp.

CLion will prompt to add this file to an existing target. We don't need to do that, since we are going to create a new target for this file on the next step.

Repeat this step for ConverterTests.cpp.

Add a CMakeLists.txt file to the Doctest_tests folder (right-click the folder in the project tree and select ). Add the following lines:

    add_executable(Doctest_tests_run ConverterTests.cpp AbsoluteDateTest.cpp)
    target_link_libraries(Doctest_tests_run DateConverter_lib)

In the root CMakeLists.txt, add add_subdirectory(Doctest_tests) at the end and reload the project.

Copy the Doctest version of our checks from AbsoluteDateTest.cpp and ConverterTests.cpp to the corresponding source files in your project.

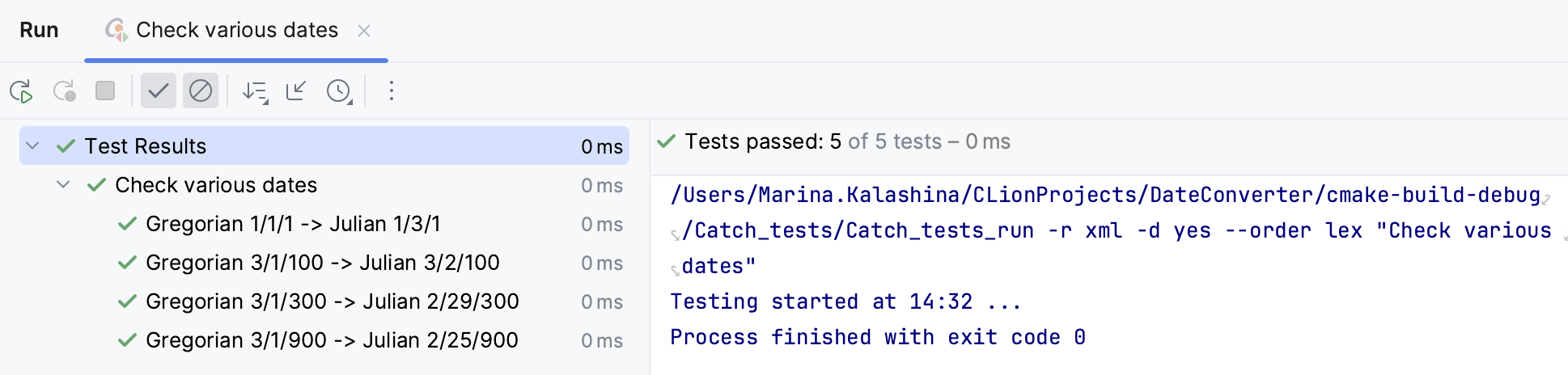

Now the tests are ready to run. For example, let's click the icon in the left gutter next to TEST_CASE("Check various dates") in ConverterTests.cpp and choose Run. We will get the following results:

Run/Debug configurations for tests

Test frameworks provide the main() entry for test programs, so it is possible to run them as regular applications in CLion. However, we recommend using the dedicated run/debug configurations for Google Test, Boost.Test, Catch2, and Doctest. These configurations include test-related settings and let you benefit from the built-in test runner (which is unavailable if you run the tests as regular applications).

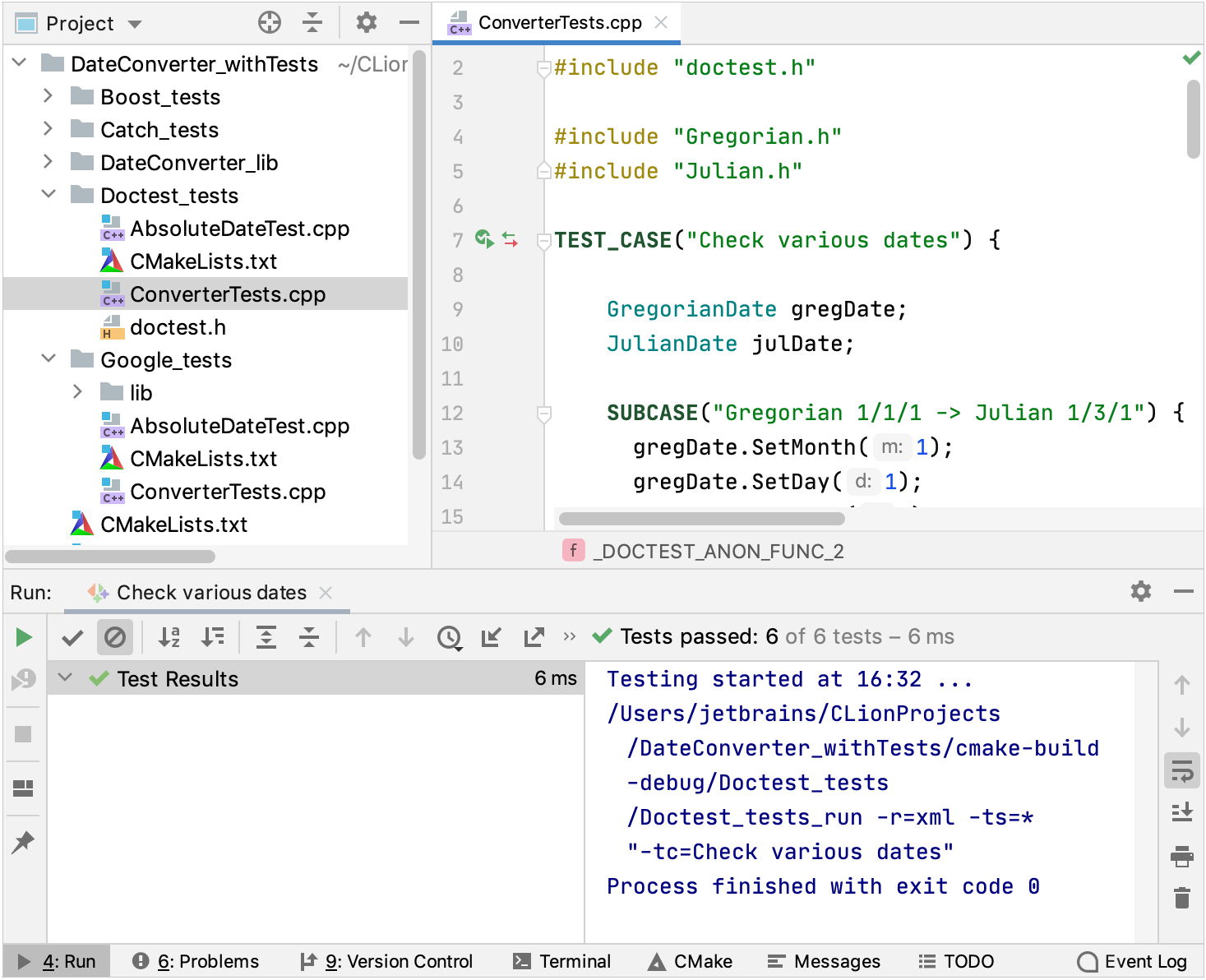

Create a run/debug configuration for tests

Go to Run | Edit Configurations, click the Add New Configuration button, and select one of the framework-specific templates:

Set up your configuration

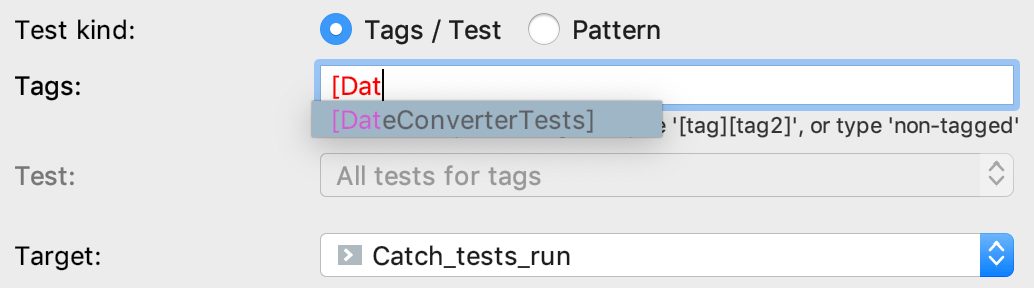

Depending on the framework, specify a test pattern, suite, or tags (for Catch2). Auto-completion is available in these fields to help you fill them in quickly:

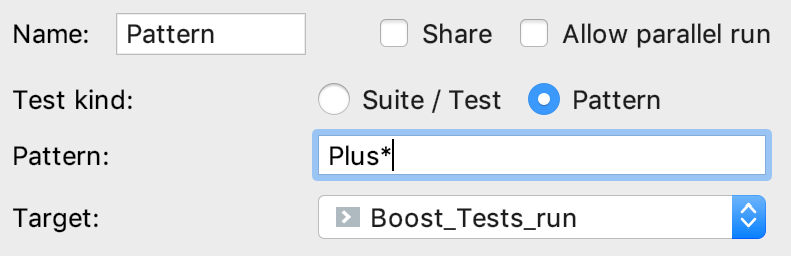

You can use wildcards when specifying test patterns. For example, set the following pattern to run only the PlusOneDiff and PlusFour_Leap tests from the sample project:

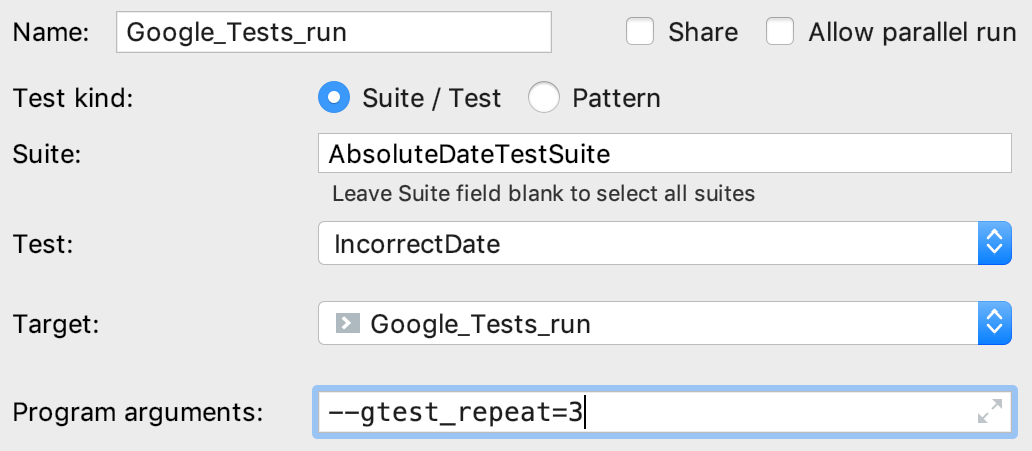
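Wildcard patterns typically use `*` for any sequence of characters and `?` for a single character. Google Test's `--gtest_filter`, for example, also accepts several patterns separated by colons.

The sketch below is a hypothetical, simplified matcher that ignores negative patterns. It is not CLion's implementation. The names `DateConverterFixture.PlusOneDiff` and `DateConverterFixture.PlusFour_Leap` are assumed full names for the sample tests.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Matches name against a glob: '*' = any sequence (possibly empty), '?' = any single char.
bool glob_match(const char* pattern, const char* name) {
    if (*pattern == '\0') return *name == '\0';
    if (*pattern == '*')  // either '*' matches nothing, or it consumes one more char
        return glob_match(pattern + 1, name) || (*name != '\0' && glob_match(pattern, name + 1));
    if (*name != '\0' && (*pattern == '?' || *pattern == *name))
        return glob_match(pattern + 1, name + 1);
    return false;
}

// A filter is a ':'-separated list of globs; a test is selected if any glob matches.
bool filter_matches(const std::string& filter, const std::string& test_name) {
    std::size_t start = 0;
    while (true) {
        const std::size_t end = filter.find(':', start);
        const std::string glob =
            filter.substr(start, end == std::string::npos ? std::string::npos : end - start);
        if (glob_match(glob.c_str(), test_name.c_str())) return true;
        if (end == std::string::npos) return false;
        start = end + 1;
    }
}
```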

In other fields of the configuration settings, you can set environment variables or command-line options. For example, in the Program arguments field, you can set -s for Catch2 tests to show the full output for passing tests as well, or --gtest_repeat to run a Google test multiple times:

The output will look as follows:

    Repeating all tests (iteration 1) ...
    Repeating all tests (iteration 2) ...
    Repeating all tests (iteration 3) ...

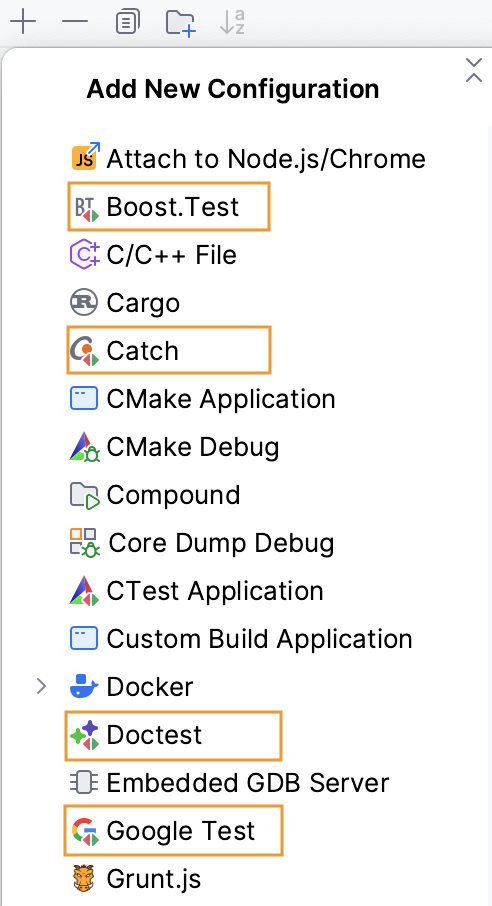

Gutter icons for tests

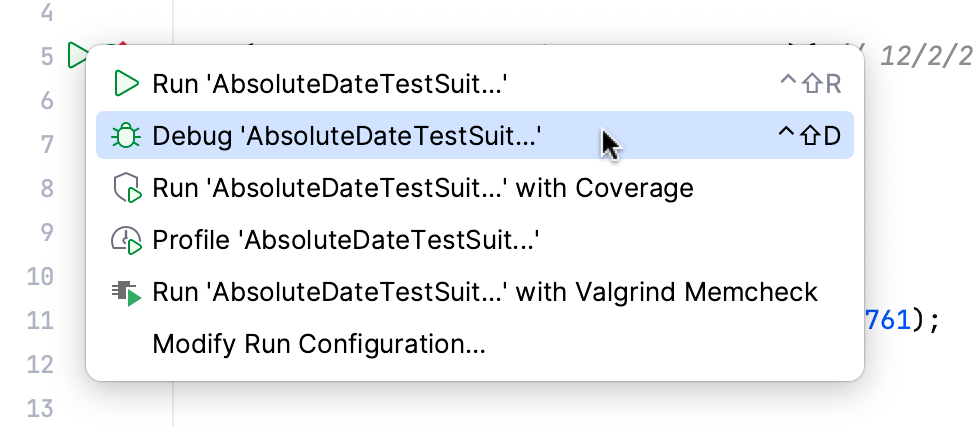

In CLion, there are several ways to start a run/debug session for tests, one of which is using special gutter icons. These icons help you quickly run or debug a single test or a whole suite/fixture:

Gutter icons also show test results (when already available): success or failure.

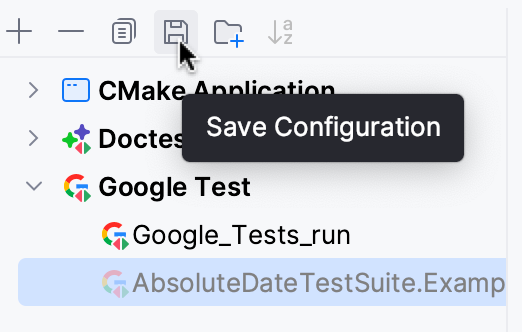

When you run a test/suite/fixture using gutter icons, CLion creates temporary Run/Debug configurations of the corresponding type. You can see these configurations greyed out in the list. To save a temporary configuration, select it in the dialog and press :

Test runner

When you run a test configuration, the results (and the process) are shown in the test runner window that includes:

progress bar with the percentage of tests executed so far,

tree view of all the running tests with their status and duration,

tests' output stream,

toolbar with the options to rerun failed tests, export or open previous results saved automatically, sort the tests alphabetically to easily find a particular test, or sort them by duration to understand which test ran longer than others.
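The bookkeeping behind the duration sort and the rerun-failed action can be sketched in plain C++. The sketch does three things:

- times each test with `std::chrono::steady_clock`,
- sorts the records by duration,
- collects the names of failed tests.

This is a hypothetical illustration of the idea, not how CLion's test runner is implemented.

```cpp
#include <algorithm>
#include <cassert>
#include <chrono>
#include <functional>
#include <string>
#include <utility>
#include <vector>

struct TestRecord {
    std::string name;
    bool passed;
    std::chrono::nanoseconds duration;
};

using NamedTest = std::pair<std::string, std::function<bool()>>;

// Runs each test, recording its status and how long it took.
std::vector<TestRecord> run_timed(const std::vector<NamedTest>& tests) {
    std::vector<TestRecord> records;
    for (const auto& [name, fn] : tests) {
        const auto start = std::chrono::steady_clock::now();
        const bool ok = fn();
        const auto elapsed = std::chrono::steady_clock::now() - start;
        records.push_back({name, ok, std::chrono::duration_cast<std::chrono::nanoseconds>(elapsed)});
    }
    return records;
}

// Sorts records so that the slowest test comes first.
void sort_by_duration(std::vector<TestRecord>& records) {
    std::stable_sort(records.begin(), records.end(),
                     [](const TestRecord& a, const TestRecord& b) { return a.duration > b.duration; });
}

// Collects the names of failed tests, for example, to rerun only those.
std::vector<std::string> failed_names(const std::vector<TestRecord>& records) {
    std::vector<std::string> names;
    for (const auto& r : records)
        if (!r.passed) names.push_back(r.name);
    return names;
}
```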

Other features

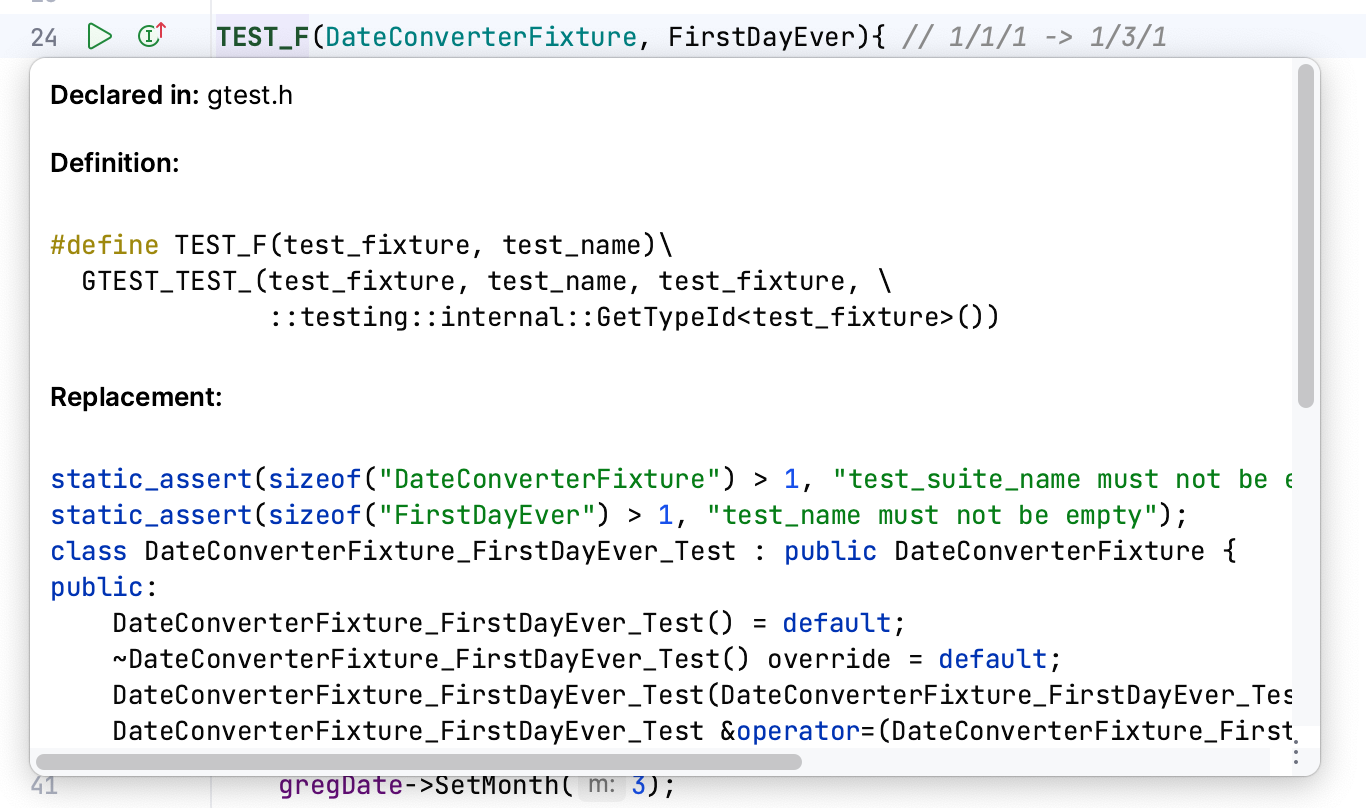

Quick Documentation for test macros

To help you explore the macros provided by testing frameworks, the Quick Documentation popup (Ctrl+Q) shows the final macro replacement and formats it properly. It also highlights the strings and keywords used in the result substitution:

Show Test List

To reduce the time of initial indexing, CLion uses lazy test detection. It means that tests are excluded from indexing until you open some of the test files or run/debug test configurations. To check which tests are currently detected for your project, call Show Test List from . Note that calling this action doesn't trigger indexing.